( ESNUG 581 Item 2 ) ---------------------------------------------- [03/23/18]

Subject: User kickstarts InFact PSS by using *only* System Verilog as input

In contrast, InFact's killer graph-based constraint solver means I do

NOT have to guide/assist the System Verilog constraint solver. I only

set out objectives (goals) of what I want to solve -- and InFact does

the graph or derivation of the path to get there, as well as manages

all the dependencies and redundancies.

After we adopted InFact, we no longer do extra constraint guidance.

In my next section I will cover Mentor InFact modeling, libraries, and

detail how their hierarchical graph-based approach works.

- [ Light Bulb Man ]

User on why MENT InFact leads graph-based PSS

From: [ Light Bulb Man ]

Hi John,

Here's my part 2.

FUNCTIONAL MODELING FOR INFACT

When people talk about "modeling" in InFact, they really mean constraining.

It's where I enter my functional, or application-specific, constraints. For

example, the tool needs to know that I'm trying to send a packet to the

cloud that conforms to our internal cloud standard; if I don't form the

packet correctly, the cloud treats it as an attack and drops the packet.

To constrain that, I put in a header with a particular encapsulation

hierarchy; it has a special signature and length, and it must arrive in the

right order in a stream of packets, and so on.

That is the extent to which I set my modeling constraints. InFact then

derives the logical relationships/dependencies between the different

variables that I use in my stimulus and infers the correct order ... and

when to solve which one. We don't need to figure those out. InFact

does it for us.
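
As a rough illustration of the idea of deriving a solve order from declared dependencies, here is a minimal Python sketch. It is not InFact's algorithm or language; it uses an ordinary topological sort from the Python standard library, and the field names (`encapsulation`, `payload`, `length`, `signature`, `stream_position`) are hypothetical stand-ins for the packet header fields described above.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical packet fields. Each key maps to the set of fields that must be
# solved before it. These names are illustrative only.
depends_on = {
    "encapsulation": set(),
    "payload": {"encapsulation"},
    "length": {"payload"},
    "signature": {"encapsulation", "length"},
    "stream_position": {"signature"},
}


def solve_order(deps):
    """Return an order in which every field comes after its dependencies."""
    return list(TopologicalSorter(deps).static_order())


order = solve_order(depends_on)
print(order)
```

The user only states which field depends on which; the ordering falls out of the graph rather than being written by hand.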

---- ---- ---- ---- ---- ---- ----

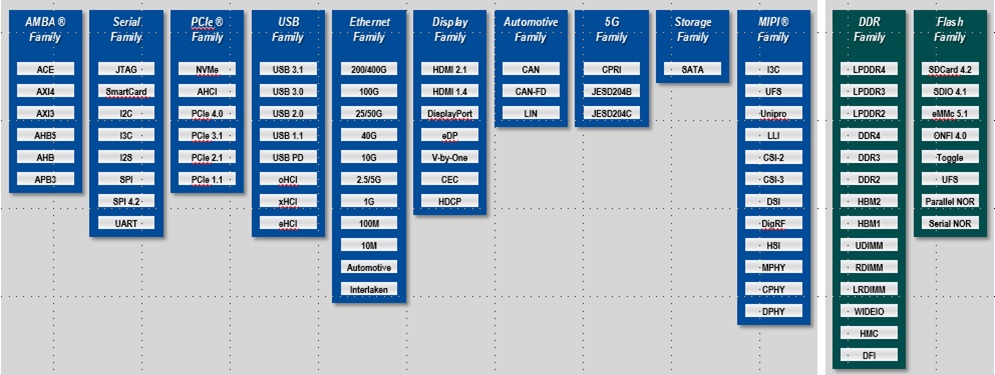

INFACT STANDARD PROTOCOL LIBRARIES

Mentor has InFact libraries that come with standard checks to see if your

signals are valid. For example, when you say that your device "supports

USB" you know that anything that has a correct USB connector hooked to it

will work. For that to hold, the signals must come back at the right time,

at the right level, etc. -- those are called protocol-level checks.
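
To make "right time, right level" concrete, here is a minimal Python sketch of what a protocol-level check might look like in principle. It does not reflect the contents of Mentor's libraries; the `Sample` type and the limits `MAX_RESPONSE_NS` and `MIN_HIGH_V` are made-up values for illustration, and real limits would come from the protocol specification.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    time_ns: float  # when the response was observed
    level_v: float  # measured signal level


# Hypothetical limits, chosen only for this example.
MAX_RESPONSE_NS = 100.0
MIN_HIGH_V = 2.0


def check_response(sample: Sample) -> list[str]:
    """Return a list of violations; an empty list means the check passed."""
    errors = []
    if sample.time_ns > MAX_RESPONSE_NS:
        errors.append("response arrived too late")
    if sample.level_v < MIN_HIGH_V:
        errors.append("signal level too low")
    return errors


good = check_response(Sample(time_ns=50.0, level_v=3.3))
bad = check_response(Sample(time_ns=150.0, level_v=1.0))
```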

I use these prequalified libraries for all the standard protocols in my

chip, e.g. Ethernet (40 Gigabit, 100 Gigabit), PCIe, and the DDR memory.

These libraries definitely save me time. I know there's something like

100 of them, but I don't know the exact list off hand.

I also develop my own private libraries for our internal protocols.

---- ---- ---- ---- ---- ---- ----

THE INFACT SYSTEM VERILOG IMPORTER

Mentor has its own proprietary graph-based language to drive InFact and

other related tools. I know the language now, but that was a negative

when we first started. I told my Mentor AE,

"If you ask a software engineer to learn a new language, it's fine.

However, most verification engineers have a hardware background,

and learning a new language is a headache for them."

That was 3 years ago. Mentor's response was to create an InFact System

Verilog Importer that automatically generates the equivalent of their

in-house graph language from our existing System Verilog constraints

and coverage goals.

The first time I used their System Verilog importer, I wrote all my

constraints in System Verilog, literally pressed a button, and it

automatically converted them into the InFact graph-based language. That

was it. It was a one-to-one correlation for our use case.

It worked back then, but I was worried about future chips where messy

constraints would confuse this importer.

But as I got more comfortable, I found from tinkering with the importer

output that I was learning the MENT Graph language. Over time, I would

bypass the auto-translate and go in and tweak a few things.

And of course, once you're willing to go under the hood, you can gain a

lot more control.

This initial automated translation was key to helping new users ease their

learning curve. Our engineers didn't have to learn all the language up

front to realize the tool's benefit. Their importer solved our

chicken-and-egg learning-curve situation by giving us the benefits of

graph tools early on.

---- ---- ---- ---- ---- ---- ----

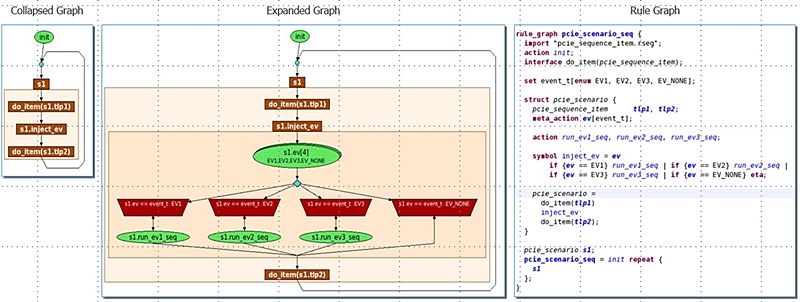

INFACT GRAPHS & HIERARCHY

The very first thing we do in any engineering problem is to divide and

conquer. No one would design an entire system in one single monolithic

entity, not for a schematic, or a graph, or even a program or procedure. We

always break it up and make it hierarchical.

If you model a CPU, one simple way to break it up could be just the ALU and

the instruction decode and other control units. You put in the basic

instruction stream, look at the code, and then dispatch commands to all the

different units inside of the CPU to perform their tasks in a timely manner,

and in the right order. You break those things up and then adhere to some

kind of protocol in between the units so that they can communicate with

each other correctly.

InFact's graph mode fully supports hierarchy. Each node can be a simple

node or another graph -- i.e. you can have a graph inside a graph. You can

decompose or construct the entire system from simple subcomponents. If you

partition your system at the right level, it would be a one-to-one mapping

between what you show graphically, and what you enter in the code.
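
The "graph inside a graph" idea can be sketched in a few lines of Python. This is only an illustration of hierarchical composition, not InFact's data model; the `Leaf` and `Graph` classes and the CPU unit names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Leaf:
    name: str  # a simple node


@dataclass
class Graph:
    name: str
    children: list = field(default_factory=list)  # Leaf or nested Graph


def flatten(node, prefix=""):
    """List every simple node with its full hierarchical path."""
    path = prefix + node.name
    if isinstance(node, Leaf):
        return [path]
    leaves = []
    for child in node.children:
        leaves.extend(flatten(child, path + "."))
    return leaves


# Hypothetical CPU model built from subgraphs.
cpu = Graph("cpu", [
    Graph("decode", [Leaf("fetch"), Leaf("parse_opcode")]),
    Graph("alu", [Leaf("add"), Leaf("shift")]),
    Leaf("writeback"),
])

paths = flatten(cpu)
print(paths)
```

Each level of nesting in the data mirrors one level of the drawing, which is the one-to-one mapping between picture and code that a good partition gives.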

Once you know the tool, it is easier to work in graph mode than just textual

code.

- The graph can illustrate concurrent/parallel relationships more

clearly than if you were to read straight code. People tend to be

visual. If I show you a graph with a pointer looping back to itself,

you can immediately see that this particular code gets repeated

multiple times.

- With software code, you have a concept of a flow chart. But when

you read code, if I start by giving you the exact syntax of

System Verilog code "for(int i=0; i<10; i++) begin ... end" so on

and so forth -- it's hard to spot errors, intent, and simply what

is going on. Graphs don't have that problem.
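
The point about a pointer looping back to itself can be shown with a small Python sketch. The node names are hypothetical, and the adjacency-dict representation is just one simple way to hold a graph, not how InFact stores it.

```python
# Hypothetical flow in which "send_packet" repeats, mirroring a for-loop body.
edges = {
    "start": ["send_packet"],
    "send_packet": ["send_packet", "done"],  # edge back to itself = repetition
    "done": [],
}


def repeated_nodes(graph):
    """Return the nodes that have an edge pointing back to themselves."""
    return [node for node, targets in graph.items() if node in targets]


loops = repeated_nodes(edges)
print(loops)
```

In the graph, the repetition is a visible property of one node, whereas in the `for` loop it has to be read out of the syntax.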

A graph is just a connection from point to point. A computer can process a

graph with millions of nodes; for us, even when we build a chip, if you call

each of the gates on the chip a node, and each wire that hooks them up an

edge, the chip layout is essentially one very large graph.

But if you ever look at a chip layout it's so dense it looks like just a

big blob of black. In the same way, if you try to reconstruct a graph

with even 100 nodes, it's incomprehensible if it's densely populated.

The first thing I asked Mentor about InFact was how they dealt with these

kinds of massive connections. They mentioned their hierarchical approach.

I can collapse the 5 or 10 nodes that represent (for example) my CPU

instruction decoder into a single instruction decode graph; then, at a

higher level, I can see the interactions between my instruction decoder

and ALU.
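
Collapsing a group of nodes so that only the higher-level interactions remain can be sketched as follows. This is a generic graph operation written in Python under assumed names; the node names and the `decoder`/`alu` grouping are hypothetical and say nothing about how InFact implements it.

```python
def collapse(edges, groups):
    """Collapse nodes into named groups and return the edges between groups.

    edges  -- iterable of (source, target) node pairs
    groups -- dict mapping each node to the name of the group it belongs to
    """
    collapsed = set()
    for src, dst in edges:
        g_src, g_dst = groups[src], groups[dst]
        if g_src != g_dst:  # hide connections internal to a group
            collapsed.add((g_src, g_dst))
    return sorted(collapsed)


# Hypothetical low-level nodes and connections.
edges = [
    ("fetch", "parse_opcode"),
    ("parse_opcode", "read_operands"),
    ("read_operands", "add"),
    ("read_operands", "shift"),
    ("add", "flags"),
]
groups = {
    "fetch": "decoder", "parse_opcode": "decoder", "read_operands": "decoder",
    "add": "alu", "shift": "alu", "flags": "alu",
}

top_level = collapse(edges, groups)
print(top_level)
```

Five low-level connections reduce to a single decoder-to-ALU edge, which is the view you want when reasoning about how the two units interact.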

With InFact's graph-based approach:

- They can derive the global scheme from the graph.

- They treat each of the graph nodes as a compute entity.

- When you drill down to a particular graph node, inside of that

node, you can enter/execute any code you want, i.e. each compute

node can act like its own procedure.

They organize it to make it more manageable for a human designer.
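
The idea that each graph node acts like its own procedure can be illustrated with a short Python sketch. The `run_path` helper, the node names, and the state dictionary are assumptions made for this example and are not InFact constructs.

```python
def run_path(actions, path, state):
    """Visit nodes in order, letting each node's procedure update the state."""
    for node in path:
        state = actions[node](state)
    return state


# Hypothetical per-node procedures; each takes the state and returns a new one.
actions = {
    "decode": lambda s: {**s, "op": "add"},
    "alu": lambda s: {**s, "result": s["a"] + s["b"]},
    "writeback": lambda s: {**s, "done": True},
}

final = run_path(actions, ["decode", "alu", "writeback"], {"a": 2, "b": 3})
print(final)
```

The graph decides which nodes run and in what order, while the code inside each node stays free-form.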

In my last section I'll discuss how you define your test intent with InFact

so your coverage space doesn't explode, along with debug and coverage.

(See ESNUG 581 #3.)

- [ Light Bulb Man ]
