( ESNUG 580 Item 3 ) ---------------------------------------------- [02/23/18]

Subject: Cooley schooled by user on why MENT InFact leads graph-based PSS

That is, while at DAC in Austin, I talked with Ravi Subramanian, the new

4-months-in-the-job VP & GM of All AMS and Digital Simulation at Mentor

(with the "and Digital" being new for Ravi; he's done SPICE at MENT ever

since they acquired him and BDA back in 2013). When I said "it looks like

Portable Stimulus is going to be hot at DAC this year", Ravi replied with

something like:

"Yes, our InFact is doing really well blah blah blah customer

traction blah blah blah graph-based PSS blah blah blah..."

I can't remember his exact words from that time.

Now, with Anirudh's Perspec getting 3,097 words from 33 users about Perspec;

and even Aart's Unannounced Mystery PSS tool getting 188 words from 3 users

in this survey -- it's embarrassing that Siemens MENT Questa InFact PSS

only got 24 words in 2 throwaway user comments here... So I'll just ask

now before Anirudh asks later: "Where's Waldo, Ravi?"

- from Mentor InFact was an early No Show in PSS tool wars

From: [ Spider Man ]

Hi, John,

Please keep me anonymous.

I can't speak for why the other InFact users didn't speak up, but I can take

a few moments to give my impressions of the tool.

In a nutshell, John, you couldn't be more wrong. Ravi was right. InFact

is ahead in PSS.

It's a graph-based tool which has repeatedly helped us close functional

coverage 10x faster than constrained random approaches. It supports

SystemVerilog, SystemC, and VHDL, plus UVM and OVM.

I've personally been using InFact for 4 years, and my team was using it

even before then.

INFACT CLOSES COVERAGE 10X FASTER BY CUTTING REDUNDANCY

Our group uses SystemVerilog for our test benches, combined with a UVM

methodology. We might have 15 features to verify in a block. One feature

might be something like an Audio Bridge Video (ABV).

For every feature we verify, our engineers develop coverage models that

include the set of data points that must be covered in our tests. Closing

to 100% coverage is what guarantees that a feature has been verified.

Traditionally, we used directed tests or constrained random tests to

generate stimulus. Directed tests give engineers good control over the

test stimulus.

- Directed tests can guarantee that every scenario of the feature

will be verified. But with the increased complexity of features,

this approach needs an ever larger number of verification engineers

to hand code the tests and verify a feature. Not practical.

- When we use a constrained random verification approach, our

engineers run 100s or 1000s of simulations, checking their coverage

after every regression round. Constrained random techniques give

engineers less control over stimulus generation; the control rests

more with the SystemVerilog randomization. This leads

to a lot of redundant test cases being generated.

The problems with the constrained random method occur when there are complex

features and/or there are a lot of properties to be covered. For example,

the ABV feature might have a mix of different unique registers, frame

contents, etc. to be verified.

When the number of coverbins/coverpoints is large, it typically takes us

up to 15 days to close 100% coverage due to all of the redundant stimulus

generation with constrained random. If the project has 10-15 complex

features, where each one takes 15 days to just close the coverage, the

duration of our constrained random verification cycle skyrockets.

This is where InFact's intelligent automation comes into play. It

got us targeted stimulus by eliminating redundancy in constrained random

tests. The best of two worlds: directed and constrained random.

If closing coverage with constrained random took: 10 days

Then closing coverage with InFact would take: ~1 day

The main reason InFact closes so much faster is that it remembers all of the

already covered cases and then eliminates these redundant cases.

We give InFact a targeted stimulus spec, and program it so that if a

certain sequence of properties is covered once, InFact doesn't go through

it again. It's accounted for in the coverage, so no more wasted runs

taking up time and licenses.
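
The redundancy argument can be illustrated with a small Python sketch. This is not InFact code and says nothing about how the tool is implemented; the bin count and the function names are made up for illustration. It also assumes uniform random draws, which real constrained random stimulus is not, so treat the gap it shows as indicative only.

```python
import random

def random_draws_to_cover(num_bins, seed=0):
    """Count uniform random draws needed until every bin has been hit once."""
    rng = random.Random(seed)
    seen = set()
    draws = 0
    while len(seen) < num_bins:
        seen.add(rng.randrange(num_bins))  # may land on an already-covered bin
        draws += 1
    return draws

def non_redundant_draws_to_cover(num_bins, seed=0):
    """Visit each bin exactly once, in shuffled order, never repeating a bin."""
    rng = random.Random(seed)
    order = list(range(num_bins))
    rng.shuffle(order)
    return len(order)

if __name__ == "__main__":
    bins = 1000  # hypothetical number of cover bins
    print("pure random draws:  ", random_draws_to_cover(bins))
    print("non-redundant draws:", non_redundant_draws_to_cover(bins))
```

For uniform draws over N bins, the expected number of draws needed to hit every bin grows roughly as N ln N, while the no-repeat walk needs exactly N.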

WE DIDN'T HAVE TO LEARN A NEW LANGUAGE

When I start an InFact project, the tool asks what methodology I want, such

as UVM or OVM, and what language. Once I enter SystemVerilog and the UVM

option, InFact immediately generates a SystemVerilog template with a UVM

sequence-based project to code in my properties. (Additionally, Mentor

also has their own list of options outside of standard SystemVerilog to

choose from, if it suits the user's needs.)

InFact generates an empty rules file for me to code the different properties

I want to verify, their constraints, sequences & their range. With

constrained random tests, we generate stimulus in SystemVerilog that

includes our constraints. The InFact rules file is where these constraints for

different properties are coded. It then automatically translates our

constraints and rule properties and displays a clear graph of our property

list and the properties we've coded.

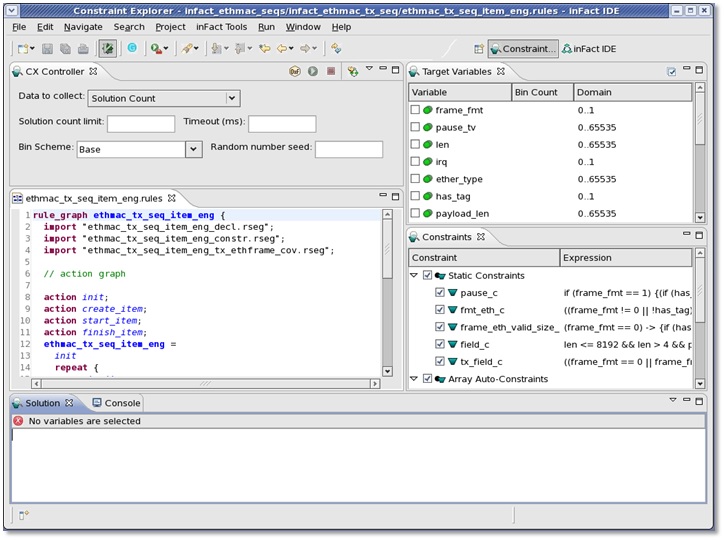

InFact Constraint Explorer


The fact that we did not have to learn a new PSS language (unlike Breker

or Cadence Perspec) was a big positive for us.

- It only took me a couple of days to learn how to use InFact.

Because InFact can use plain SystemVerilog, plus give us a UVM

sequence, it was mostly the GUI that I needed to familiarize myself

with. For example, how to generate the graph, plot coverage, and

how to integrate a sequence, representing conditional branching

using the graph. InFact is an Eclipse-based tool and easy to

navigate.

- Once I set InFact up initially, deploying it for each new project

is easy. I only need to make minimal changes, such as changing

our rule files and constraints a bit, and adding new coverage

strategies to customize them for our new project. For any new

properties in our new project we input our rules file into InFact,

generate a graph, and proceed.

Whenever we use a commercial EDA tool, we want it well-integrated with our

existing environment. We cannot afford to waste a large amount of time

understanding the tool's language or trying to develop an entirely new

infrastructure to support the tool. It should just fit in and work.

InFact does that.

Because UVM is the dominant methodology that most people are moving to

these days, InFact does very well because it generates UVM sequence

files directly, based on the information we provide as input.

We then integrate the InFact generated sequence into our test bench.

Apart from the graph construction and setup, that's all that is needed.

Once the file is generated, we just call the file or create an instance

of the file and run it in our test. This saves us a ton of time!

INFACT'S COVERAGE STRATEGY

After entering our rules, we create our coverage strategy. We define our

coverage strategy, or 'test intent', to help us decide which properties we

want for a particular path.

For example, for property A and property B, we'd cover all combinations of

A with B.

For every feature, there will be a number of key properties whose

combinations have to be exhaustively verified. Such a huge coverage space

will take days/weeks to finish with a constrained random approach based on

that feature's complexity.

Once we have our coverage model ready, we go back to the graph that InFact

generated and put our final coverage strategy there.

- Which properties should be crossed with what properties?

- What sequence of coverage do we want?

For Mentor's path (sequence) coverage: We define a starting and ending point

for the properties, and in between them we define the properties we want to

cross in a particular sequence. Mentor's graph looks like a flow chart,

with a lot of nodes (stimulus model actions) connected with arrows to

indicate the paths.

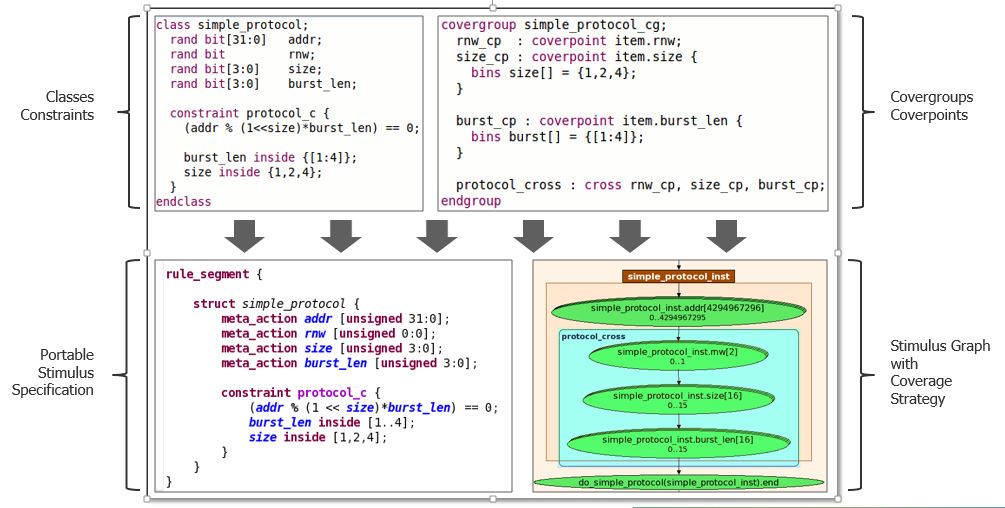

InFact SystemVerilog Constraint Importer


If we have 10 properties defined in our rules file, we can choose which

properties to cross with each other. For example, on my graph I may want

to cross properties #3 through #7. InFact can generate all

combinations of fields from #3 to #7.

We enter our rules via a text file (System Verilog), then set our coverage

strategy utilizing InFact's graphical editor. The good part is InFact's

editor gives us a very clear visualization of the different paths we've

defined, so we can clearly identify them. For example:

- For properties 3-7, there may be one path coverage that is pink

- For properties 2-8, the path will be a different color.

We can also cross two parts together; it's visually very clear to us what

we're doing because of the colors.

As an example, we have a table with 30 properties, and our verification

intent is to make sure different combinations of these 30 properties are

covered. Crossing all 30 properties may not be relevant, so we can

pick the 10 relevant properties and prioritize them in the graph. (State

space reduction.)

For each of these properties say I have address, data and priority, where:

- Address can be 4 values

- Data can be another 4 values

- Priority another 4 values.

In my rules file, I give 4 values for address, 4 values for data, 4 values

for priority. In my graph I can say address, data and priority are all one

group (path coverage) and ask InFact to generate all combinations of these

three things for me, which is 4 x 4 x 4. When InFact is running, it will

try to fill all values of these every time it goes through this path without

repeating already traversed paths. (Reducing the target space.)
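
The 4 x 4 x 4 arithmetic above can be checked with a few lines of Python. This is only a sketch of the combinatorics, not the InFact rules-file syntax; the concrete address, data and priority values are placeholders invented for the example.

```python
from itertools import product

# Hypothetical legal values; the post only says each property has 4 values.
address_values = [0x0, 0x4, 0x8, 0xC]
data_values = [0x00, 0x55, 0xAA, 0xFF]
priority_values = [0, 1, 2, 3]

def all_combinations():
    """Enumerate every (address, data, priority) combination exactly once."""
    return list(product(address_values, data_values, priority_values))

combos = all_combinations()
print(len(combos))       # 4 * 4 * 4 = 64 combinations
print(len(set(combos)))  # 64 again: no combination appears twice
```

Walking this list once visits all 64 combinations with no repeats, which is the behaviour the path coverage group is asking for.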

This path coverage I defined is a one-to-one match to my coverage model and

constraints that I already created in my System Verilog test bench. So,

for whatever crosses in this coverage model, I only need to make sure they

are translated and mapped correctly to the properties of the InFact graph.

After that, my entire coverage strategy is done.

CLOSING COVERAGE

So far, with InFact, for each feature I:

- Define my rules

- Define the constraints

- Define the coverage strategy in the generated graph

When I run my tests for a feature, instead of doing traditional

constraint randomization, InFact will do the work of randomizing these

properties and go through these paths and close coverage for me.

Once InFact exits the simulations then I go in and I check if all my

coverage is 100%. For our work, basic runtime coverage is: run our

simulation, collect the coverage post simulation and make sure that all

parts are covered. In a traditional constrained random test, we have to

wait for all 1000's of simulations (or however many were launched) to

finish before we can check coverage. But with InFact, the tool exits

immediately after coverage is achieved.

The test bench has a coverage model, which I write in System Verilog to

specify what properties I want to cover and the cross coverage of these

properties.

I'm defining my coverage strategy in the graph from my System Verilog

coverage model. (The engineer has already coded all combinations he wants

for that feature inside his System Verilog coverage model; what I do is to

map the coverage model to the InFact graph.)

When we define our coverage strategy in our graph, InFact will tell us how

many paths there are in a particular cross we have defined. Let's say in

my coverage model, I manually code in System Verilog that

A crosses B crosses C crosses D

After I define my A to D strategy, it shows the # of paths / combinations

involved for those parameters. For example, it will say it will cover

3000 combinations for A to D.

InFact then partitions those 3000 combinations across multiple clients. (For

our real life blocks we can easily have 50,000 cover points, and we use

just 10 InFact clients to get 24 hour runs.)

This figure shows InFact doing parallel processing across multiple CPUs in

a regression farm. It can unfold the sequences from a single graph and

distribute them across 1000's of CPUs.

Unlike constrained random testing, each CPU is given different sequences

to execute, eliminating redundant work between CPUs. The results from

each CPU are then automatically collected by the InFact Distributor, so

they can be analyzed, and only the failing tests are analyzed for debug.
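
One simple way to picture giving each client a different, non-overlapping share of the work is a round-robin split. The sketch below is an assumption made for illustration: the post does not describe how InFact actually divides the combinations, and `partition` is a made-up helper, not an InFact API.

```python
def partition(items, num_clients):
    """Split items into num_clients disjoint, near-equal shares (round-robin)."""
    return [items[i::num_clients] for i in range(num_clients)]

# 3000 combinations across 10 clients, matching the numbers used in the text.
combinations = list(range(3000))
shares = partition(combinations, 10)

print([len(s) for s in shares])  # ten shares of 300 each

# Every combination lands in exactly one share, so no two clients overlap.
assigned = [c for share in shares for c in share]
print(len(assigned) == len(set(assigned)) == len(combinations))
```

Because the shares are disjoint and together cover the full list, no simulation time is spent on a combination that another client already owns.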

When InFact is done running, it lets us know that we've covered all paths

from A to D; we collect this data from all the clients to see how much it

has covered in our System Verilog test bench.

If InFact has covered this 100%, this feature is done. If it's not 100%,

then we debug to see if the problem is with:

- Our rules

- Our constraints

- The way we defined our path coverage in the graph

DEBUG

For design debug, we either go through the log files by hand; or use a

waveform viewer, like Verdi. Let's say we define our 3000-path

A to D coverage as:

- Path X: coverage strategy from point A to point B

- Path Y: coverage strategy from point B to point C

- Path Z: coverage strategy from Point C to Point D

Each verification team owns a certain number of InFact licenses/clients, and

each license/client has one slave VCS/IES/Questa simulator. InFact's

Simulation Distribution Manager (SDM) is a central manager that distributes

these path coverages across different clients, monitors which client is

covering what path, and reports the coverage results.

When we launch the InFact simulations, the distributed verification manager

will pop up, monitoring the progress. This monitor will tell us there are:

- 600 paths in coverage area for path X, 92% (552) covered

- 900 paths in coverage area for path Y, 34% (306) covered

- 500 paths in coverage area for path Z, 100% (500) covered

It tells us how many paths there are and what percent of paths are done. If

all our combinations are covered and coverage goals have been met, InFact's

job is done and the SDM exits.
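
The bookkeeping behind a progress report like this is plain arithmetic. The Python below is a sketch using only the three example figures quoted above; the dictionary layout and function names are invented for illustration and are not the SDM's data format.

```python
# (total paths, covered paths) per coverage area, taken from the example above.
paths = {"X": (600, 552), "Y": (900, 306), "Z": (500, 500)}

def percent(covered, total):
    """Covered paths as a percentage of the total."""
    return 100.0 * covered / total

def summarize(areas):
    """Return (total paths, covered paths, overall percent) across all areas."""
    total = sum(t for t, _ in areas.values())
    covered = sum(c for _, c in areas.values())
    return total, covered, percent(covered, total)

def all_done(areas):
    """True only when every area has all of its paths covered."""
    return all(c == t for t, c in areas.values())

for name, (total, covered) in paths.items():
    print(f"path {name}: {covered}/{total} = {percent(covered, total):.0f}%")

print(summarize(paths))  # totals across the three listed areas
print(all_done(paths))   # False: X and Y still have uncovered paths
```

In this sketch the run counts as finished only when `all_done` returns True, mirroring the exit condition described above.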

InFact makes debugging simpler by storing the history for each client. If

any InFact simulation fails, we don't have to run all 10 clients again to

see what went wrong where. Once we spot the failed simulation, we simply

rerun this using the history file with waveforms or log messages -- and

continue with debugging.

InFact saves time by allowing us to quickly narrow down the stimulus causing

the error for debug. If simulation/client #2 fails, we only need to rerun

#2's history file with the waveforms (or log messages) and debug only that.

We don't have to worry about what the other 9 clients are doing.

The tool is fast, but it still takes a lot of time to run and debug the

entire coverage space. So rather than rerunning all 10 clients, I can

just take the one failed simulation/client and spend only 2-3 hours to run

and debug it again.

QUICKIE RECAP:

There are two basic ways to have our model hit 100% coverage on all our

combinations.

1. We do it in the traditional constrained random way, where we run

our simulation regressions taking 10-20 days and then come back

and collect coverage against our models to see if we've hit 100%.

or

2. We translate our coverage model into an InFact graph, and then we

run our tests integrating with InFact generated sequences. To

see if we hit 100%, we check it with our coverage model and test

bench and confirm that InFact generated the stimulus we expect.

In 24 hours with InFact, we can finish coverage for things that would take

us 10 days with constrained random. And because InFact reduces redundancy

and has the intelligence to know that something has already been covered

and not to do it again, we don't waste our Questa simulations. Without

InFact, it's not possible to eliminate that redundancy accurately.

That 10x savings is directly related to the number of InFact licenses that

your company has. As I mentioned earlier, the InFact manager distributes

the coverage across multiple clients. We had 10 InFact licenses to get

50,000 coverage points in 24 hours. If instead we had 40 InFact licenses,

we probably could finish in 6 or 7 hours.
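
As a back-of-the-envelope check on that estimate, here is the ideal case in Python. It assumes runtime scales perfectly inversely with the number of licenses, which is an idealization and not something the post measured; the function name is made up for the example.

```python
def ideal_runtime_hours(base_hours, base_licenses, new_licenses):
    """Runtime if the same total work splits evenly across more licenses."""
    return base_hours * base_licenses / new_licenses

print(ideal_runtime_hours(24, 10, 40))  # 6.0 hours under perfect scaling
```

The "6 or 7 hours" figure sits at or slightly above this ideal 6 hours.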

CONCLUSION

We are very happy with InFact and I would definitely recommend it to other

users. The learning curve is simple, the GUI is really comfortable to work

with, and we don't have to learn a new language.

- InFact's biggest benefit is with 50,000 cover points it can close

coverage 10X faster by eliminating redundancies. (I would say

10,000 coverage points is the breakpoint where it makes sense.)

- The second benefit is it helps us to capture more design bugs

because InFact automatically generates a lot of corner cases that

we'd normally miss with human coded testbenches. We have caught

certain corner cases that we missed with traditional methods.

- A third very good advantage is that it minimizes the number of lines of

testbench code we have to write by hand, because we're moving to

its graph-based approach. That makes it easier to maintain and pull

together across multiple projects.

Right now, what we have with InFact and System Verilog and UVM works. If a

few years from now, PSS comes into play and everyone is adopting PSS, at

some point we might consider moving to the standard's template/language;

which Mentor says they will support.

In the meantime, InFact is straightforward and useful for us without having

to wait for the standard.

- [ Spider Man ]

---- ---- ---- ---- ---- ---- ----

Related Articles

Cadence Perspec wins #1 in #1 "Best of 2017" with Portable Stimulus

Breker TrekSoC takes #2 in #1 "Best of 2017" with Portable Stimulus

Mentor InFact was pretty much an early No Show in the PSS tool wars

Spies hint that Synopsys is making a PSS tool, but it wasn't at DAC
