( ESNUG 567 Item 2 ) -------------------------------------------- [02/23/17]

Subject: Prakash on DVcon'16, portable stimulus, the end of simulation, Ajoy

Hi, John,

I'm following in the tradition of giving DeepChip our own internal
DVCon'16 Trip Report in anticipation of DVCon'17 next week.

- Prakash Narain
Real Intent, Inc. Sunnyvale, CA

---- ---- ---- ---- ---- ---- ----

DVCON ATTENDANCE NUMBERS

2006 :################################ 650

2007 :################################### 707

2008 :######################################## 802

2009 :################################ 665

2010 :############################## 625

2011 :##################################### 749

2012 :########################################## 834

2013 :############################################ 883

2014 :############################################ 879

2015 :############################################### 934

2016 :############################################ 882

The 2016 conference attendance fell back to its traditional level of 882,
which is roughly the average of the last five years (2012 to 2016).
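The sketch below checks the five-year figure by averaging the 2012 to 2016 counts from the chart above. It is plain arithmetic on the published numbers. The dictionary name is only illustrative.

```python
# DVCon attendance counts, copied from the chart in this report.
attendance = {
    2006: 650, 2007: 707, 2008: 802, 2009: 665, 2010: 625,
    2011: 749, 2012: 834, 2013: 883, 2014: 879, 2015: 934, 2016: 882,
}

# Average over the last five years on the chart (2012 through 2016).
last_five = [attendance[year] for year in range(2012, 2017)]
five_year_avg = sum(last_five) / len(last_five)

print(f"2012-2016 average: {five_year_avg:.1f}")  # 882.4
print(f"2016 attendance:   {attendance[2016]}")
```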

Wally Rhines' keynote touched on the hot topics of automotive safety and
security. There was a lunch-time panel on software-driven verification with
portable stimulus. The role of SW simulation tools versus formal and
emulation was the topic of one industry panel. There were also some
technical paper highlights, and Ajoy Bose talked about starting Atrenta.

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

WALLY'S KEYNOTE - Design Verification Challenges: Past, Present, Future

"Design Verification Challenges" and how engineers are going to meet them
was the theme of Mentor Graphics Chairman Wally Rhines' keynote at DVCon'16.
He had four main topics: design productivity, the ages of verification from
"0.0" to the system era, what's next, and beyond functional verification.

DESIGN PRODUCTIVITY

Wally started with interesting statistics on the growth of design.

In the past 30 years design productivity has grown by more than five orders
of magnitude (500,000X) in terms of numbers of transistors, while the number
of designers has only grown slightly since the 1980s. Cost per transistor
continues to fall along a steep logarithmic learning curve, so we can now
make 1 billion transistors for about $1. (Wally is always a sharp analyst
of EDA trends, and he did not disappoint.) He noted that the EDA software
cost per transistor decreases at the same rate as the revenue per
transistor. This is not a surprise, because the size of designs EDA tools
can handle has continued to grow to the giga-gate scale without any
corresponding rise in the list prices of the software.

IP reuse has driven a large share of design productivity gains. Wally
showed trend curves of the number of IP blocks per SoC, broken down by
IP type. CPUs/DSPs/controllers average about 40 blocks per SoC, embedded
memories average about 50, and other semiconductor IP blocks are near 70.

As he mentioned earlier, demand for design engineers has grown slowly, at
a 3.7% compound annual growth rate (CAGR). In contrast, demand for
verification engineers is growing about 3.5 times as fast, at roughly
13% CAGR.
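The sketch below shows how quickly a 3.5X gap in growth rates compounds. It projects both headcounts forward from the same starting point, using the CAGR figures Wally quoted. The ten-year horizon and the normalized starting headcount of 1.0 are assumptions made for illustration only.

```python
# CAGR figures quoted in the keynote.
DESIGN_CAGR = 0.037       # design engineers
VERIFICATION_CAGR = 0.13  # verification engineers

def grow(start: float, cagr: float, years: int) -> float:
    """Compound a starting value at a fixed annual growth rate."""
    return start * (1.0 + cagr) ** years

YEARS = 10  # assumed horizon, not a figure from the keynote
design = grow(1.0, DESIGN_CAGR, YEARS)
verification = grow(1.0, VERIFICATION_CAGR, YEARS)

print(f"rate ratio:                {VERIFICATION_CAGR / DESIGN_CAGR:.2f}")
print(f"design after {YEARS} years:       {design:.2f}x")
print(f"verification after {YEARS} years: {verification:.2f}x")
```

Over the assumed ten years, design headcount grows roughly 1.4X, while verification headcount more than triples.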

You might think that combining all this pre-verified IP would reduce the
verification burden for an SoC. Wally reminded the audience that even
though each IP block is functionally verified, combining them creates a
new, much larger state space that makes verification more difficult. That
state space grows as 2^X, where X is the number of memory bits +
flip-flops + latches + I/O.
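Wally's 2^X point implies that state spaces multiply when blocks are integrated. Two blocks with X_A and X_B storage bits give 2^(X_A + X_B) = 2^X_A * 2^X_B combined states. The sketch below uses small, made-up bit counts for three hypothetical IP blocks. It only shows how much faster the product grows than the sum (it uses `math.prod`, which needs Python 3.8 or later).

```python
import math

def state_space(bits: int) -> int:
    """Number of possible states for `bits` storage elements (an upper bound
    on the states that are actually reachable)."""
    return 2 ** bits

# Toy storage-bit counts (memory bits + flip-flops + latches + I/O) for three
# hypothetical, independently verified IP blocks. Real blocks are far larger.
blocks = {"block_a": 40, "block_b": 24, "block_c": 12}

per_block = {name: state_space(x) for name, x in blocks.items()}
combined = state_space(sum(blocks.values()))

# Integration multiplies the blocks' state spaces instead of adding them.
assert combined == math.prod(per_block.values())

print("sum of per-block state spaces:", sum(per_block.values()))
print("combined state space:         ", combined)
print(f"combined / sum: about 10^{math.log10(combined / sum(per_block.values())):.1f}")
```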

VERIFICATION AGES: FROM "0.0" TO THE SYSTEM ERA

Next, Wally took the audience on a walk down verification's memory lane.
First, LSI grew into VLSI, which demanded the creation of the first
transistor circuit simulators, CANCER and SPICE, at UC Berkeley in the
early 1970s. Gate-level simulation followed in the early 1980s.

Verification 1.0 was the RTL era, with a focus on languages and improving
performance. VHDL became a standard in 1987; Verilog was released in 1985
and became a standard in 1995. CPU clocks kept delivering more
performance, and by 2001 they were operating in the 1.5 to 2.0 GHz range.
Wally showed that the simulators themselves were also getting better:
between 2000 and 2004 Mentor's Questasim became 10X faster, independent of
the hardware.

The rise of testbench automation is Wally's Verification 2.0 era, with
the use of Specman "e", Vera, SystemC, and eventually SystemVerilog, which
became mainstream by 2012. There has been huge growth in the use of the
Accellera UVM base class libraries in design projects.

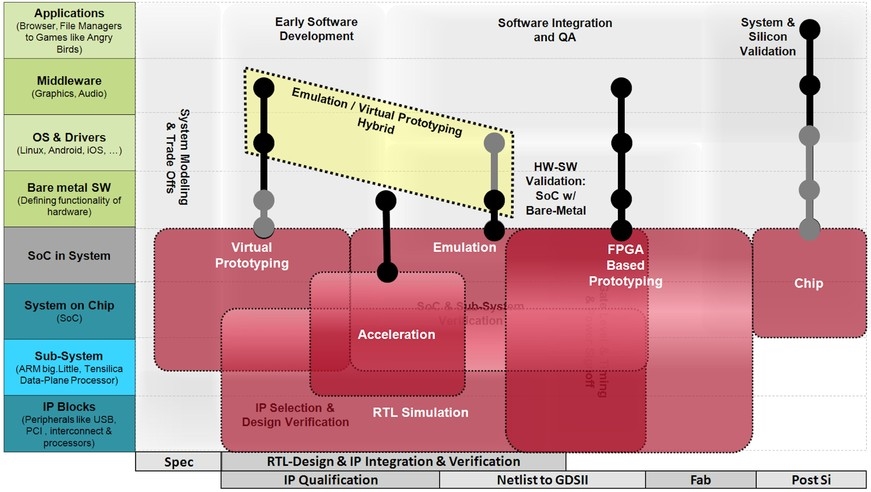

The System era is where we are today. There are multiple layers of
verification requirements that now must be met.

We now have a whole suite of technologies to handle these requirements:
virtual prototyping, formal, simulation, emulation, and FPGA prototyping.

With virtual prototyping you can expose emergent behavior earlier in the
design process. Wally used an automotive example, where hundreds of
millions of lines of embedded software, plus complex hardware and
sensors, and a high cost of failure drive the need for multi-domain
analysis. Function, performance, safety/security, and connectivity are
the major areas. Wally also identified OS- and application-level power
management as a growing trend, and said that architectural trade-offs at
the transaction level need to be made using system-level models.

Formal was another focus of Wally's. He identified formal technology
adoption, and specifically automatic formal applications, as the
fastest-growing category in EDA. He made special mention of clock-domain
crossing (CDC) as an important application, saying that it is a full-chip
problem and that the formal solution handles full-chip as well. He also
noted the trend that the larger the chip, the more likely it is to use
formal property checking. Other applications he identified include FSM
design issues, unreachable code, X-propagation, and IP connectivity
errors. Real Intent's suite of sign-off applications covers CDC and these
other types. One category we think should be added is SDC constraints,
where formal methods can verify timing exceptions.
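A toy structural check makes the CDC discussion concrete. It flags any flop whose data input comes from a different clock domain without passing through a two-flop synchronizer. The netlist representation, the signal names, and the two-flop rule are all simplifying assumptions for illustration. Production CDC tools check far more, such as reconvergence and multi-bit crossings.

```python
from typing import Dict, List, Optional, Tuple

# Toy netlist: flop name -> (clock domain, flop driving its D input or None
# for a primary input). Combinational logic between flops is abstracted away.
Netlist = Dict[str, Tuple[str, Optional[str]]]

def loads(netlist: Netlist, src: str) -> List[str]:
    """Flops whose D input is driven by `src`."""
    return [name for name, (_, d) in netlist.items() if d == src]

def unsynchronized_crossings(netlist: Netlist) -> List[Tuple[str, str]]:
    """Return (source, destination) pairs that cross clock domains without
    a two-flop synchronizer in the destination domain."""
    violations = []
    for dst, (dst_clk, src) in netlist.items():
        if src is None or netlist[src][0] == dst_clk:
            continue  # primary input or same-domain path: not a crossing
        # Treat dst as synchronizer stage 1: it must drive exactly one flop,
        # and that flop (stage 2) must be in the same destination domain.
        fanout = loads(netlist, dst)
        if not (len(fanout) == 1 and netlist[fanout[0]][0] == dst_clk):
            violations.append((src, dst))
    return violations

netlist: Netlist = {
    "tx_req":   ("clk_a", None),
    "req_meta": ("clk_b", "tx_req"),    # synchronizer stage 1
    "req_sync": ("clk_b", "req_meta"),  # synchronizer stage 2
    "tx_data":  ("clk_a", None),
    "rx_data":  ("clk_b", "tx_data"),   # crossing captured once...
    "rx_use1":  ("clk_b", "rx_data"),   # ...then fanned out directly
    "rx_use2":  ("clk_b", "rx_data"),
}
print(unsynchronized_crossings(netlist))  # [('tx_data', 'rx_data')]
```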

Simulation has growing requirements, including data mining and applying
analytics to waveform databases.

Wally reviewed the various ages of emulation: from the ICE age in the
'80s, to the Acceleration age of the '90s, to the Virtualization age of
the mid-2000s, when emulation moved from the lab to the data center. Not
surprisingly, the headcount of SW engineers doing embedded development
has surged past that of HW verification engineers. Wally sees emulation
entering the Applications age, with power, deterministic ICE, and DFT
being the leading use categories.

FPGA prototyping is still used for regression testing and application
software verification. However, according to Mentor's latest verification
study, emulation usage grows as design size increases, while prototyping
usage decreases for designs of more than 80 million gates.

WHAT'S NEXT?

The question is: what's next for verification? Wally touched on what is
coming to your desktop in the very near future. A common verification
environment that is completely abstracted from the underlying engines is
still needed. Bill Hodges of Intel PPA Austin was quoted as saying:

"Users should not be able to tell if their job was executed on a
simulator, emulator, or a prototype."
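One way to picture an engine-abstracted environment is a test written against a narrow interface, with each engine behind its own adapter. The sketch below is a hypothetical Python illustration of that idea only. `Engine`, `SimulatorEngine`, `EmulatorEngine`, and the register address are invented names, not any vendor's API, and the "engines" are stand-in dictionaries.

```python
from typing import Dict, List, Protocol

class Engine(Protocol):
    """The only view of the design a test gets; the engine behind it is hidden."""
    def write(self, addr: int, value: int) -> None: ...
    def read(self, addr: int) -> int: ...
    def run(self, cycles: int) -> None: ...

class SimulatorEngine:
    """Stand-in for an RTL simulator adapter (hypothetical; a dict models registers)."""
    def __init__(self) -> None:
        self.regs: Dict[int, int] = {}
        self.cycles = 0

    def write(self, addr: int, value: int) -> None:
        self.regs[addr] = value

    def read(self, addr: int) -> int:
        return self.regs.get(addr, 0)

    def run(self, cycles: int) -> None:
        self.cycles += cycles

class EmulatorEngine(SimulatorEngine):
    """Stand-in for an emulator adapter. It reuses the toy register model here;
    a real adapter would forward these calls to emulation hardware."""

def loopback_test(dut: Engine) -> bool:
    """Engine-independent test: write a pattern, advance time, read it back."""
    pattern = 0xA5A5
    dut.write(0x1000, pattern)
    dut.run(100)
    return dut.read(0x1000) == pattern

results: List[bool] = [loopback_test(engine) for engine in (SimulatorEngine(), EmulatorEngine())]
print(results)  # [True, True]
```

The test function never names an engine, so swapping the adapter is the only change needed to move it between platforms.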

Wally next presented portable stimulus, a topic that received considerable
attention at DVCon in general and is the subject of an evolving Accellera
standard.

The goal of portable stimulus is to encompass all of the various test types,
including directed tests, constrained random tests, embedded C test
programs, system software tests, and graph-based tests, to drive all the
various vector-based analysis engines. A single specification will be able
to generate all the different test types in a tool-independent way.
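The idea of one specification driving many test types can be sketched in a few lines. Describe the legal scenarios once as a graph of actions, enumerate the paths through it, and then render each path for a particular target. This is a hypothetical Python illustration, not Accellera Portable Stimulus syntax. The action names and the two render targets (an embedded C snippet and a testbench transaction list) are assumptions.

```python
from typing import Dict, List

# Scenario model: each action lists the actions that may legally follow it.
# Action names are hypothetical; this is not Accellera PSS syntax.
GRAPH: Dict[str, List[str]] = {
    "start":       ["dma_copy", "crypto_hash"],
    "dma_copy":    ["check_mem"],
    "crypto_hash": ["check_mem", "irq_wait"],
    "irq_wait":    ["check_mem"],
    "check_mem":   [],  # terminal action
}

def all_paths(graph: Dict[str, List[str]], node: str = "start") -> List[List[str]]:
    """Enumerate every path from `node` to a terminal action (graph must be acyclic)."""
    if not graph[node]:
        return [[node]]
    return [[node] + rest for nxt in graph[node] for rest in all_paths(graph, nxt)]

def render_c(path: List[str]) -> str:
    """Target 1: the body of a bare-metal C test, one function call per action."""
    return "\n".join(f"    {action}();" for action in path if action != "start")

def render_transactions(path: List[str]) -> List[dict]:
    """Target 2: the same scenario as an ordered transaction list for a testbench."""
    actions = [a for a in path if a != "start"]
    return [{"op": action, "seq": i} for i, action in enumerate(actions)]

for path in all_paths(GRAPH):
    print("void test(void) {\n" + render_c(path) + "\n}")
    print(render_transactions(path))
```

Adding a new target means writing one more renderer. The scenario graph itself does not change, which is the portability argument in miniature.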

BEYOND FUNCTIONAL VERIFICATION

It's a brave new world out there. Wally introduced his first topic
beyond functional verification: security.

[Level of Security concerns for Chip Designers graphic]

Soon the Internet-of-Things will expand the need for security to almost
everything we do. Wally highlighted Beckstrom's Laws of cybersecurity:

1. Everything that is connected to the internet can be hacked.
2. Everything is being connected to the internet.
3. Everything else flows from these first two laws.

We see corporate breaches reported regularly in the press. How can we
mitigate these threats? Chip designers will need to be concerned with
malicious logic inside chips, counterfeit chips, and 'side-channel'
attacks. Verification's traditional focus has been verifying that a chip
does what it is supposed to do. The new challenge is to verify that a
chip does nothing it is not supposed to do. Wally thinks EDA will be at
the core of this solution, and that the industry will be able to sell
more software as a result.

The other area Wally addressed was safety-critical design and verification,
which means ensuring that no harm comes to systems, their operators, or
bystanders. Certification standards such as ISO 26262 (automotive),
IEC 60601 (medical), and DO-254 (aerospace) have to be met.

In general, the certification process validates that you do what you say
and say what you do. Auditors check that each design requirement is
verified and identify the specific test that verifies it. All design
features are derived from design specifications, which in turn are derived
from the system specifications.
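The traceability an auditor looks for can be expressed as a simple check. Every requirement must map to at least one test, and every requirement a test claims should actually exist. The requirement IDs and test names below are hypothetical. Real ISO 26262 or DO-254 flows typically rely on dedicated requirements-management tools.

```python
from typing import Dict, List, Set, Tuple

def trace_gaps(requirements: Set[str],
               tests: Dict[str, List[str]]) -> Tuple[Set[str], Set[str]]:
    """Return (unverified requirements, dangling references).

    `tests` maps each test name to the requirement IDs it claims to verify.
    """
    claimed = {req for reqs in tests.values() for req in reqs}
    unverified = requirements - claimed  # requirements no test covers
    dangling = claimed - requirements    # tests pointing at unknown IDs
    return unverified, dangling

requirements = {"REQ-001", "REQ-002", "REQ-003"}
tests = {
    "test_reset_values": ["REQ-001"],
    "test_watchdog_timeout": ["REQ-002", "REQ-004"],  # REQ-004 does not exist
}
print(trace_gaps(requirements, tests))  # ({'REQ-003'}, {'REQ-004'})
```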

He pointed out that fault injection is one important area of system
verification for safety-critical design, and that formal-based methods
can exhaustively verify the safe behavior of designs.
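A toy fault-injection campaign shows the idea. It flips every bit of a parity-protected register, one at a time, and confirms that the safety mechanism flags each fault. Because the fault list is small, the check here is exhaustive, which is what formal tools aim to provide at real design scale. The 8-bit width and the single parity bit are assumptions chosen for illustration.

```python
from typing import List, Tuple

WIDTH = 8  # assumed data width of the protected register

def parity(word: int) -> int:
    """Parity bit: 1 if `word` contains an odd number of 1s."""
    return bin(word).count("1") & 1

def protect(data: int) -> Tuple[int, int]:
    """Store data together with its parity bit (the safety mechanism)."""
    return data, parity(data)

def detected(stored: Tuple[int, int]) -> bool:
    """Safety check: recompute parity and compare it with the stored bit."""
    data, p = stored
    return parity(data) != p

def single_bit_campaign(data: int) -> List[int]:
    """Flip each data bit, then the parity bit, one at a time.
    Returns the fault sites the safety check failed to flag."""
    missed = []
    for site in range(WIDTH + 1):  # site == WIDTH targets the parity bit
        d, p = protect(data)
        if site < WIDTH:
            d ^= 1 << site
        else:
            p ^= 1
        if not detected((d, p)):
            missed.append(site)
    return missed

# Exhaustive over every 8-bit value and every single-bit fault site.
assert all(single_bit_campaign(v) == [] for v in range(2 ** WIDTH))

# A double-bit fault escapes: the two flips cancel out in the parity.
d, p = protect(0b1010_0000)
print(detected((d ^ 0b11, p)))  # False
```

The last line shows the kind of escape a fault campaign is meant to expose. Parity catches every single-bit fault but misses double-bit ones.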

WALLY'S SUMMARY

Wally always gives an informative and engaging keynote. This year there
was a little less humor than in years past, but that may be due to the
historical perspective he was trying to share.

Key takeaways for me are the following:

- Static verification is the fastest-growing category in EDA. Emulation
  closely follows as number 3. (#2 was not mentioned.)

- New kinds of focused verification will continue to be adopted, such
  as reset-domain crossing and constraints verification. (At Real
  Intent, we also see new requirements for focused static solutions at
  the gate level.)

- With the verification "what" being separated from the verification
  "how," engineers can focus on getting answers quickly. The big driver
  for this is that giga-gate designs produce a ton of data that needs
  to be processed.

Prakash Note: Real Intent's iDebug data-driven debugging environment,
which is independent of the verification engine, offers productivity
gains similar to the ones Wally alluded to in his keynote.

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

LUNCH PANEL ON SOFTWARE DRIVEN VERIFICATION WITH PORTABLE STIMULUS


Panelists:

- Frank Schirrmeister, Cadence

- Alex Starr, Advanced Micro Devices

- Karthik Gururaj, Vayavya Labs

- Sharon Rosenberg, Cadence

- Tom Fitzpatrick, Mentor Graphics

While all the panelists answered questions raised by the moderator, Frank
Schirrmeister of Cadence, I have focused on what I thought were the
industry perspectives of end-user companies such as AMD. Unlike the
other panelists' companies, these were *not* involved in the development
of the standard. Frank opened with the following:

Q. What are the drivers for Portable Software Stimulus at your company?

Karthik Gururaj, Vayavya Labs:

"We are trying to see what we can do in the no-man's land between hardware
and software, to capture the interactions between HW and SW in a form
that can be used in different implementations."

Alex Starr, AMD:

"AMD is particularly interested for two reasons. We are all interested
in 'Shift-left.' How can we do things earlier, and do them with a high
level of quality? First is coverage, and what I mean by this is not the
functional coverage that we do with simulation. It's more about
system-level coverage of testing. Coming from the emulation world and the
virtual platforms world, one of the things we do is run a lot of
system-level workloads, and we try to test those earlier."

"One of the challenges for that is the schedule. What I mean by that
is we want to test production code, but that comes at the tail-end of
the project, when it's almost too late. So you have production code
testing on the 'right-hand' side; on the 'left' you have the low-level
UVM block code testing; and at the SoC level you are doing directed
tests as much as you can. Generally that creates a gap in the middle.
How do we fill that? I think that is the role of portable stimulus
and software-driven verification."

"The question I want to answer is how can we get close to the 'metal' and
have software that tests out all the interactions much earlier in the
schedule?"

Q. Hardware and Software teams: who is more fun to work with?

Alex Starr, AMD:

"I am not sure what your definition of 'fun' is. When we do this
pre-silicon work, we get a lot of software engineers and diagnostic
guys involved. They do live in a different world than verification
engineers. They are fun to work with but very demanding. They want
more speed and performance. That's why you are doing emulation, virtual
platforms, and hybrid emulation to get the software ready early."

"The number of firmware engines and processes going on in a large
microprocessor-based SoC is a lot. Just getting out of reset with
secure-boot aspects, for example, requires a lot of firmware. You
actually have to have the software there to get out of reset now. It's
not like a few years ago, when you would 'pull' a pin and then you were
good."

"Inherently you are verifying the software, and inherently you
are engaging with the firmware and close-to-the-metal software engineers
right at the beginning, at the system level. That's a new user base
that has grown and keeps on getting stronger and stronger."

"We also see software engineers from the driver side wanting to start
doing things earlier. That creates a very interesting kind of
language and alignment gap between what software engineers want to
see and what their goals are, and what the hardware guys have already
verified, and how you get those two to meet. It is definitely a
challenge."

Q. What are the sweet spots of the verification engines that you are
using?

Alex Starr, AMD:

"We start all of our work in virtual platforms and do all the firmware
bring-up in that environment. And that's very fast, so you screen your
software for issues. There's some level of abstraction in the virtual
platform, so you don't catch everything. So you start to move to
emulation when the design is ready."

"Typically we start with hybrid emulation before the whole SoC is
ready. So we'll jump-start that and maybe get some extra performance.
And then we will go to co-emulation for the SoC, to test out all the
clock-based cycle accurate interactions for the HW and SW."

Q. What about the portability feature, what does it look like?

Alex Starr, AMD:

"I see portability on two axes. First, the portability of platforms and
engines on which the user executes the model and the stimulus. For
example, you may have a high-level software stimulus you could run on
a virtual platform, as a full system with disks attached. You could run
that equally on an emulation platform, or even a simulation platform
if you get it fast enough, where you are modeling external peripherals
and so on. You maintain the same system-level viewpoint and run the
same stimulus. The stimulus has no idea what it is running on. It works
across those."

"The other axis is how you take that stimulus and drive it down to the
sub-block and IP level. Why do you want to do that? Say you are
developing a new CPU core or IP block, and you want to test that in the
right context, much earlier in the design schedule, while developing IP,
even before an SoC exists. How do you take that real system-level
stimulus from a portable stimulus description and drive that at the IP
level? That is the other axis."

Karthik Gururaj, Vayavya Labs:

"Portable Stimulus allows the user to model essential interactions that
exist in the final device, in software. This is important because all
these verification technologies have their own sweet spots and will
co-exist. There is a need for representing information that
will be shared across the solutions in a common way, across the HW/SW
layer, which is the 'last mile' connectivity between software and
hardware. The stimulus must move from IP to block to system level, and
must be captured in a portable way."

Q. How do you introduce the Portable Stimulus approach to a team?

Alex Starr, AMD:

"That is a tough one. Definitely teams are siloed. Verification engineers
want to be verification engineers and use simulation and UVM all the time.
That's fine. But how do you instigate change where you want the software
work to be done early? Maybe you want to do BIOS and operating system
boot as a verification engineer, not as a post-silicon validation person
on an emulator. We are seeing more and more of that."

"Is the verification team comfortable being able to completely verify
the design? It's pushing them out of their comfort zone to go into those
areas. We are seeing a transition to doing software workloads and
system-level workloads earlier. But at the end of the day you need some
'forcing function' to make these teams work. We generally do that through
executive hammers, who drive it down from the top and say we've really
got to do this to get time-to-market."

Q. As we go to software-driven verification, what is the coverage
metric? Do we just run the final system software, and if that works,
ship the device with a software stack on top of it?

Alex Starr, AMD:

"There are definitely new forms of coverage coming in. Even today if you
ask a platform validation guy what coverage is, you get a completely
different view from what a verification engineer has. It's that language
barrier again. When a system validation engineer talks about coverage, they
ask: did they cover all the features of the chip that were designed, and
did they hit the chip with all the tens of thousands of tests they use in
the validation lab?"

"So definitely, there is some new coverage coming in. We are doing firmware
coverage, which is line coverage on the actual firmware, the C code that is
in there. That's together with the traditional functional coverage that we
do in our RTL simulation."

"There are different dimensions of coverage that need to come in, and the
verification community needs to be looking at them more to get to
system-level workloads."

"An example to illustrate this: we are bringing up a design and the
interrupt rate coming in from the outside world is very high. From the
RTL point of view you have all the coverage points and the design is good,
but at the system level, there is something not quite right. How do you
know that the interrupts are coming too fast? No functional coverage will
tell you that. You really need to up-level it to find out what is going on
in the system. Did this DMA happen when this interrupt came in? So you
have to think about these system-level interactions. These are the kinds
of things where you find bugs in real designs, and they're what emulation
is used for."

"We have been doing emulation, as a community, for a long time, but we
really don't know the coverage at the system level. We know we need to do
it, and we all do it a lot. But how do you measure that and get tangible
results? I think it's a difficult problem. I think the software-driven
approach is going to lend a solution to that. For example, a graph-based
solution will get the coverage of the graph, of the stimulus itself, and
give you another metric of what you have actually tested."
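Alex's interrupt example suggests a system-level check that sits above RTL functional coverage. The sketch scans an event trace for interrupts that arrive closer together than a chosen minimum gap, and it records which interrupts landed while a DMA transfer was in flight. The trace format, the event names, and the 400 ns threshold are assumptions made for illustration.

```python
from typing import List, NamedTuple, Optional, Tuple

class Event(NamedTuple):
    time_ns: int
    kind: str  # "irq", "dma_start", or "dma_end"

def check_trace(events: List[Event], min_irq_gap_ns: int) -> Tuple[List[int], List[int]]:
    """Return (times of interrupts that came too fast, times of interrupts during DMA)."""
    too_fast: List[int] = []
    during_dma: List[int] = []
    last_irq: Optional[int] = None
    dma_active = False
    for ev in sorted(events):
        if ev.kind == "dma_start":
            dma_active = True
        elif ev.kind == "dma_end":
            dma_active = False
        elif ev.kind == "irq":
            if last_irq is not None and ev.time_ns - last_irq < min_irq_gap_ns:
                too_fast.append(ev.time_ns)
            if dma_active:
                during_dma.append(ev.time_ns)
            last_irq = ev.time_ns
    return too_fast, during_dma

trace = [
    Event(0, "irq"), Event(500, "dma_start"), Event(900, "irq"),
    Event(1000, "irq"), Event(1500, "dma_end"), Event(3000, "irq"),
]
print(check_trace(trace, min_irq_gap_ns=400))  # ([1000], [900, 1000])
```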

Prakash Note: The panel closed with Frank Schirrmeister of Cadence asking
the panelists additional questions from his "27-page list."
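Alex's remark that a graph-based solution measures coverage of the stimulus itself can be sketched simply. The metric is the fraction of edges (legal action-to-action transitions) in the scenario graph that the executed tests actually traversed. The graph and the executed paths below are hypothetical. Commercial graph-based tools may report richer metrics.

```python
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]

def edges_of(graph: Dict[str, List[str]]) -> Set[Edge]:
    """All legal transitions in the scenario graph."""
    return {(src, dst) for src, dsts in graph.items() for dst in dsts}

def edge_coverage(graph: Dict[str, List[str]],
                  executed: List[List[str]]) -> Tuple[float, Set[Edge]]:
    """Return (fraction of graph edges exercised, set of edges never exercised)."""
    all_edges = edges_of(graph)
    if not all_edges:
        return 1.0, set()
    hit = {(a, b) for path in executed for a, b in zip(path, path[1:])}
    hit &= all_edges  # ignore transitions that are not in the model
    return len(hit) / len(all_edges), all_edges - hit

graph = {
    "start": ["dma_copy", "crypto_hash"],
    "dma_copy": ["check_mem"],
    "crypto_hash": ["check_mem", "irq_wait"],
    "irq_wait": ["check_mem"],
    "check_mem": [],
}
executed = [
    ["start", "dma_copy", "check_mem"],
    ["start", "crypto_hash", "check_mem"],
]
ratio, missing = edge_coverage(graph, executed)
print(f"edge coverage: {ratio:.0%}, missing: {sorted(missing)}")
```

The unhit edges point directly at the untested scenario (the interrupt-wait path), which is the "another metric of what you have actually tested" Alex described.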

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

PANEL: WILL FORMAL AND EMULATION REPLACE SW SIMULATION TOOLS?

Real Intent sponsored a panel on whether SW simulation tools are going to
be replaced by formal verification and emulation.

Panelists:

- Jim Hogan, EDA investor

- Ashish Darbari, Imagination Technologies

- Richard Ho, Google

- Lauro Rizzatti, Consultant

- Brian Hunter, Cavium

- Steven Holloway, Dialog Semiconductor

- Pranav Ashar, Real Intent

The panelists stated their views. In general they liked simulation and
did not want to do away with it. When the panel started to debate the
relative importance of verification solutions, the discussion became
controversial, with divergent opinions emerging.

Q. Will formal and emulation replace SW simulation tools?

Ashish Darbari, Imagination:

"I don't think it is a good idea to displace simulation. At Imagination
what we have seen is that formal is used early in the verification cycle,
followed by constrained random and directed simulation, and of course
emulation needs to be done full-chip. I think if you do these three things
at the sensible time in your project you can't go wrong. I think where we
hear that verification is a nightmare, it is a sign that teams are doing
things in the wrong order. They might do formal late in the design cycle.
And emulation might be done too early, when it is not very productive."

Richard Ho, Google:

"For me what is important is the time to find the bug, fix the RTL, do the
debugging and get it back into the cycle. And the number of times you do
this is the thing that determines your overall schedule. There are going to
be some number of bugs in your design, say one thousand. How fast can you
get to that one thousand? How deep can you get into that thousand is what
matters. So having tools that can get at those really hard bugs, like formal
verification and software-driven emulation, is very important. But in
the middle of your cycle, when you have got a bunch of relatively easy bugs
to find, it is how many iterations of this debug loop you can get in."

"The metric my team likes to use is how many iterations can I do before
lunch. You don't want to skip lunch to keep doing your debug cycle. And
sadly, right now, nothing beats simulation. But that doesn't mean it's the
only game in town. I think at the different phases of design maturity
different things matter. So I agree with Ashish: early on, static just kills
and finds bugs very fast and is easy to use. Later on, when the design is
more mature, nothing beats emulation. Having real software driving the RTL
finds things you wouldn't find any other way."

"But there is this gap that I call the simulation gap. We could get formal
to cover more of this gap, or we could get the compile time of emulation to
be much faster so you get more iterations in before lunch. So I think you
could close this gap, but right now that gap is large and I don't see it
closing anytime soon."
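Richard's "iterations before lunch" metric is simple arithmetic: the time available divided by the turnaround of one compile-run-debug loop. The turnaround figures below are invented placeholders, not measurements from the panel. They only show how strongly compile time drives the comparison.

```python
from typing import Dict, Tuple

def iterations(window_min: float, compile_min: float, run_min: float, debug_min: float) -> int:
    """Number of complete compile-run-debug loops that fit in the window."""
    loop_min = compile_min + run_min + debug_min
    return int(window_min // loop_min)

MORNING_MIN = 4 * 60  # assumed working window before lunch, in minutes

# Placeholder turnaround per loop in minutes: (compile, run, debug).
# These are invented for illustration, not figures from the panel.
ENGINES: Dict[str, Tuple[float, float, float]] = {
    "simulation": (5, 10, 15),
    "emulation": (90, 1, 15),
    "emulation, faster compile": (10, 1, 15),
}

for name, (c, r, d) in ENGINES.items():
    print(f"{name:<26} {iterations(MORNING_MIN, c, r, d)} loops before lunch")
```

With these placeholder numbers, cutting emulation compile time from 90 to 10 minutes raises it from 2 to 9 loops, slightly ahead of simulation's 8. That is the kind of gap-closing Richard describes.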

Lauro Rizzatti, Consultant:

"I have the reputation of being totally in the camp of emulation. This is
true. If HDL simulation were able to keep up with the growing
complexity of the hardware and do something with the embedded software, it
would be the right choice. Emulation would not have a chance to succeed.
Simulation is the best tool in terms of usability, flexibility, debugging,
design iteration time, compilation and what-if analysis. Simulation supports
timing; emulation doesn't. Simulation supports multiple logic states;
emulation doesn't. There are many reasons to go with simulation."

"As my colleagues have said, complexity is killing simulation. There is
good news in the emulation camp, which I have followed for 22 years. Today
emulation does most of what simulation does, with the exception of timing
and multi-value logic. It works 4 to 6 orders of magnitude faster. I hear
from customers in the CPU business that with a design size of 100 million
gates they are down to one cycle per second in simulation. An emulator
would run at one megahertz, maybe more."
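Lauro's numbers translate directly into wall-clock time. The sketch takes his quoted rates: about one cycle per second in simulation for a 100-million-gate CPU design, and about 1 MHz on an emulator. It applies them to a hypothetical workload of one billion cycles, a figure chosen only for illustration.

```python
import math

SIM_HZ = 1.0    # ~1 cycle/s in simulation, as quoted for a 100M-gate design
EMU_HZ = 1.0e6  # ~1 MHz on an emulator, as quoted
CYCLES = 1e9    # hypothetical workload length

def human(seconds: float) -> str:
    """Format a duration in the largest sensible unit."""
    for unit, size in (("years", 365 * 24 * 3600), ("days", 24 * 3600),
                       ("hours", 3600), ("minutes", 60)):
        if seconds >= size:
            return f"{seconds / size:.1f} {unit}"
    return f"{seconds:.1f} seconds"

print("speedup: 10^%d" % round(math.log10(EMU_HZ / SIM_HZ)))
print("simulation:", human(CYCLES / SIM_HZ))
print("emulation: ", human(CYCLES / EMU_HZ))
```

At these rates the run takes about 32 years in simulation and under 17 minutes on the emulator. That is a speedup of six orders of magnitude, the top of Lauro's 4-to-6 range.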

Brian Hunter, Cavium:

"Everyone in this room is facing different challenges. We are all designing
completely different chips. If you are building a small widget and you can
go straight to emulation, that is fantastic for you. If you slap a bunch of
IP down and think that it will work, maybe you can go straight to emulation,
and use some formal tools to help ensure the connectivity is correct. Maybe
you could skip simulation in those regards."

"At Cavium we build really large SoCs: a lot of custom logic, a lot
of home-grown models, and we don't use a lot of IP. So we find at least
99% of our bugs with simulation. If you wait until the whole thing is
emulation-ready and you try to find some of those bugs, you are crazy.
You would never tape out."

"So simulation is king. Static and other tools like that are going to find
your simple errors. Maybe not simple to you, but generally it's
straightforward for those tools to do that. Simulation is the cheapest way
to find bugs right now."

Steve Holloway, Dialog Semiconductor:

"I am going to be uncontroversial as well. Simulation forms the mainstay of
our functional verification activity these days. People have already spoken
at this conference about how much time we spend in debug, and to me the
simulator is the best debug platform we have. What you gain in speed and
capacity on the emulator, you lose in controllability and observability of
the design. Companies like mine deal with mixed-signal designs and
mixed-signal simulation, which doesn't fit well with the emulator's view of
the world. On the static side, we are very successfully using static
methods to analyze the design, do some automatic formal analysis, and do
some point checks that the tools are very good at. But one of the
limitations we see with static these days is that the number of experts in
formal is actually limiting us. So simulation is going to be our main
activity for many years to come."

Pranav Ashar, Real Intent:

"I'll speak a little bit on the formal side of things. The panel topic is
a little tongue-in-cheek. Simulation debug is not going to go away. It's
the easiest verification step to start. You just compile your design, apply
stimuli, and you don't need to know anything about the design. You just
start doing verification. The trend is that simulation is being used less,
and static verification is being used more. And this trend is secular, not
just a trend for only certain kinds of designs."

"Now Richard talked about static being used early and simulation used late.
There is another way to look at the problem. Static verification is useful
for certain kinds of problems, and in those applications it really shines.
We have a trend of signing off chips based on a partitioning of the
verification concern that is really taking hold. And there are certain
kinds of problems where it really works well. For example, the verification
of asynchronous clock domain crossings on a chip is a very narrow problem
and its scope is limited."

"I see formal as a more systematic simulation, and emulation as a faster
simulation. If this trend continues, I see the scope that simulation is
going to be used for continuing to diminish. I don't know when
it will plateau, but simulation will be used less and less over time."

So the panel agreed that simulation is not going away, which was not a
surprise, but there was back-and-forth discussion of the rights and
wrongs of verification.

Q. You are going to have this tiered approach to verification. What about
the economic trade-offs?

Ashish Darbari, Imagination:

"I am quite surprised at what Richard said about a better ROI with
simulation. Someone else said 'simulation is the king.' At Imagination,
I have been driving a lot of formal verification work, including methodology
development and training. What we are doing is in agreement with what
Pranav was saying. We are actually doing less simulation at the block
level and on smaller systems, and we would actually lean more on formal. We
are finding bugs at a fraction of the cost in terms of time."

"We looked at bugs we had missed early on, when formal was not used
on a block, but that were uncovered in emulation after three weeks of debug.
It came into my mind that using one second of wall-clock time we can
reproduce the bug. If simulation can beat this, I would love it. If
simulation can find the corner-case bugs that we find with formal, with the
ROI we put in, I am your slave."

"The main driver why formal hasn't been successfully used is the methodology.
And I agree with Pranav and Steve that it is seen as an expert-level activity.
I think the EDA vendors and service companies in the Valley have made a
lot of effort in actually making things simpler. But look how long it has
taken constrained random to come this far and how long directed testing has
been used. We are expecting too many things to happen in a short amount of
time."

"Everything is methodology related. As Richard was saying, simulation can
find a lot of bugs when it is written by people who know what they
are doing. Similarly, formal is written by those who know what they are
doing. If you have better specifications with a strict goal in mind,
there is no reason why formal won't be able to get it. I mean, why are
you going to use simulation and emulation to find your X bugs, your
CDC issues, protocol checking on the interfaces, and so on? You could
do this in an hour with formal. It's a no-brainer really, and I feel that
these things are not expert-related. You don't have to have someone with a
PhD in formal to do all of this. There are lots of people who do
creative-type stuff without an expert tag on them."

Jim Hogan:

"If you go to Mars you have to take along a roll of duct tape... Ashish,
what did you get your PhD in?"

Ashish Darbari, Imagination:

"Sorry, it was in formal verification." (audience laughter and chuckles)
"I do apologize for that."

Richard Ho, Google:

"I really don't like the phrase 'formal expert' and I think we should stop
using it. You don't need to be an expert to use the formal tools of today.
And I want to clarify one thing Ashish was pinging me on: 'ROI.' I
said earlier there was a large number of bugs in a project, in a design,
and I 100% believe you have to use the right tool to find each of these
different bugs. Some are better addressed with formal and some are better
addressed with emulation, and I believe there is a large chunk of bugs
that are better addressed by simulation."

"I want to share a story of a simulation engineer I know who was asked to do
some verification work with a formal tool without any training. This person
just picked up the documentation, got a few words of advice from an
existing user, and turned out some really amazing verification from it. The
key thing was being a good engineer and having a verification mindset. I
think that is what it takes. It doesn't take someone who knows anything
about the algorithms underneath these tools. Why do you care how it works?
What you do need to know is how to write properties, and how to look at a
design specification and think, 'what do I really want to check in this
design?'"

"Then you have a toolkit. You have your formal tools, you have your
super-lint static checking tools, simulation, and if all else fails, get it
working on an emulator. But I think it's the verification mindset that
matters."
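Richard argues that the skill lies in deciding what to check, not in the algorithms. A toy explicit-state model check illustrates this. The "property" is one line (never grant both requesters at once), and a breadth-first search over every reachable state and input combination does the rest. The two-requester round-robin arbiter is a hypothetical design written for illustration. Real formal tools use far more scalable engines than this brute-force enumeration.

```python
from collections import deque
from itertools import product
from typing import Optional, Set, Tuple

State = Tuple[int, int]   # (priority pointer, grant vector as a 2-bit int)
Inputs = Tuple[int, int]  # (req0, req1)

def next_state(state: State, inputs: Inputs) -> State:
    """Round-robin arbiter for two requesters (hypothetical design)."""
    prio, _ = state
    req0, req1 = inputs
    if req0 and req1:
        grant = 0b01 if prio == 0 else 0b10
    elif req0:
        grant = 0b01
    elif req1:
        grant = 0b10
    else:
        grant = 0b00
    # Rotate priority away from whoever was just granted.
    if grant == 0b01:
        prio = 1
    elif grant == 0b10:
        prio = 0
    return prio, grant

def mutex(state: State) -> bool:
    """Property: never grant both requesters in the same cycle."""
    return state[1] != 0b11

def check(reset: State) -> Optional[State]:
    """Breadth-first search of all reachable states; return a violating state if any."""
    seen: Set[State] = {reset}
    frontier = deque([reset])
    while frontier:
        s = frontier.popleft()
        if not mutex(s):
            return s
        for inputs in product((0, 1), repeat=2):
            t = next_state(s, inputs)
            if t not in seen:
                seen.add(t)
                frontier.append(t)
    return None

print(check((0, 0b00)))  # None: the property holds in every reachable state
```

Changing the `req0 and req1` branch to return `0b11`, as a deliberate hypothetical bug, makes `check` return the offending state `(0, 3)` instead of `None`.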

Brian Hunter, Cavium:

"Wait a minute! Where did you get this 'design specification' from? I want

to work at that company." (general laughter)

"One of the problems we have seen is the time it takes to write properties

for some design we call X [not X-bugs]. Now if the formal tool even

finishes and doesn't blow up, then when you are done verifying your design

X, the design engineer is saying let's do X-prime (the next version).

Or let's do X+Y or something completely different."

"The process of getting from a napkin to silicon is evolutionary. And

trying to apply formal techniques to things that are constantly changing

is very complicated. On the other hand, if you have a very standard

mathematical function or standard interface then formal is great, since

it's not going to change over time. Unfortunately, I don't get to work at

those companies." (Brian smiles)

Pranav Ashar, Real Intent:

"At Real Intent, we don't see ourselves as a formal verification company.

We see ourselves as a company that provides solutions for certain kinds of

problems - for example, CDC or early functional verification or exception

verification. I think that speaks to how the formal techniques have matured.

It is quite amazing the complexity that formal engines can handle today.

And it's not because processor speeds have increased,

which they haven't. The complexity formal can handle today is orders of

magnitude greater than it was even 5 or 10 years ago."

"I attended a good talk by Harry Foster this morning. It was on collecting

patterns of verification: an intersection of social media and verification,

by crowd-sourcing patterns. Much of the verification we do actually targets

some established failure pattern in the chips we build today. CDC is one

failure mode we are addressing. Exceptions and untimed paths in a

gate-level netlist and so on are established failure patterns, and we go

after those."

"And because of the narrow scope, the specification is implicit, the

analysis is easier and the debug is very focused."

Q. Where do you put your budget dollars?

Steven Holloway, Dialog Semiconductor:

"Simulation. And for some time to come. But we make investments and train

people in the formal domain, since it does work very well. You get one formal

expert to crack a number of different problems. You almost have to create

an engine-agnostic kind of portable checking - some way of expressing these

things and making the selection later on."

Jim Hogan: "...A portable stimulus with an optimal engine selection,

something like that.... Lauro?"

Lauro Rizzatti:

"Before I get into the economic trade-offs, there was a comment earlier

about an engineer who learned to use formal from a manual. Emulators are

very much enhanced compared to the old days, but a manual will not put you

in the position to use the emulator unless you have thorough training and

support from experienced people."

"Regarding ROI there are two metrics for the cost of the emulator, one

of them very popular: dollars-per-gate. In my analysis over the last 23

years, it has dropped from $5 to less than one cent."

"And the trend will continue in the future. The total cost is still high

because emulators now have capacity in the billions of gates. And the total

dollars will continue to go up, but there is a bright side."

"Because emulators now sit in data centers, their cost can be spread

across multiple users and multiple designs."

"The other metric is the dollars (or cents) per verification cycle. It

sounds very strange, but by that measure it is the cheapest solution on

the market."

Jim Hogan:

"That is a bit non-intuitive."

Brian Hunter, Cavium:

"Our biggest chip last year was over 4 billion transistors. If I do

the math, that comes out to about ten million dollars."

Lauro Rizzatti: "Yeah I believe it."
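Brian's figure appears to follow from Lauro's dollars-per-gate number. Below is a minimal back-of-envelope sketch. It assumes the common rule of thumb of about 4 transistors per NAND2 gate equivalent, which is our assumption and not the panel's. It also takes "less than one cent" as exactly $0.01 per gate. The same snippet converts Lauro's drop from $5 to one cent over 23 years into an implied compound annual price decline.

```python
# Back-of-envelope emulator cost arithmetic from the panel exchange.
# ASSUMPTIONS (not stated on the panel): about 4 transistors per NAND2
# gate equivalent, and a flat $0.01 per emulated gate.
TRANSISTORS_PER_GATE = 4
DOLLARS_PER_GATE = 0.01


def emulator_cost(transistors: int) -> float:
    """Rough emulator cost in dollars for a design of the given size."""
    gates = transistors / TRANSISTORS_PER_GATE
    return gates * DOLLARS_PER_GATE


def annual_decline(start: float, end: float, years: int) -> float:
    """Compound annual rate at which a price fell from `start` to `end`."""
    return 1.0 - (end / start) ** (1.0 / years)


if __name__ == "__main__":
    # Brian's chip: ~4 billion transistors, i.e. ~1 billion gate equivalents.
    print(f"${emulator_cost(4_000_000_000):,.0f}")
    # Lauro's trend: from $5 per gate to about $0.01 per gate in 23 years.
    print(f"{annual_decline(5.0, 0.01, 23):.0%} per year")
```

Under these assumptions the 4-billion-transistor chip comes out at ten million dollars. The per-gate price has fallen by roughly a quarter each year. A different transistors-per-gate ratio scales the first number proportionally.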

Ashish Dubari: "That is just the setup cost. And then you run them. And

then you try to debug the emulation failures. Have fun with that."

Pranav Ashar, Real Intent:

"But the 10 million has to be judged in the context of an existential

crisis that you are facing. Computer processors are not getting any

faster, and you need to get the chip out on budget. You can't afford

failures, so it's okay to go spend that kind of money."

Jim Hogan: "If you got cancer, the MRI scanner cost is insignificant."

Brian Hunter, Cavium:

"Anytime we find a bug with an emulation platform, we always go back and

question what did we do wrong in simulation. We have to have that feedback

since we don't want to find any bugs in emulation, because it is really

expensive. Finding them later makes them more expensive. If we do find

them, they are really hard to debug, which makes it even more expensive."

Ashish Dubari, Imagination:

"What Lauro is not telling you is how much time gets spent

debugging emulation traces. This is a consequence of not doing

something simple up front in the design cycle, such as formal."

"Now I agree with you, Richard. The bring-up time of dynamic simulation is

not in the scoreboard or the checkers; it's in the sequences. You have

got to dream up all these corner-case scenarios so that you can send these

transactions in. With formal you don't have to. Write your constraints.

Ask the questions. For example, are these two threads mutually exclusive?

Can I drain my buffers? Can I see these particular events? A simple cover

property would find you a bug in a 10 million flop design in a matter of

a couple of minutes. Why wouldn't I do that? Why is that expensive?"
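Ashish's point is that a formal tool answers "can this state ever be reached?" directly, without anyone hand-writing stimulus sequences. The toy sketch below illustrates the idea with explicit-state breadth-first search over a hypothetical 4-entry FIFO model. It covers "fill the FIFO, then drain it" and proves that an overflow state is unreachable. Commercial formal engines use symbolic techniques such as SAT rather than enumerating states like this. The model, its depth and all names here are invented for illustration.

```python
from collections import deque
from itertools import product

CAPACITY = 4  # hypothetical FIFO depth


def step(state, push, pop):
    """One clock cycle of a toy FIFO occupancy model.

    state = (count, seen_full), where seen_full records whether the FIFO
    has ever ended a cycle full. Pushes to a full FIFO and pops from an
    empty one are ignored.
    """
    count, seen_full = state
    if push and count < CAPACITY:
        count += 1
    if pop and count > 0:
        count -= 1
    return (count, seen_full or count == CAPACITY)


def cover(goal, init=(0, False)):
    """Breadth-first search for a shortest input trace that reaches `goal`.

    Every (push, pop) combination is tried from every reachable state, so
    no stimulus has to be written by hand. Returns the trace as a list of
    (push, pop) pairs, or None if no reachable state satisfies `goal`;
    because the search is exhaustive, None is a proof of unreachability.
    """
    parent = {init: None}  # state -> (previous state, inputs taken)
    frontier = deque([init])
    while frontier:
        state = frontier.popleft()
        if goal(state):
            trace = []
            while parent[state] is not None:
                state, inputs = parent[state]
                trace.append(inputs)
            return trace[::-1]
        for push, pop in product((False, True), repeat=2):
            nxt = step(state, push, pop)
            if nxt not in parent:
                parent[nxt] = (state, (push, pop))
                frontier.append(nxt)
    return None


if __name__ == "__main__":
    # Cover: can the FIFO fill up and then drain completely?
    print(cover(lambda s: s[0] == 0 and s[1]))
    # Safety: an overflow state should be unreachable (expect None).
    print(cover(lambda s: s[0] > CAPACITY))
```

The cover query returns a shortest witness of four pushes followed by four pops, found without any hand-written sequence. A mutual-exclusion check like the one Ashish mentions would be phrased the same way as the overflow query, as a "bad" state that must be unreachable.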

Lauro Rizzatti:

"There is one class of problems that only emulation will find, and that is

the integration of the embedded software with the underlying hardware.

There are bugs that show up in the software but in reality are

hardware bugs, and the reverse is true as well, where software bugs show

up as hardware problems. The only thing that can cross the line between

software and hardware is an emulator."

"When you find that bug, you have shaved one month off your

development cycle. Now economics tells us that if you save one month out

of a 24-month development cycle, then you save 12% of the total revenue

for the product."

"We are talking about products worth 500 million dollars to a billion

dollars, and Apple makes 50 billion dollars! A 12% savings justifies the

purchase of a ten million dollar emulator many times over."

Brian Hunter, Cavium:

"Why are you saying one month? Why not say you saved three months? I mean,

if you are going to make up numbers..." [general laughter]
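Lauro did not say where his 12% comes from. One textbook model that reproduces it almost exactly is the triangular market-window model. In that model, revenue ramps up linearly to a peak at the midpoint of a market life of 2W months and then declines linearly to zero. A product that enters D months late ramps at the same rate but still peaks and exits on the market's schedule. It therefore loses a fraction D(3W - D)/(2W^2) of its revenue. The sketch below assumes, as our reading and not the panel's, that the 24 months is the full market window, so W = 12.

```python
from fractions import Fraction


def late_entry_loss(delay: int, half_window: int) -> Fraction:
    """Fraction of lifetime revenue lost by entering the market late.

    Triangular market-window model (an assumption here): the market lasts
    2*W months and revenue rises linearly to a peak at month W, then falls
    linearly to zero. A product entering D months late ramps at the same
    rate but still peaks at month W and exits at month 2*W.
    """
    if not 0 <= delay <= half_window:
        raise ValueError("model only valid for 0 <= delay <= half_window")
    w, d = Fraction(half_window), Fraction(delay)
    on_time = (2 * w) * w / 2         # triangle: base 2W, height W
    late = (2 * w - d) * (w - d) / 2  # triangle: base 2W - D, height W - D
    return 1 - late / on_time


def closed_form(delay: int, half_window: int) -> Fraction:
    """The same loss written as D(3W - D) / (2W^2)."""
    return Fraction(delay * (3 * half_window - delay), 2 * half_window**2)


if __name__ == "__main__":
    W = 12  # assumption: the 24 months is the whole market window
    for months in (1, 3):  # Lauro's one month, Brian's three months
        loss = late_entry_loss(months, W)
        print(months, loss, f"{float(loss):.1%}")
```

With W = 12, one month gives 35/288, about 12%, which matches Lauro's figure. Brian's three months would give 99/288 (11/32), about 34%. Whether Lauro actually had this model in mind is not stated.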

Jim Hogan: "I gotta talk to you guys about my problem. Baseball season has

started and I leave at 3PM to get to the ballpark by 6PM. On the way I

get passed by buses driven by somebody [Jim points toward Richard Ho from

Google, audience laughs]. And there are Lexus cars passing me with gear on

their roofs and no one is driving and it freaks me out. For the 20-year

life of a car, how are you going to test and verify every instance of

usage?"

Pranav Ashar, Real Intent:

"Let me ask you a question. Would you rather drive a car that is formally

verified or simulated?" [general audience laughter]

Prakash Note: The panel closed with Jim Hogan asking about managing products

like cars, which have a very long life, and how formal, simulation and

emulation can address high-reliability designs.

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

DVCON'16 TECHNICAL PAPERS

There was a good selection of papers covering the practical use of formal in

functional verification. Formal works best when it is applied to a specific

problem type in a design. This means only a subset of the design is

formally analyzed. To do this, structural methods first analyze the design

and then hand the relevant portion to the formal engine.

We have seen this applied to CDC, X-propagation, and timing exception

verification, for example. Given a precise specification of correctness

and manageable logical complexity, formal can be successful.

The paper "Automated Safety Verification for Automotive Microcontrollers"

by H. Busch from Infineon highlighted the opportunity for failure mode

analysis created by the enforcement of the DO-254 and ISO 26262 standards.

The standards apply to narrow application domains and force system/SOC

design companies to create solutions for narrow safety-critical problems.

The paper specifically proposes a formal approach for verifying that the

layer of hardware added to ensure system safety operates correctly

and does not itself compromise safety. This is an example of verification

complexity being in the hardware required for system integration

rather than in the core functionality itself.

As a formal-sign-off services company, Oski Technology has a history of

recognizing design problems and applying viable formal approaches to them

manually. "The Process and Proof for Formal Sign-off: A Live Case

Study" by Oski Technology captured some of what they do. At the heart of

it, their approach revolves around identifying complex logical structures

that tend to have typical patterns of failure. The failure patterns are

captured as assertions and the Oski team applies its understanding of the

logical structure to model it in an analysis-friendly manner. Not very

different from what is done for the static verification of CDC, etc.

The third paper of this type was "Verification Patterns -- taking Reuse

to the Next Level" by Harry Foster et al. of Mentor Graphics. It

attempts to take the Oski approach to the next level by crowd-sourcing

typical failure patterns. It is a great idea and a boon for technologists

looking to develop static solutions for narrow verification problems.

The downside is that the database will be available only to Mentor

Graphics. We call on them to make it available to the EDA community,

which includes not just the EDA companies but also universities. I believe

that it will create new momentum in formal verification research, with

significant reciprocal benefit to Mentor Graphics.

Mark Litterick presented a paper on "Full Flow Clock

Domain Crossing -- From Source to Si." Mark works for Verilab as a senior

verification consultant.

Even though you may have signed off CDC at RTL, logic synthesis,

design-for-test and low-power optimization tools can break CDC at the

gate level, the physical implementation stage of design. Real Intent's

Meridian products provide clock-domain crossing verification and sign-off.

Our most recent offering is Meridian Physical CDC, which provides sign-off

at the netlist level of the design.

In his conclusion, Mark states:

"The main advantage of running CDC analysis on the final netlist (as

well as the RTL sign-off stage) is to pick up additional structural

artifacts that were introduced during the back-end synthesis stages

such as additional logic on CDC signal paths that might result in

glitches, and badly constrained clock-tree synthesis -- which could

destroy an intended derived clock structure. The tools use the same

fundamental clock domain and synchronizer protocol descriptions as

the RTL phase and can therefore assess if some high-level intent

has been compromised."

His last word is:

"Finally, we would recommend a methodology with an explicit dedicated

CDC and synchronizer review as part of the final pre-silicon sign-off

criteria prior to tape-out."

Prakash Note: At Real Intent, we agree with Mark that gate-level CDC signoff

is now necessary for SoC designs.
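Mark's point about back-end steps adding logic on CDC paths can be illustrated with a toy structural check. This is a minimal sketch, not how Meridian Physical CDC or any other commercial tool works. The netlist representation, cell names and the rule itself are simplifying assumptions for illustration. Under that rule, a flop fed from another clock domain is flagged if combinational cells sit between it and the source flop, since they are a glitch risk. It is also flagged if no second flop in its own domain follows it, which means a missing 2-flop synchronizer.

```python
from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Cell:
    kind: str                    # "ff" (flip-flop) or "comb" (gate)
    clock: Optional[str] = None  # clock domain; flops only
    inputs: list = field(default_factory=list)  # names of driving cells


def source_flops(netlist, name, via_comb=False) -> Iterator[tuple]:
    """Walk back from `name` through combinational cells to driving flops.

    Yields (flop_name, via_comb), where via_comb says whether any
    combinational cell sat on the path. Assumes no combinational loops.
    """
    for drv in netlist[name].inputs:
        if netlist[drv].kind == "ff":
            yield drv, via_comb
        else:
            yield from source_flops(netlist, drv, True)


def check_cdc(netlist):
    """Return (flop, problem) pairs for suspicious clock-domain crossings."""
    issues = []
    for name, cell in netlist.items():
        if cell.kind != "ff":
            continue
        crossings = [(src, via) for src, via in source_flops(netlist, name)
                     if netlist[src].clock != cell.clock]
        if not crossings:
            continue  # only same-domain drivers: not a crossing
        for src, via_comb in crossings:
            if via_comb:  # gates before the 1st synchronizer flop can glitch
                issues.append((name, f"combinational logic on crossing from {src}"))
        # A 2-flop synchronizer needs a same-domain flop fed only by this one.
        if not any(c.kind == "ff" and c.clock == cell.clock and c.inputs == [name]
                   for c in netlist.values()):
            issues.append((name, "no second synchronizer flop"))
    return issues


# RTL view (hypothetical names): clean 2-flop synchronizer, clk_a -> clk_b.
rtl = {
    "tx_q": Cell("ff", "clk_a"),
    "en_q": Cell("ff", "clk_a"),
    "sync1": Cell("ff", "clk_b", ["tx_q"]),
    "sync2": Cell("ff", "clk_b", ["sync1"]),
}
# Netlist view: optimization has put an AND gate in front of sync1.
gates = dict(rtl, and1=Cell("comb", inputs=["tx_q", "en_q"]),
             sync1=Cell("ff", "clk_b", ["and1"]))

print(check_cdc(rtl))    # RTL view: no issues
print(check_cdc(gates))  # netlist view: AND gate on the crossing
```

The RTL view passes and the gate-level view is flagged. Both views carry the same clock-domain intent, which is why Mark recommends repeating the check on the final netlist.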

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

JIM HOGAN'S ONE-ON-ONE WITH AJOY BOSE, FOUNDER OF ATRENTA

In conjunction with DVCon, on Tuesday evening, EDAC hosted an installment

of the very popular and informative EDAC Jim Hogan Emerging Companies

Series, "Crossing the Chasm: From Technology to Valuable Enterprise" where

concepts and best practices for emerging companies are explored. This

installment featured a conversation with Ajoy Bose, former chairman,

president and CEO of Atrenta Inc. (Atrenta was recently acquired by

Synopsys.)

Dr. Bose founded Atrenta in 2001. He began his career at AT&T Bell Labs in

Murray Hill, NJ and spent 12 years there. From AT&T, he moved to Gateway,

then Cadence where he served as VP of Engineering. In this role, he managed

and led the team that pioneered the Verilog simulation products. He was

also founder and president of Software & Technologies, Inc. and Interra.

During his tenure as Chairman and CEO of Interra, Ajoy incubated and spun

out a number of companies in EDA, digital video, and IT services.

Ajoy has a BSEE from IIT-Kanpur, India, and earned an M.Sc. degree and a PhD

in Electrical and Computer Engineering from the University of Texas at Austin.

Hook 'em Horns!

Ajoy rarely speaks about himself and many parts of his story were shared

with an audience for the first time. I captured a few highlights.

- "I had just joined Gateway Design Automation, the inventors of Verilog,

which was then quickly acquired by Cadence. Within days of that, the

edict came down to open up Verilog which was proprietary at the time.

So Open Verilog was born. The good thing for me being the engineering

guy was I got a headcount of 6 people. My project was to create a

public version of Verilog that ran really slow, so people could not

do benchmarks (against our fast Verilog simulator). These were smart

people and we did it."

- "There were two major thrusts to Verilog. First it was a new simple

hardware description language (HDL) that was adopted very quickly.

And second, it was FAST. Phil Moorby tuned the heck out of the

simulator. He would rewrite core routines in assembly language to

speed things up. It got a lot of attention."

- "What really created the proliferation of Verilog was the fact that

these ASIC companies -- Motorola, COMPAQ, and others -- were

constantly on our case about having some way of dealing with timing

in some funny way, or you have to filter signal spikes, deal with

hazards, and so on. There was a lot of work done at Cadence to get

in all the practical aspects of simulation. And that got it

entrenched in the ASIC community."

- "The success of any EDA tool, even though it has these visible and

glamorous aspects to it, is the grinding away at features and

functions that really make it more and more practical and usable."

Interra was Ajoy's services company that provided EDA, digital video and

web-based technology services back in the 1990s. This led to the Atrenta

story.

"Because we had an EDA services company [Interra], there was a project at a

large semiconductor company [Intel], and they had this notion they

were going to promote re-use internally. So they wanted software

that could screen designs against a set of re-use properties

that they had defined. If your design passed these 150 things, then

your design was stamped re-usable. This was in the year-2000

time frame, before IPs were around.

"I had developed my negotiating skills by this time, and I retained

the rights to the technology even though it was licensed to them.

So we built it -- actually Bernard Murphy built it who is sitting

over there -- and called it SpyGlass. A catchy name... the product

became better known than the company [Atrenta].

"Four or five companies were also interested in re-use and we sold the

product to them in quick succession. So I thought this could lead me

to my mission in life, which is to build an EDA product company. So

this would be my company known for an EDA product."

"Being a little naive, I decided I needed to raise some venture money

for Atrenta.... I needed to build up a sales force and so on, and this

required more funds than could come from my bootstrap resources. I

received funding but there was a meltdown in the financial industry

during the Dotcom bust. It made life a lot more difficult for us.

Reuse was no longer a viable concept. It was too esoteric. Our

customers were just trying to survive in those days. We had to re-invent

ourselves, so instead of providing 'vitamins' (reuse) we started

providing 'aspirins' (solutions)."

"We had to make SpyGlass more compelling. The good thing we had done was

having created a platform on which you could expand. So we looked around

and saw that Test was becoming more of a problem and the goal of coverage

was no longer 90% but 99%. And we also realized clocks and clock-domain

crossing was becoming an important problem. So that was another thing

we added to our platform. And then we went into Power. So we slowly

expanded the product into these other domains that were becoming

critical."

"By having it across a common platform, the integration provided

differentiation against point-tool suppliers. As the industry woke

up to these challenges, 'if I don't get my clock-domain crossing right

my chip will fail' and that brought our growth momentum back again.

So it came from expanding our portfolio and making it more compelling.

The notion of targeting solutions that would become important was the

reason for our success."

"My piece of advice for people is that you never get it right the first

time. If we had just relied on 're-use' our company would not have

succeeded. Recognizing the opportunities as they show up [is crucial],

such as IPs becoming a big thing. So we tied a solution to IP sign-off,

and then SoCs. Integrating those IPs in an SoC became another area where

we could offer a solution."

Prakash Note: For over 10 years, Real Intent and Atrenta were head-to-head

competitors in the CDC verification and lint areas. We always thought we

had the better tools in terms of speed, capacity and precision. Real Intent

takes the view that having best-in-class point tools is better than a

platform solution that has compromises.

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

WHO ELSE EXHIBITED

This year DVcon'16 had 32 exhibitors, including the Big 3, plus 4 first-time exhibitors.

Cadence

Mentor Graphics + Calypto

Synopsys + Atrenta

Agnisys

Aldec

AMIQ EDA

Avery Design Systems

Blue Pearl Software

Breker Verification Systems

* CAST

DINIGroup

Doulos

EDACafe

* InnovativeLogic

Magillem Design Services

MathWorks

OneSpin Solutions

Oski Technology

PRO DESIGN Electronic

Real Intent

Rocketick

S2C

Semifore

SmartDV Technologies

Sunburst Design + Sutherland HDL

Test and Verification Solutions

Truechip Solutions

Verific Design Automation

Verification Academy

Verifyter

* Veritools

* Vtool

* -- first time showing at DVcon'16

Real Intent has been exhibiting at DVCon since the beginning. DVCon'16 was

a great show for us as the adoption of static solutions is an increasingly

important topic in verification. The booth crawl with food was a great

idea. We plan to be at DVCon for many years to come.

- Prakash Narain

Real Intent, Inc. Sunnyvale, CA

P.S. And I wish to thank Graham Bell for putting together this trip report.

---- ---- ---- ---- ---- ---- ----


Prakash Narain has 26 years of experience, spanning EDA at IBM, chip architecture at AMD, and verification for UltraSPARC IIi at Sun Microsystems. He founded Real Intent in 1999 and has the infamous Andy Bechtolsheim as an early investor. Rumor has it Prakash secretly craves peanut M&M's chocolate candy.

