( ESNUG 547 Item 6 ) -------------------------------------------- [02/27/15]

Subject: Bernard on UVM, SW-driven verification, C-to-RTL, Jim Hogan, HLS

Editor's Note: This is continued from ESNUG 547 #5 -- Bernard on DVcon.

---- ---- ---- ---- ---- ---- ----

Panel: "Did We Create The Verification Gap?"

Moderator: John Blyler of Extension Media

Panelists:

- Janick Bergeron - Synopsys

- Jim Caravella - NXP

- Harry Foster - Mentor

- Bill Grundman - Xilinx

- Mike Stellfox - Cadence

Not as exciting as the morning panel. It had its moments and I saw some

significant overlap of opinions on software with that morning panel.

The theme was "are we blindly following industry best practices rather than

focusing on finding as many bugs as possible?" (I heard the first part of

this repeated elsewhere at the conference.)

Q: "Is there such a thing as doing too much verification of the design

and does that contribute?"

- Verification is an insurance policy - you can never do too much.

This is more about balance. Verification is just one part of what

is needed: verification, validation, electrical, bring-up, etc.

If it affects the customer, it has to be tested.

- There has been a good focus on systematic approaches like UVM,

but this is focused on bottom-up verification for IPs and perhaps some

subsystems. But total system verification requires more.

- Most SoCs hardly use all the functionality put into the hardware,

hence the danger of over-verification. But different market segments

have different constraints, so it is difficult to generalize.

- If you have continuity of products, like a microprocessor, you

keep adding to your set of tests. But you will never be done.

So you need to define what is good enough - when you are done.

- We struggle a lot with system-use-cases that are fuzzy up-front,

leading to misunderstanding and probably a lot of wasted effort.

- The problem is driving programmability into the design so that

bugs can be mitigated in the field.
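The "when are you done" question is usually made concrete with a functional coverage goal. The Python below is a minimal sketch of that idea, not any panelist's actual flow: the bins (hypothetical packet-length ranges), the 100% goal, and the test budget are assumptions for illustration, and in a SystemVerilog/UVM environment this would be a covergroup rather than Python.

```python
import random


class CoverageModel:
    """Minimal functional-coverage model: named bins with hit counts."""

    def __init__(self, bins):
        self.hits = {b: 0 for b in bins}

    def sample(self, bin_name):
        # Values that map to no planned bin are ignored, not counted.
        if bin_name in self.hits:
            self.hits[bin_name] += 1

    def percent(self):
        covered = sum(1 for n in self.hits.values() if n > 0)
        return 100.0 * covered / len(self.hits)

    def is_done(self, goal=100.0):
        # "Good enough" is defined up front as a coverage percentage.
        return self.percent() >= goal


def classify(length):
    """Hypothetical binning of packet lengths (bytes) into plan items."""
    if length <= 64:
        return "small"
    if length <= 512:
        return "medium"
    return "large"


cov = CoverageModel(["small", "medium", "large"])
rng = random.Random(1)  # fixed seed so the run is reproducible
tests_run = 0
while not cov.is_done() and tests_run < 1000:  # stop at goal or test budget
    cov.sample(classify(rng.randint(1, 1500)))
    tests_run += 1
print(f"{tests_run} tests, {cov.percent():.0f}% coverage")
```

The loop has two exits, the coverage goal and a test budget, mirroring the panelist's point that "done" has to be defined rather than arrived at naturally.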

Q: "How can the SW world help with HW verification?"

- There is a big divide between software and hardware teams, and in

knowledge of use-cases at the system level. There is a cultural divide.

The big issue is getting teams together to design hardware with

software in mind.

- There is also a lack of good concurrent engineering practices in

software development. Software is often late, meaning you don't

understand the spec for sure until very late.

- Bringing hardware and software people together is not happening.

We need structural changes. Often there are teams of a hundred

on a product. In groups this large, there is a problem in

handoff -- fuzzy, incomplete documents full of opportunities for

misinterpretation.

- The analog/digital divide is as bad as hardware/software. But

this is not through lack of tools. More than enough tools are

available today for mixed signal. We lack chip designers with

experience and expertise to understand the problems and to be

able to communicate with non-specialists. We depend heavily on

superstars and that is not always repeatable.

Q: "Why so many SoC verification approaches? Could they be simplified?"

- There are multiple levels of complexity (functionality, software,

power, security domains), so you have to have multiple solutions.

Everyone wants a single hammer, but that hammer doesn't exist.

- The level you are working at usually dictates the methodology.

You wouldn't use constrained random at the SoC level, and you

wouldn't use use-cases at the IP level. You need to plan on

WHAT you want to verify. Today there is too much emphasis

on HOW to verify. There is a dangerous tendency to say: "I now

understand UVM, UVM is great, let's do all of this in UVM". If

you don't need constrained random, UVM may not be the right tool.

- You need to get the software and hardware guys together to review

the specs. You'll find a lot of bugs this way. Also repeat that

process as the design (and the specs) evolve.
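Since the split between constrained random (IP level) and use-case testing (SoC level) comes up repeatedly here, a minimal Python sketch of what constrained random means may help. It assumes a hypothetical bus IP with a 4 KB register window at an arbitrary base address and bursts of 1 to 16 words; in a UVM flow this would be written as SystemVerilog constraint blocks, not Python.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class BusTxn:
    """Hypothetical IP-level bus transaction."""
    addr: int
    burst_len: int  # number of 32-bit words
    write: bool


BASE, SIZE = 0x4000_0000, 0x1000  # hypothetical register window for the IP


def random_txn(rng):
    """Constrained random: random values, but legal by construction."""
    burst_len = rng.randint(1, 16)
    # Pick a word-aligned start so the whole burst stays inside the window.
    max_start_word = SIZE // 4 - burst_len
    addr = BASE + 4 * rng.randint(0, max_start_word)
    return BusTxn(addr, burst_len, rng.random() < 0.5)


def is_legal(t):
    """The same constraints, written as a checker."""
    return (t.addr % 4 == 0
            and BASE <= t.addr
            and t.addr + 4 * t.burst_len <= BASE + SIZE
            and 1 <= t.burst_len <= 16)


rng = random.Random(2015)
for t in (random_txn(rng) for _ in range(5)):
    print(hex(t.addr), t.burst_len, "W" if t.write else "R")
```

Generating legal stimulus by construction, rather than generating freely and discarding illegal results, is one design choice among several; in SystemVerilog the constraint solver does the equivalent work.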

Audience Q: "Why are there good solutions for IP verification but not

for SoC verification?"

- There has been a lot of focus on UVM but that is designed for

IP/subsystem bottom-up verification. UVM is not so applicable to

the SoC-level.

- Today, SoC verification is the Wild West; there are no obvious

directions. It certainly needs to be more software-driven.

- There should be more emphasis on software-directed testing as the

direction for SoC, starting with virtual models and driving that

on down. You can find issues much earlier this way. This is

definitely not mainstream today but it is picking up fast.
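A minimal sketch of what "software-directed testing, starting with virtual models" can look like: a test written the way driver code would be (program registers, start, poll for completion) running against a purely functional model. The copy-engine block, its register offsets, and every name here are hypothetical, chosen only for illustration.

```python
class VirtualDma:
    """Hypothetical register-level virtual model of a simple copy engine."""
    SRC, DST, LEN, CTRL, STATUS = 0x00, 0x04, 0x08, 0x0C, 0x10
    START, DONE = 0x1, 0x1  # bit masks in CTRL and STATUS

    def __init__(self, memory):
        self.mem = memory  # shared bytearray standing in for system RAM
        self.regs = {self.SRC: 0, self.DST: 0, self.LEN: 0,
                     self.CTRL: 0, self.STATUS: 0}

    def write(self, offset, value):
        self.regs[offset] = value
        if offset == self.CTRL and value & self.START:
            s, d, n = self.regs[self.SRC], self.regs[self.DST], self.regs[self.LEN]
            # Functional model only: the copy completes instantly, no timing.
            self.mem[d:d + n] = self.mem[s:s + n]
            self.regs[self.STATUS] |= self.DONE

    def read(self, offset):
        return self.regs[offset]


def sw_copy_test(dma, mem, src, dst, n):
    """'Software' test: drives the block only through register reads/writes."""
    mem[src:src + n] = bytes(range(n))
    dma.write(dma.SRC, src)
    dma.write(dma.DST, dst)
    dma.write(dma.LEN, n)
    dma.write(dma.CTRL, dma.START)
    while not dma.read(dma.STATUS) & dma.DONE:  # poll, as firmware would
        pass
    # A real driver would also clear STATUS; omitted in this sketch.
    return mem[dst:dst + n] == bytes(range(n))


mem = bytearray(256)
dma = VirtualDma(mem)
print(sw_copy_test(dma, mem, 0, 128, 32))  # True
```

Because the test only touches read() and write(), the same test logic could in principle be pointed at a more detailed model later by swapping the object behind those two calls; how that hookup works in a given flow is outside this sketch.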

Audience Q: "What about HLS? Could C-to-RTL be a part of the solution?"

- RTL works well today, and that is not going to change until the

process becomes simply too painful. This is a return-on-investment

question. You also shouldn't underestimate the culture shock to chip

designers. Switching from gate-level design to RTL design was a

generational shift -- a lot of old-school designers were left

behind. Switching from RTL to C-level will be worse.

- Modernizing teams is a constant challenge. You have to keep moving.

You have to keep re-training. But these steps alone don't change

anything. The teams need to understand the why, they need to be

helped through culture changes with hands-on supervision and

leadership. All of this implies increased cost and increased risk.

If the barrier is big enough, the change won't happen unless there

is really no other choice.

Q: "What about addressing spec problems - are there tool solutions?"

- The last thing we need is another tool. We need to get the system

people involved with hardware and software people up front and

throughout product development. This is much more effective than

any tool. There is no substitute for thinking - tools are not

the answer.

- A good verification plan can be one of the best specs, and that is

best developed by system, hardware and software teams working

together, discussing together. We always find tons of interpretation

problems this way. It is important to remember the plan is never

static - it needs constant re-review.

Audience Q: "Have we made verification too easy - are designers off

the hook to get it right?"

- There is a feedback mechanism. When a verification engineer finds

a bug, the designers take it personally. That's a good thing.

- Ten years ago there was a lack of verification culture; it was

not considered a career. Companies doing a good job had separate

verifiers. But walls formed, designers started throwing their

work over the wall, and that led to problems. But nobody knows

the design better than the designer. They are much better qualified

to find micro-architectural bugs. Some companies are putting more

verification burden back on designers, through linting, formal, etc.

Q: "Did standards create the verification gap? There hasn't been much

progress in 10 years..."

- The marketing view was that SystemVerilog was going to solve all

problems, and that led to too much time being spent on standards,

but not as an EDA initiative -- this was driven by customers.

Going forward, there should be more of a balance between innovation

and standardization. We would like to see more focus now on EDA

innovation rather than new EDA standards.

- We may be stuck in a verification loop because it has become so easy

to generate junk. People are not thinking so much about what they

are designing; they just run a generator, then depend on verification

to shake out the problems. This doesn't work. You can't verify

your way to success.

Audience Q: "There seems to be more inertia against adopting new tools

in hardware than in software. Why is this?"

- Hardware is more difficult to change. It takes 9 months and a whole

lot of money to make a baby chip. You also have to think about

legacy - IP, verification suites, training, etc. Hardware design

is a very fast-moving train, and there is a huge downside to being

wrong. That said, most design teams recognize that they need to

continue to evolve. Many formalize a process of change so it

becomes a part of the company R&D culture.

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

JIM HOGAN'S LUNCH ADDRESS

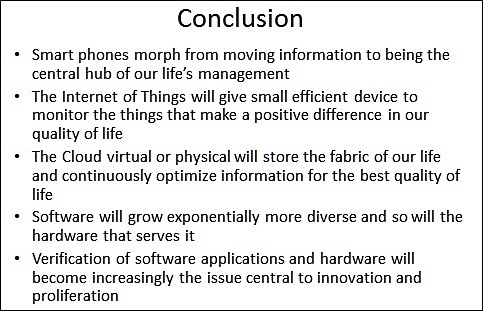

Jim addressed the "free lunch" (approx 250 attendees) with a discussion

about the shift from a single-OS-and-single-processor architecture to a

many-OS-to-many-processors ecosystem.

If you know Jim, he will include the Internet of Things in the discussion,

which he did here quite nicely. This shift to SoCs makes verification a

very difficult task. His conclusion slide sums it up well, I think.

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

DVCON PAPERS

Paper: Of Camels and committees -- Standards should enable

innovation, not strangle it

Authors: Tom Fitzpatrick and Dave Rich of Mentor

This was probably politically incorrect at DVcon, but this paper was a good

complementary fit with the message coming out of both panels. The panelists

all played down UVM verification for SoCs and they all played up software-

driven verification.

This paper was a view from the other side -- from inside the UVM committee.

Tom Fitzpatrick's key point was that standards should be careful to adopt

only the minimum that needs to be standard and should leave everything else

to competition. He felt UVM had overstepped that bound at least a couple

of times, most particularly with the 1.2 release, which adds significant

overhead, backward incompatibility, and a host of yet-to-be-discovered

problems in nearly 12,000 lines of new code, which is probably not yet well

tested. Tom gave a number of examples of added UVM features of potentially

questionable value that contributed to this state of affairs.

To me, Tom's advice resonated well with the software-driven verification

discussions. UVM -- and any standard -- should stick to its domain and

serve that domain well with standards for only what is already well

understood and only what is suitable for standardization. It should not

take on a life of its own, independent of users' needs and industry

directions. (I grant it is easy for me to take the long view - I have

never served on a standards committee.)

---- ---- ---- ---- ---- ---- ----

Paper: Signoff with Bounded Formal Verification Proofs

Authors: Vigyan Singhal of Oski Technology

NamDo Kim of Samsung Electronics

What does an inconclusive formal proof mean to an engineer? Vigyan Singhal

acknowledged that "almost all proofs result in bounded (incomplete) proofs",

but that does not mean they are not useful.

This paper presented a systematic way to understand bounded proofs. With a

little bit of analysis, the user can get more confidence in the coverage

provided by formal tools. This idea is getting wider traction in the SW

industry (we have a similar concept) and shows promise for extracting

value from an aspect of formal tools previously thought unpromising.
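To make "bounded" concrete, here is a toy Python sketch of the general idea, not the paper's methodology: it explores every state of a hypothetical 4-entry FIFO occupancy counter reachable within k cycles of reset and checks a property in each. The buggy property is an assumption chosen so that the failure only appears once the FIFO is full, which takes 4 cycles.

```python
from collections import deque

DEPTH = 4  # hypothetical FIFO capacity


def next_states(count):
    """Successor occupancies for a toy FIFO: idle, push, or pop each cycle."""
    succ = {count}  # idle
    if count < DEPTH:
        succ.add(count + 1)  # push
    if count > 0:
        succ.add(count - 1)  # pop
    return succ


def bounded_check(prop, bound, init=0):
    """Breadth-first search over all states reachable within `bound` cycles.

    Returns (holds, first_depth), where first_depth maps each reached
    state to the earliest cycle at which it appears.
    """
    first_depth = {init: 0}
    frontier = deque([init])
    while frontier:
        s = frontier.popleft()
        if not prop(s):
            return False, first_depth
        d = first_depth[s]
        if d == bound:
            continue  # do not expand past the bound
        for t in next_states(s):
            if t not in first_depth:
                first_depth[t] = d + 1
                frontier.append(t)
    return True, first_depth


def reach_depth(target, init=0, limit=100):
    """Earliest cycle at which `target` is reachable (None if never)."""
    _, depths = bounded_check(lambda s: True, limit, init)
    return depths.get(target)


buggy = lambda c: c < DEPTH  # hypothetical bug: assertion fails only when full
print(bounded_check(buggy, 3)[0])  # True: bound too shallow to see the bug
print(bounded_check(buggy, 4)[0])  # False
print(reach_depth(DEPTH))          # 4
```

The check passes at k = 3 and fails at k = 4, so a bounded result says little until the bound is compared with the depth at which the interesting states (here, FIFO full) first become reachable. That comparison is in the spirit of the analysis described above, though the paper's actual procedure may differ.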

---- ---- ---- ---- ---- ---- ----

Paper: Stepping into the UPF 2.1 World for Power Aware Verification

Authors: Amit Srivastava and Madhur Bhargava of Mentor India Ltd.

Very good paper on what's new in UPF 2.1 with lots of practical examples and

discussion on impact and limitations. Helpful to anyone new to 2.1.

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

EXHIBITORS

List - (N) denotes a first-time exhibitor

-------------------------------------

Agnisys, Inc.

Aldec, Inc.

AMIQ EDA

Atrenta Inc.

(N) Avery Design Systems, Inc.

Blue Pearl Software

Breker Verification Systems

Cadence Design Systems, Inc.

Calypto Design Systems

(N) Coverify

(N) DeFacTo Technologies

DINI Group

(N) DOCEA Power

Doulos

EDACafe.com

(N) Flexras Technologies

(N) Frobas GmbH

(N) IC Bridge

IC Manage, Inc.

InnovativeLogic, Inc.

Jasper Design Automation, Inc.

(N) Kozio, Inc.

(N) Magillem Design Services

Mentor Graphics Corp.

OneSpin Solutions GmbH

Oski Technology, Inc.

Paradigm Works, Inc.

(N) PRO DESIGN Electronic GmbH

(N) Quantum Leap Sales

(N) Quixim, Inc.

Real Intent, Inc.

S2C Inc.

Semifore, Inc.

Sibridge Technologies

(N) Sonics, Inc.

Synapse Design

Synopsys, Inc. - Corporate

(N) Truechip Solutions Pvt. Ltd

Verific Design Automation

(N) Verification Technology Inc.

Interesting Observations - Bernard's Rule of Forty:

1) Synopsys went big with 40% more space than Cadence and Mentor.

2) Of the 40 exhibitors, 15 were first-timers - which is almost 40%.

3) Cadence took over the Forte spot and converted it into a sitting area.

4) Wish I had 37 more observations...

Give Away Awards - IMHO

Most Unique: Real Intent gave away live red roses to those who asked

Most Useful: Avery had a nice sturdy flashlight

Atrenta had a dual port USB car charger (rated for iPhone)

Most $$$: Several exhibitors had raffle drawings for gift cards

or Bluetooth speakers

For Kids: Aldec had a "Funny man" clock and biz card holder

Pro Design had a squishy, stress relief robot

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

DVcon has become an important conference for Atrenta and is a refreshing

contrast to the commercialism of DAC. We had several folks with full

conference passes that year and we are doing the same next week.

- Bernard Murphy

Atrenta, Inc. San Jose, CA

---- ---- ---- ---- ---- ---- ----

Related Articles:

Bernard Murphy on DVcon, Lib-bu, SW vs. HW engineers, specs, IP

Bernard on UVM, SW-driven verification, C-to-RTL, and Jim Hogan
