( ESNUG 547 Item 5 ) -------------------------------------------- [02/27/15]

Subject: Bernard Murphy on DVcon, UVM, Lip-Bu, SW vs. HW engineers, specs

Hi, John,

I thought I'd follow this pattern and give your readers our own internal
DVcon'14 Trip Report in anticipation of DVcon'15 coming up next week.

- Bernard Murphy

Atrenta, Inc. San Jose, CA

---- ---- ---- ---- ---- ---- ----

DVCON ATTENDANCE NUMBERS

2006 :################################ 650
2007 :################################### 707
2008 :######################################## 802
2009 :################################ 665
2010 :############################## 625
2011 :##################################### 749
2012 :########################################## 834
2013 :############################################ 883
2014 :############################################ 879

Attendance was flat compared with last year (879 vs. 883), though the number
of exhibitors grew (40 this year, including 15 first-timers and 10
international).
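As a quick check on "flat," the year-over-year change can be computed from
the attendance figures in the chart above. A minimal Python sketch; the
numbers are the ones shown, and the function name is illustrative only.

```python
# DVcon attendance figures, copied from the chart above.
ATTENDANCE = {
    2006: 650, 2007: 707, 2008: 802, 2009: 665, 2010: 625,
    2011: 749, 2012: 834, 2013: 883, 2014: 879,
}


def yoy_change(data: dict[int, int]) -> dict[int, float]:
    """Percent change in attendance versus the previous year."""
    years = sorted(data)
    return {
        year: 100.0 * (data[year] - data[prev]) / data[prev]
        for prev, year in zip(years, years[1:])
    }


if __name__ == "__main__":
    for year, pct in yoy_change(ATTENDANCE).items():
        print(f"{year}: {pct:+.1f}%")
```

For 2014 this prints -0.5%, which is why "flat" is a fair description; the
2009-2010 dip also shows up as two negative years in a row.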

My main take-aways were:

- A common theme was software-driven SoC verification, and there was general
agreement on it in multiple forums. But this does not use the production
software, not even a branch of that software. This software is
purpose-built for verification.

- UVM and constrained random are great for IP and maybe some subsystems,
but not at all for SoCs. Speakers openly discouraged developing UVM, or any
other standard, in this direction.

- UVM nicely formalizes what it can do. We now need breakthrough

innovation to get us to what it can't do (well).

- Standards stifle, rather than accelerate, progress on verification for
SoCs.
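For readers outside verification, "constrained random" means generating
stimulus randomly, but only within legal bounds. A minimal Python sketch of
the idea, assuming a hypothetical bus-transaction format (address, length,
burst type) and example constraints invented here for illustration; real
flows use the SystemVerilog/UVM constraint solver, not code like this.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class BusTxn:
    """Hypothetical bus transaction, for illustration only."""
    addr: int
    length: int  # number of 4-byte beats
    burst: str   # "INCR" or "WRAP"


def random_txn(rng: random.Random) -> BusTxn:
    """Draw one transaction that satisfies some example constraints:
    - the address is word-aligned and inside a 4 KB window,
    - WRAP bursts use a power-of-two length from 2 to 16,
    - a burst never runs past the end of the 4 KB window.
    Uses simple rejection sampling: draw, check, retry.
    """
    while True:
        burst = rng.choice(["INCR", "WRAP"])
        if burst == "WRAP":
            length = rng.choice([2, 4, 8, 16])
        else:
            length = rng.randint(1, 16)
        addr = rng.randrange(0, 4096, 4)
        if addr + 4 * length <= 4096:
            return BusTxn(addr, length, burst)


if __name__ == "__main__":
    rng = random.Random(1)  # fixed seed so a failing run can be replayed
    for _ in range(5):
        print(random_txn(rng))
```

Constraints like these are easy to write for a single IP interface, and the
fixed seed makes any failure reproducible. The speakers' point was that this
style does not carry over well to full-chip SoC verification.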

There was an emphasis this year on adding chip design content alongside
verification. This seemed to come across mainly through tutorials and
papers on UPF, especially UPF 2.1 - not a bad place to start.

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

LIP-BU'S KEYNOTE ADDRESS

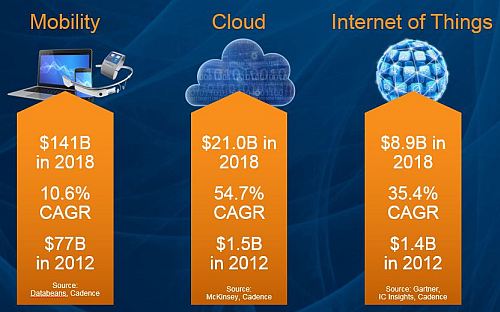

For those of you who haven't seen the stats, Lip-Bu divided the chip market
into three segments: Mobility, Cloud, and Internet of Things.

- Mobility (1B phones, 400M tablets today, 50%+ compound growth)

- Cloud (less easy to divide into units but also 50%+ compound growth)

- Internet of Things (perhaps 50B units, possible growth to >14T units)
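To put "50%+ compound growth" in perspective, a short calculation shows how
fast a quantity growing 50% per year multiplies. A minimal Python sketch;
the 50% rate is the figure quoted above, and starting from 1B units (the
phone figure) is purely illustrative arithmetic, not a forecast.

```python
import math


def years_to_multiply(rate: float, factor: float) -> float:
    """Years for a quantity growing at `rate` per year (0.5 = 50%)
    to grow by `factor`."""
    return math.log(factor) / math.log(1.0 + rate)


def project(start: float, rate: float, years: float) -> float:
    """Value after `years` of compound growth at `rate` per year."""
    return start * (1.0 + rate) ** years


if __name__ == "__main__":
    print(f"Doubling time at 50%/yr: {years_to_multiply(0.5, 2):.2f} years")
    print(f"1B after 5 years at 50%/yr: {project(1e9, 0.5, 5) / 1e9:.2f}B")
```

At 50% per year a quantity doubles in about 1.71 years and grows roughly
7.6x in five years.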

He also pointed out that systems companies are becoming much more significant

as drivers in at least the first two categories.

Amazon, Apple and Google are obvious examples. Lip-Bu talks frequently to

his friends in these and other companies (like Facebook and Twitter) to

encourage them to see hardware as a differentiator -- that ultimately they

cannot win on software alone.

Conversely, for the HW developers in the audience, Lip-Bu noted that SW,
verification, and validation account for 80% of a chip's total development
costs.

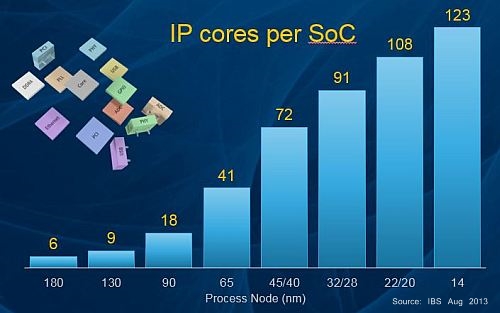

He also noted that design reuse and 3rd-party IP cores are growing
exponentially in current SoC design. (Perhaps an oblique reference to how
CDNS is expanding in IP vs. SNPS?)

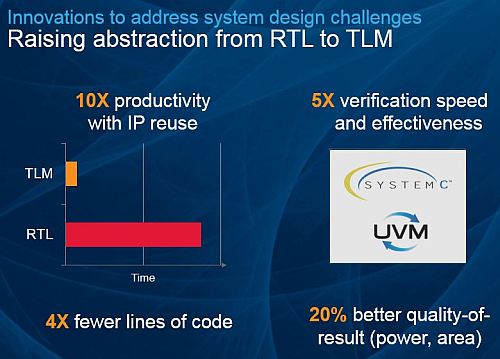

He made a pitch for the importance of ESL design, pointing to Cadence's
recent acquisition of Forte. He also stressed that system-level analysis
of characteristics like power and signal integrity is becoming increasingly
important.

Lip-Bu continues to see opportunity for innovation in silicon design, but
that innovation needs to be more aware of the ecosystem (IP, tools,
software, foundry, OEM). These pieces are becoming more strongly coupled,
so opportunities for innovation will be most prominent in the overlaps.

BERNARD'S REACTION TO LIP-BU'S KEYNOTE

This speech was a bit of a let-down. Lip-Bu gave a polished but not very
informative address. The standard complaint, that he lacks technical depth
in EDA, is a given. But we know he is a strong business leader, an expert
in finance, and an active investor. I was looking forward to a talk more
along those lines -- a nice contrast to all the UVM, UPF, formal and other
discussions that filled the week. But his DVcon keynote was really a replay
of common knowledge, even among us gear-heads.

I'm sure Lip-Bu has plenty of keen business insights. Perhaps he didn't

want to tip his hand. Perhaps his insights are competitively significant.

Who knows?

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

NOTE: To shorten this report, I have provided a digest of comments

from the panels rather than blow-by-blow quoting. Apologies in

advance to anyone whose brilliant insights I failed to include.

- Bernard Murphy, CTO of Atrenta

Panel: "Is Software The Missing Piece In Verification?"

Moderator: Ed Sperling - SemiEngineering.com

Panelists:

- Tom Anderson - Breker Systems

- Kenneth Knowlson - Intel

- Steve Chappell - Synopsys

- Sandeep Pendharkar - Vayavya Labs

- Frank Schirrmeister - Cadence

- Mark Olen - Mentor Graphics

Great discussion, not always obviously on-topic, but lively and adversarial;
the best kind of panel. Frank dominated the responses, though others also
made worthy contributions. No bad thing: Frank is an entertaining and
opinionated guy. He introduced us to the concept of "Elvis" and "non-Elvis"
software. (Elvis software is the software that leaves the building.)

Q: "What is software-driven verification, and is it different from system-

level verification or just marketing hype?"

- Software-driven verification uses the CPUs on an SoC design, running
purpose-built software, as the primary mechanism to drive full-chip
verification, rather than a testbench. In deployment, the device uses the
processors, so it is unreasonable to assume you can adequately test it
without involving them. But this is not the same as testing the production
software. Here you are using specialized software as part of the hardware
test.

- What if the design doesn't contain a CPU?

- We have seen cases where people add a simple processor, either an
open-source core or a small ARM core, to create an embedded testbench.
Driving the test from software this way is easier to handle than an SV
testbench.

- As for why this is happening now: there are so many engines involved in
verification, from TLM techniques to RTL techniques (simulation, formal,
emulation, FPGA-based). A key advantage of using software is that you can
reuse verification across many of these techniques. You can even use it
in post-silicon debug.

- Most test software is not a branch of the production software. Of course
you need to test the Elvis software at some point, but the non-Elvis
software is designed to test bring-up: that all the peripherals start in
the right order, that sort of thing.

- We are seeing that a lot more IPs these days are highly programmable. So
it makes sense that when you are verifying the design, the optimal way to
drive it is through software.

- But validating with the production software is also part of the process.
You just don't want to confuse that with more detailed verification.

- You need to be careful to distinguish what software aspect we

are talking about - production software, test software or models?

- This is not a new problem. Customers since 1995 have been using

software to drive hardware verification. It hasn't been easy but

they have been finding a way. About 10 years ago, hot-swap

technology made it simpler to switch between speed and accuracy,

and now we're getting to more clever approaches.

- As we get more processors and more code, we need to be able to

reuse it more. The more we can reuse between the Elvis and

non-Elvis software, the better.
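The idea of purpose-built, non-Elvis test software that checks bring-up and
can be reused across engines can be sketched in a few lines. This is a
host-side Python model, not firmware: in practice such a test would be C
running on the SoC's own CPU. The register names, addresses, and the `Bus`
interface are hypothetical, invented for illustration. The point is that the
test talks only to an abstract read/write interface, so in principle the
same test could be pointed at a simulator, an emulator, an FPGA prototype,
or silicon by swapping the backend.

```python
from typing import Protocol

# Hypothetical register map, invented for illustration.
CLK_CTRL, CLK_STATUS = 0x1000, 0x1004
UART_CTRL, UART_STATUS = 0x2000, 0x2004
ENABLE, READY = 0x1, 0x1


class Bus(Protocol):
    """The only thing the test depends on: register read/write."""
    def read(self, addr: int) -> int: ...
    def write(self, addr: int, value: int) -> None: ...


class ModelBus:
    """Toy stand-in for one engine (e.g. a simulator).

    The clock reports READY once enabled; the UART reports READY only if
    the clock was already running when the UART was enabled.
    """
    def __init__(self) -> None:
        self.regs: dict[int, int] = {}

    def read(self, addr: int) -> int:
        return self.regs.get(addr, 0)

    def write(self, addr: int, value: int) -> None:
        self.regs[addr] = value
        if addr == CLK_CTRL and value & ENABLE:
            self.regs[CLK_STATUS] = READY
        if addr == UART_CTRL and value & ENABLE:
            clock_ok = self.regs.get(CLK_STATUS, 0) & READY
            self.regs[UART_STATUS] = READY if clock_ok else 0


def bring_up_test(bus: Bus) -> list[str]:
    """Enable blocks in the required order and check each one comes up.

    Returns a list of failure messages; an empty list means pass.
    """
    failures: list[str] = []
    steps = [(CLK_CTRL, CLK_STATUS, "clock"), (UART_CTRL, UART_STATUS, "uart")]
    for ctrl, status, name in steps:
        bus.write(ctrl, ENABLE)
        if not bus.read(status) & READY:
            failures.append(f"{name} not ready after enable")
    return failures


if __name__ == "__main__":
    print(bring_up_test(ModelBus()) or "PASS")
```

A second backend (say, one that forwards reads and writes to an emulator or
a debug probe) would only need the same two methods; `bring_up_test` itself
would not change. That kind of reuse across engines is the advantage the
panelists attributed to software-driven tests.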

Q: "You've got a roomful of people who turned up at 8:30 in the morning
to hear about something that is almost 20 years old. Why now?"

- What has changed is that we're in the hockey stick. Diagnostic software
is the piece that is changing. And the need to run verification across
engines is now paramount, which is a big change.

- Software becomes the testbench for driving verification - and we need to
be able to reproduce bugs found post-silicon using the same testbench I ran
on the netlist.

- We see real tension between validation people and the embedded

software people. This is partly a capacity/complexity problem;

no one person understands all details about the hardware and

software. Making communication between hardware and software

more efficient is key. Is a common environment possible?

Q: "How does this change verification? What has to change?"

- You need to have a processor in the system. Almost like a next-

level BIST with an embedded processor for test. Plus you

need an education change - the verification engineer needs to

understand software - either needs to generate the software

themselves or at least understand what is happening in the

software - both Elvis and non-Elvis.

- We are finding that the validation software exercises the hardware

differently than production software. But we struggle to define

comprehensive use-cases to drive directed testing.

- Graph-based verification is one approach that can work to

communicate between different teams - it can also define a common

level between different engines.

- There was a lot of debate on graph-based approaches and some

confusion on graphical tools (undesirable) versus supporting the

concept. There seemed to be general agreement that the approach

is a good start, but less agreement about scalability to SoC.

There is a proposal to Accellera to create a standard for

graph-based design.

- Perhaps model-based approaches are the ultimate direction.
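Graph-based verification, as discussed here, describes legal scenarios as a
graph of steps and then walks the graph to generate tests. A minimal Python
sketch, assuming a made-up scenario graph for a hypothetical SoC; commercial
tools and the Accellera proposal define their own formats, which this does
not reproduce. It enumerates every start-to-end path, and each path becomes
one directed test.

```python
# Hypothetical scenario graph: each node is a step, edges are legal successors.
SCENARIO: dict[str, list[str]] = {
    "boot":       ["cpu_copy", "dma_copy", "uart_tx"],
    "cpu_copy":   ["check_mem"],
    "dma_copy":   ["check_mem", "uart_tx"],
    "uart_tx":    ["check_uart"],
    "check_mem":  ["done"],
    "check_uart": ["done"],
    "done":       [],
}


def all_paths(graph: dict[str, list[str]], start: str, end: str) -> list[list[str]]:
    """Depth-first enumeration of every path from start to end.

    Assumes the graph is acyclic; a real tool would also bound loops and
    choose paths by coverage goals rather than listing them all.
    """
    if start == end:
        return [[end]]
    paths = []
    for nxt in graph[start]:
        for tail in all_paths(graph, nxt, end):
            paths.append([start] + tail)
    return paths


if __name__ == "__main__":
    for i, path in enumerate(all_paths(SCENARIO, "boot", "done")):
        print(f"test_{i}: " + " -> ".join(path))
```

Each path is an abstract test; the same list could be rendered as C for the
embedded CPU or as a sequence for a simulation testbench, which is the sense
in which a graph can "define a common level between different engines."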

Q: "What does software-driven verification do for mixed-signal, RF, etc.?"

- Cadence is trying to understand how much of the system the customer
needs to model - not just the chip, but out to the air interface, loss due
to thunderstorms, etc. What we and our customers are still trying to figure
out is what bugs you find when you simulate everything (digital and analog)
together. Where does total system simulation add value?

- The ability to do this is still nascent. We need seamless modeling across
the flow. We need a framework to run software on, and then be able to take
that to any of the platforms to run verification. The SW people need to
start on an FPGA platform, which gives them the ability to get quick turns.
The SW people also need to understand the HW, and HW teams need to provide
SW with a reasonably validated platform.

- When SW people meet HW people, the first thing they do is exchange
business cards. EDA vendors are often enabling these meetings. Do we need a
new kind of engineer or designer who can straddle teams, i.e. SW engineers
working in the HW team?

- We are developing systems/glue engineers who build specific firmware,
working with the design people.

- Engineers building non-Elvis software are embedded people on loan

because hardware people can't write software.

- Many engineers use our LabVIEW to test post-silicon, and they are
thinking about the total system. Can the people who do this scale to the
pre-silicon task?

Q: "Can we get good coverage of an SoC? Or is it spinning out of control?

Do we need to just test to 'good enough'?"

- You can get good coverage at different levels of design, but you

are covering different things.

- If there is a good level of automation to translate between different
levels, then there can be more investment at higher levels. Otherwise it is
difficult to get engineers to invest in high-level (abstract) testing if
they cannot leverage it in other testing - that just looks like more work
with uncertain return.

- We need to figure out how to do more with less, and only with the

relevant pieces.

- We talk about language, but that doesn't have to be syntax - it is

more about communicating between teams and between engines.

- Verification reuse is key.
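One way to see why SoC-level coverage is harder than IP-level coverage is to
count the cross-product of states that a full-chip "cover everything" goal
would imply. A rough Python sketch with made-up per-block state counts
(hypothetical, chosen only to show the trend): each block alone is easy to
cover, but the combined space multiplies.

```python
from math import prod

# Hypothetical number of interesting states per block, for illustration only.
BLOCK_STATES = {"cpu": 8, "dma": 6, "uart": 5, "usb": 12, "ddr": 10, "power": 4}


def ip_level_bins(blocks: dict[str, int]) -> int:
    """Bins needed if each block is covered on its own (IP-level view)."""
    return sum(blocks.values())


def soc_cross_bins(blocks: dict[str, int]) -> int:
    """Bins needed to cover every combination of block states (full cross)."""
    return prod(blocks.values())


if __name__ == "__main__":
    print("IP-level bins:", ip_level_bins(BLOCK_STATES))
    print("SoC cross bins:", soc_cross_bins(BLOCK_STATES))
```

With these invented numbers that is 45 bins versus 115,200, which
illustrates why the panel talked about covering different things at
different levels and working only with the relevant pieces rather than the
full cross.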

Q: "Verification costs are now 80-85% of the NRE. Is there a way we can

knock down the cost?"

- Automation is always a part of the solution, but we should be

realistic. More likely we will hold steady through higher

productivity. Complexity is still growing faster than

productivity. Verification will always be an unbounded problem.

- We need to look beyond what is available today. Standards are

good, but they cement only what is already well known. We need

to look for breakthrough approaches.

- A truly seamless flow would reduce the learning curve and increase
productivity.

- Hardware verification won't go away with production software

validation. Production software testing needs weeks to run on

a real platform - that's a non-starter for hardware verification.

Non-Elvis software enablement is the real growth area.

IMHO, this was by far the best panel of DVcon'14.

---- ---- ---- ---- ---- ---- ----

Editor's Note: This is continued in ESNUG 547 #6 -- Bernard on UVM.

---- ---- ---- ---- ---- ---- ----

Related Articles:

Bernard Murphy on DVcon, Lip-Bu, SW vs. HW engineers, specs, IP

Bernard on UVM, SW-driven verification, C-to-RTL, and Jim Hogan
