( ESNUG 538 Item 12 ) ------------------------------------------- [10/17/14]

Subject: Test consultant irked at Wally's misleading cell-aware DFT launch

Hi, John,

Next week ITC will be held in Seattle. Everyone who is anyone in test
will be there. And before ITC happens, I want to call out Wally Rhines
for the misleading cell-aware launch he made last year at the SWDFT
conference in Austin...

    - Luis Basto
      DFT consultant, Austin, TX

---- ---- ---- ---- ---- ---- ----

From: [ Luis Basto, DFT consultant, lbasto=user domain=ieee.org ]

Hi, John,

To set the stage, here are my notes on what Wally said in his SWDFT keynote:

WALLY RHINES: THE 3 DISCONTINUITIES IN DFT

Wally started his keynote with some design & test history. It was the
late 1970's. TI struggled in the microprocessor business: it was difficult
to compete with Intel and Motorola, and TI microprocessors also had
quality issues.

A sea change came in 1979 with a MOS design from a renegade group inside
IBM: a custom DSP chip named "Yoda" that used something called LSSD, which
stood for "level-sensitive scan design".

At the time, designers had to provide an additional emulator chip for
every DSP or microprocessor chip that was manufactured. Not only was this
extra work, but there was no good way to keep the main design and the
emulator design in step, so the two were frequently out of sync.

The Yoda chip saved the day: with LSSD, every latch became an accessible
test point, which eliminated the need for an emulator chip.

From that point on, scan adoption was rapid at TI, and all subsequent DSP
designs included full scan.
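To make the scan idea concrete, here is a toy Python sketch of a 4-flop
scan chain. It ignores LSSD's two-phase clocking entirely. In test mode the
flops form a shift register: a pattern is shifted in, one functional clock
captures the logic's response, and the response is shifted out and compared
with the expected values. The logic function and the stuck-at-0 defect are
hypothetical.

```python
def shift_in(chain, bits):
    """Clock `bits` into the scan-in port (cell 0), one bit per shift clock."""
    chain = list(chain)
    for bit in bits:
        chain = [bit] + chain[:-1]  # every cell takes its left neighbour's value
    return chain


def load(state):
    """Shift a full pattern in so that cell i ends up holding state[i]."""
    return shift_in([0] * len(state), reversed(state))


def shift_out(chain):
    """Unload the chain; the cell nearest scan-out (the last one) comes out first."""
    return list(reversed(chain))


def good_logic(state):
    # Hypothetical logic between the flops: each flop captures the AND of
    # itself and its right-hand neighbour (wrapping around).
    n = len(state)
    return [state[i] & state[(i + 1) % n] for i in range(n)]


def faulty_logic(state):
    # Same logic with a hypothetical stuck-at-0 defect on the net feeding flop 1.
    out = good_logic(state)
    out[1] = 0
    return out


pattern = [1, 1, 1, 0]
loaded = load(pattern)                        # 4 shift clocks
expected = shift_out(good_logic(loaded))      # capture, then unload
observed = shift_out(faulty_logic(loaded))
print(loaded, expected, observed, expected != observed)
```

It prints `[1, 1, 1, 0] [0, 0, 1, 1] [0, 0, 0, 1] True`: every flop was
set and read through the chain, and the mismatch exposes the defect.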

EDA ECONOMICS

Wally said that there are some 70 tool segments in EDA that each generate
$1 million or more in revenue. Synopsys, Cadence, and Mentor dominate some
major segments -- but the smaller companies also carve out their niches.

The #1 supplier in a given EDA segment averages 68% market share in that
segment. The #2 supplier grabs most of the remaining share. The #3 and the
rest fight for air. So basically if you're not #1 or #2, you get the scraps.

Since no vendor, not even one of the Big 3, dominates all segments, a
single-vendor EDA tool flow doesn't make sense. That is, if your chip
design team needs best-in-class tools in every step of your design flow,
you will be buying tools from at least 2 of the Big 3, plus a number of
small EDA start-ups. Add verification and test, and your tool supplier
roster will include all of the Big 3, plus even more small start-ups.

DFT tools are a small part of EDA tool sales; DFT doesn't even make it
into the top 10 EDA segments.

DISCONTINUITY 1: PAST

Wally gave his famous India/Test joke:

  1. In the early 1970's and 1980's, IC testing was functional test. As
     chips got bigger and more complex, you wrote more tests.

  2. When Mentor opened up India offices, they hired more engineers to
     write functional tests.

  3. If structural techniques such as scan test had not been invented,
     the entire population of India would be needed to write enough
     functional tests.

Wally then moved on to his own personal history with test.

Wally moved from TI to Mentor in 1993 and acquired a small company called
Checklogic. It had a tool called FastScan, which was the first ATPG tool on
the market. More precisely, it was the first commercial vendor test tool.
It was written by John Waicukauski, who had worked at IBM on TestBench,
which was later sold to Cadence and became Encounter Test. John later
joined Synopsys and developed TetraMAX. "John is therefore the DFT tool
equalizer," joked Wally.

Wally admitted that in the early 90's, MENT lacked a viable test/DFT tool
set, so he felt it was a "growth" segment for MENT.

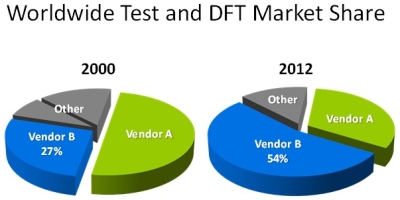

In 2000, Mentor (Vendor B) had 27% of the total DFT market. (Although
Wally never said who Vendor A was, I'm fairly sure it's Synopsys.) By 2012,
MENT had captured 54%, and it has been the leader for several years.

In the 1990's, as geometries shrank, transition fault testing became
dominant over stuck-at fault testing. With this, the number of test
vectors also exploded. The winners in this game were the makers of big
ATE boxes: Teradyne, Advantest, LTX, Agilent, Credence, etc. Companies had
to buy more testers for the ever-growing test data volume.
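A toy Python illustration of why transition tests inflate vector counts,
assuming a single 2-input AND gate and ignoring how real ATPG launches
transitions (launch-on-shift vs. launch-on-capture). A stuck-at-0 fault on
the output is caught by one vector, while a slow-to-rise fault needs an
ordered pair of vectors, with the second one captured at speed.

```python
from itertools import product

VECTORS = list(product((0, 1), repeat=2))  # (a, b) input combinations


def and2(a, b):
    return a & b


# Stuck-at-0 on the AND output: one vector whose good output is 1 exposes it.
sa0_tests = [v for v in VECTORS if and2(*v) == 1]

# Slow-to-rise on the AND output: the first vector sets the output to 0, the
# second launches a 0 -> 1 transition that must be captured at speed, so each
# test is an ordered pair (initialize, launch/capture).
str_tests = [(v1, v2) for v1 in VECTORS for v2 in VECTORS
             if and2(*v1) == 0 and and2(*v2) == 1]

print("stuck-at-0 tests:", sa0_tests)
print("slow-to-rise tests:", str_tests)
```

Every transition test occupies two vectors instead of one, and the same
holds for slow-to-fall faults, which is one reason the vector counts grew.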

"It costs more to test a transistor than to manufacture one."

- Pat Gelsinger of Intel (1999)

By 2000, the sales of ATE boxes hit $7 billion, the highest ever.

DISCONTINUITY 2: PRESENT

By 2014, it is common for stuck-at and transition fault tests to be
delivered to your chip in compressed form and decompressed on-chip.
Pattern compression (e.g. MENT TestKompress) was invented to get all those
vectors past your chip's pin I/O bottleneck.

The early compression/decompression ratios were 5X to 10X. This has since
grown to 100X to 200X -- with some designs achieving up to 1000X.

Wally added the colorful early customer reactions to TestKompress. Because
this technology could clearly save the semi houses tens to hundreds of
millions of dollars, MENT Sales priced it at a "meager $1 million per seat".

Customers balked. Some even started creating their own compression HW
in-house. But the technology stuck, and market forces evened out the
license fees. Compression technology is in widespread use today.
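A minimal sketch of why on-chip decompression works, assuming a made-up
XOR expander that fans 2 tester channels out to 6 internal scan chains.
In a typical ATPG pattern only a small fraction of scan cells are "care
bits" that need specific values; the rest are don't-cares. So the tester
only has to supply channel bits whose expansion hits the care bits. Real
decompressors are sequential and solved with linear algebra over GF(2)
across many shift cycles; this per-cycle brute-force search only shows
the idea and is not how TestKompress or any other product is implemented.

```python
from itertools import product

# Hypothetical linear (XOR) expander: row i lists which tester channels are
# XORed together to drive internal scan chain i on each shift cycle.
EXPANDER = [
    (1, 0),
    (0, 1),
    (1, 1),
    (1, 0),
    (0, 1),
    (1, 1),
]
N_CHANNELS = len(EXPANDER[0])
N_CHAINS = len(EXPANDER)


def expand(channel_bits):
    """Map one shift cycle of tester channel bits to per-chain bits (GF(2))."""
    return [sum(m & c for m, c in zip(row, channel_bits)) % 2 for row in EXPANDER]


def encode_cycle(care_bits):
    """Find channel bits whose expansion matches every specified (care) bit.

    `care_bits` maps chain index -> required value; other chains are don't-cares.
    Returns None when no channel assignment satisfies them (not encodable).
    """
    for bits in product((0, 1), repeat=N_CHANNELS):
        chains = expand(bits)
        if all(chains[i] == v for i, v in care_bits.items()):
            return bits
    return None


print("ratio:", N_CHAINS / N_CHANNELS)     # 3.0 chain bits per tester bit
print(encode_cycle({0: 1, 4: 0}))          # (1, 0)
print(encode_cycle({0: 1, 1: 1, 2: 1}))    # None: chain 2 = ch0 XOR ch1
```

The ratio here is just chains / channels. The unencodable case shows the
catch: the more care bits a pattern has, the more likely it conflicts with
the expander, which is one reason denser fault models push vector counts up.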

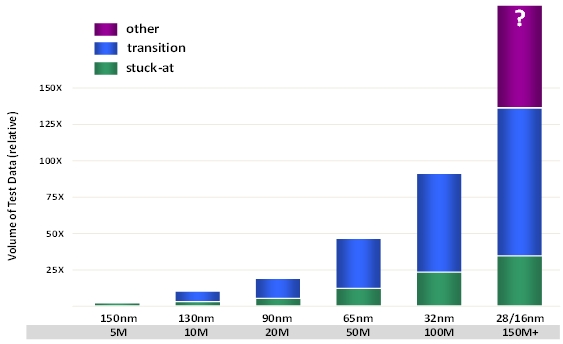

Notice the purple "? Other" part of the bar chart at 28/16 nm. Those are
the tests needed to find the faults not covered by stuck-at and transition
tests; this is Wally setting the stage for his "cell-aware" ATPG.

DISCONTINUITY 3: FUTURE

Since Wally owns the #1 DRC/LVS tool, Calibre, and MENT does equally well
in DFT, why not make a Calibre-Test tool to tackle the uglier physical
problems that are showing up at 20 nm and with FinFETs?

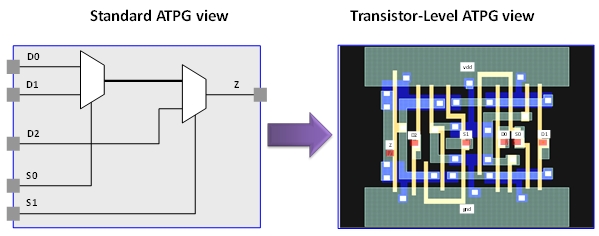

Mentor calls their technology "transistor-level" ATPG or "cell-aware"
ATPG. As the left side of the slide shows, most ATPG tools today deal only
with pin faults, i.e. faults on the input or output pins of a library cell.

On the right side, cell-aware ATPG looks at potential defects inside a
cell or gate. It takes more physical defects into account, such as oxide
opens, bridges, etc.

The more complex the gate, the more internal defects it may have, and the
more sense it makes to use "cell-aware" ATPG.
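A minimal sketch of the pin-fault view, assuming a plain NAND2 with stuck-at
faults only on its pins A, B and output Y. That gives six faults, and the
three vectors 01, 10 and 11 detect all of them. Nothing inside the cell
(individual transistors, opens, bridges) exists in this model at all.

```python
from itertools import product

VECTORS = list(product((0, 1), repeat=2))  # (A, B) input combinations
PIN_FAULTS = [(pin, v) for pin in "ABY" for v in (0, 1)]  # (pin, stuck value)


def nand2(a, b, fault=None):
    """Gate-level NAND2; `fault` is (pin, stuck_value) on pin 'A', 'B' or 'Y'."""
    if fault and fault[0] == "A":
        a = fault[1]
    if fault and fault[0] == "B":
        b = fault[1]
    y = 1 - (a & b)
    if fault and fault[0] == "Y":
        y = fault[1]
    return y


def detects(vector, fault):
    """A vector detects a fault if the faulty output differs from the good one."""
    return nand2(*vector) != nand2(*vector, fault=fault)


test_set = [(0, 1), (1, 0), (1, 1)]
covered = {f for f in PIN_FAULTS if any(detects(v, f) for v in test_set)}
print(len(covered), "of", len(PIN_FAULTS), "pin faults detected")
```

It reports 6 of 6, so by the pin-fault metric this cell is fully tested.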

Wally said that using MENT "cell-aware" ATPG:

  - AMD improved their wafer sort by 885 DPM on one processor.

  - ON Semi, which ships automotive parts requiring single-digit DPM,
    was surprised to find that cell-aware tests caught an extra 88 DPM
    on one product.

  - ITC 2012 paper 11.3, "Modeling Faults in FinFET Logic", shows 40 M
    parts tested with improvements of 100-900 DPM.

Wally added that "Upcoming FinFET designs will require transistor-level
test to meet acceptable quality levels."

It was during the follow-up Q&A that Wally added the interesting news:

  - Wally estimates that "cell-aware" ATPG will increase the total
    volume of test vectors by 50% or more.

  - Because of lessons MENT learned from the early TestKompress sales,
    Wally said that MENT was giving "cell-aware" ATPG for free to all
    existing TestKompress customers. That is, it would be a $0 upgrade
    to TestKompress.

That last claim is where I take offense.

---- ---- ---- ---- ---- ---- ----

FIRST, MY RANT ABOUT TEST STANDARDS

Now, since this is right before ITC, I have one basic complaint about test
in general that I must make before dissecting Wally's "free" cell-aware
claim.

The following is a partial list of standards for test. The biggest and
most widespread is JTAG, IEEE 1149, which is further subdivided into:

  1149.1-2001 - Standard Test Access Port and Boundary Scan
                Architecture, which is superseded by

  1149.1-2013 - The original was ~200 pages. The new one is
                440 pages. In widespread use.

  1149.4      - Mixed-signal test bus. Not too many use this.

  1149.6      - Boundary scan of high-speed AC-coupled signals.
                Used by designs that need it. An update is
                also in the wings.

  1149.7      - Standard for Reduced-Pin and Enhanced Functionality
                Test Access Port and Boundary Scan Architecture.
                Sometimes known as cJTAG. The standard is about
                1200 pages. Very few companies use it.

And also:

  1450.6      - Core Test Language.

  1500        - Standard for Embedded Core Test.

  P1687       - This is IJTAG, or internal JTAG. Standard for Access
                and Control of Instrumentation Embedded within a
                Semiconductor Device. Only 300 pages.

  P1838       - Standard for Test Access Architecture for 3-D
                Stacked Integrated Circuits.

The "P" in front of the last two numbers means they are working drafts,
although P1687 will become a certified standard Real Soon Now (RSN).

Which one should I use? Or which three? Some of the hardware standards
also introduce new software standards. For example, 1149.1 requires the
Boundary Scan Description Language (BSDL). 1500 introduced 1450.6, the Core
Test Language (CTL). P1687 created two new languages: the Procedural
Description Language (PDL) and the Instrument Connectivity Language (ICL),
which kind of looks like a subset of Verilog.

My problem is that even with all this bureaucracy, there is no single,
small, simple set of standards that everyone agrees on! It's a nightmare
of juggling what the customer thinks he wants, what the EDA tools can
currently do, and what the board manufacturers suggest.

Is there some way we can cut this back? Or at least agree on a minimal
set that's OK?

---- ---- ---- ---- ---- ---- ----

NOW MY RANT ABOUT WALLY'S "FREE" CELL-AWARE ATPG

I must compliment Wally on MENT finally coming out with a better flavor of
cell-aware ATPG inside TestKompress. With the DPPM data he's showing, his
tool clearly leapfrogs Cadence and Synopsys.

Cadence has had cell-aware support in its ATPG tool for a while (since
about 7 years ago), but it requires Conformal Custom. In addition, Mentor
cell-aware ATPG is more accurate because it uses layout data plus
transistor connectivity; the Cadence tool uses just transistor
connectivity. Synopsys uses slack-based transition delay fault testing,
similar to Cadence's dynamic True Time test.

I will now prove:

Wally's cell-aware upgrade to TestKompress costs ~$120,000.

What Wally failed to mention is that to use his cell-aware ATPG, your
company must first go through several "pre-steps":

  1. For a TSMC 28 nm lib, you have ~300 std cells. There are 4-6
     transistors in an inverter, and 50-200 transistors in a flip-flop.
     There are 6 RC parameters for each. SPICE simulations are done on
     PVT-RC corners, so 5 x 3 x 3 x 6 == 270 corners.

     The total number of SPICE simulations is:

           1 sim per PVT-RC corner x
         270 corners per std cell  x
         300 std cells in the lib  == 81,000 SPICE runs.

     Even with 100 SPICE licenses, this takes 810 rounds of 100 parallel
     jobs, and then your engineers have to analyze the results. And this
     assumes your engineers have already done all the scripting ahead of
     time to automate it.

  2. I don't have data for Calibre LDE extraction to catch all the
     layout-dependent effects, but I'm sure it is comparably involved
     to the SPICE verification runs.

  3. TSMC 28 nm comes in 5 libs: HP, LP, HPL, HPM, and HPC.

  4. This requires $$$ for SPICE licenses, Calibre licenses, HW to run
     them, plus engineering man-hours.
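The run count in step 1 is easy to check in a few lines of Python. Reading
5 x 3 x 3 x 6 as 5 process, 3 voltage, 3 temperature and 6 RC corners is
an assumption, as is the last figure, which simply multiplies by the 5 libs
of step 3 in case each lib needs the same treatment.

```python
# Counts from the estimate above. The process/voltage/temperature/RC split of
# 5 x 3 x 3 x 6 is an assumption; only the product (270) is from the text.
PROCESS, VOLTAGE, TEMPERATURE, RC = 5, 3, 3, 6
SIMS_PER_CORNER = 1
CELLS_PER_LIB = 300
SPICE_LICENSES = 100
LIBS = 5  # HP, LP, HPL, HPM, HPC (assumes every lib needs the same runs)

corners = PROCESS * VOLTAGE * TEMPERATURE * RC            # 270
runs_per_lib = SIMS_PER_CORNER * corners * CELLS_PER_LIB  # 81,000
rounds = -(-runs_per_lib // SPICE_LICENSES)               # ceiling division: 810

print(f"{corners} corners, {runs_per_lib:,} runs per lib, "
      f"{rounds} rounds of {SPICE_LICENSES} jobs, "
      f"{runs_per_lib * LIBS:,} runs for all {LIBS} libs")
```

It prints `270 corners, 81,000 runs per lib, 810 rounds of 100 jobs,
405,000 runs for all 5 libs`.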

Now for the DFT part: these results are gathered to derive a "logical"
fault model. The standard stuck-at fault model deals with inputs or
outputs that are tied to power or ground, and the transition fault model
deals with inputs or outputs that are slow-to-rise or slow-to-fall.
Cell-aware faults, by contrast, may model a gate-to-drain bridging fault
inside the cell, which is more troublesome.
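To illustrate what deriving a "logical" fault model from transistor-level
behavior can look like, here is a toy switch-level NAND2 in Python. It is
a sketch of the general idea, not Mentor's flow, which per the text uses
layout data and SPICE-level analysis. It models only transistor stuck-opens
and drain-source shorts, not the gate-to-drain bridge mentioned above. For
each defect it lists single vectors that flip the output, vector pairs that
expose a floating output, and vectors that cause a pull-up/pull-down fight.

```python
from itertools import product

# Hypothetical switch-level NAND2: two parallel pMOS pull-ups and two series
# nMOS pull-downs. pMOS conducts when its gate is 0; nMOS when its gate is 1.
TRANSISTORS = {"pA": ("p", "A"), "pB": ("p", "B"), "nA": ("n", "A"), "nB": ("n", "B")}
VECTORS = list(product((0, 1), repeat=2))  # (A, B) input combinations


def conducts(name, inputs, defect):
    """Is transistor `name` on? `defect` is (name, 'open'|'short') or None."""
    if defect == (name, "open"):
        return False  # stuck-open: never conducts
    if defect == (name, "short"):
        return True   # drain-source short: always conducts
    kind, gate = TRANSISTORS[name]
    return inputs[gate] == (0 if kind == "p" else 1)


def nand2(a, b, defect=None):
    """Return 0, 1, 'Z' (output floating) or 'X' (pull-up/pull-down fight)."""
    inputs = {"A": a, "B": b}
    up = conducts("pA", inputs, defect) or conducts("pB", inputs, defect)
    down = conducts("nA", inputs, defect) and conducts("nB", inputs, defect)
    if up and down:
        return "X"
    if up:
        return 1
    if down:
        return 0
    return "Z"


def fault_model(defect):
    """Derive the 'logical' view of one internal defect."""
    good = {v: nand2(*v) for v in VECTORS}
    bad = {v: nand2(*v, defect=defect) for v in VECTORS}
    # Single vectors that flip the output outright (no timing needed).
    static = [v for v in VECTORS if bad[v] in (0, 1) and bad[v] != good[v]]
    # A floating output keeps its old value: the pair (v1, v2) detects the
    # defect if v1 leaves the opposite of v2's good value on the output.
    pairs = [(v1, v2) for v1 in VECTORS for v2 in VECTORS
             if bad[v2] == "Z" and bad[v1] in (0, 1) and bad[v1] != good[v2]]
    # Contention: the logic value depends on drive strengths (IDDQ-style target).
    fights = [v for v in VECTORS if bad[v] == "X"]
    return static, pairs, fights


for d in [("pA", "open"), ("nA", "open"), ("pA", "short"), ("nA", "short")]:
    print(d, fault_model(d))
```

For the pA stuck-open defect, the only detecting test is 11 followed by 01.
A stuck-at-complete set such as {01, 10, 11} catches it only if it happens
to apply those two vectors in that order, which is the kind of
cell-internal requirement a cell-aware fault model is meant to capture.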

One rule of thumb I've heard is that a std cell library verification takes
a team of 3 engineers about 4 weeks. That's 3 engineers x 20 days x 8
hours, which is 480 man-hours. 5 libs is 2,400 man-hours. Assume 1
engineering man-hour costs $100. 5 libs is therefore 2,400 x $100 ==
$240,000.

Now assume you can get some economies of scale by doing all 5 libs at
once; you should be able to cut this cost in half. So, after all the
estimations:

    Wally's so-called "free" cell-aware ATPG actually costs $120 K!
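The same back-of-the-envelope estimate as a small Python function, so the
assumptions (team size, duration, hourly rate, and the 50% saving from doing
all 5 libs at once) can be varied. The function and parameter names are my
own; the default values are the ones from the estimate above.

```python
def characterization_cost(engineers=3, days=20, hours_per_day=8,
                          libs=5, dollars_per_hour=100, batch_factor=0.5):
    """Back-of-the-envelope library verification cost, per the estimate above."""
    hours_per_lib = engineers * days * hours_per_day   # 3 x 20 x 8 = 480
    total_hours = hours_per_lib * libs                 # 480 x 5 = 2,400
    full_cost = total_hours * dollars_per_hour         # 2,400 x $100 = $240,000
    batched_cost = round(full_cost * batch_factor)     # half of that: $120,000
    return hours_per_lib, total_hours, full_cost, batched_cost


print(characterization_cost())
```

It prints `(480, 2400, 240000, 120000)`. For example,
`characterization_cost(dollars_per_hour=150)` puts the batched cost at
$180,000.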

Is this bad? Not really. This is useful for chips with a long life cycle,
like automotive electronics that have to run for 10+ years, or for
critical medical applications like implanted heart pacemakers.

But it's not free.

    - Luis Basto
      DFT consultant, Austin, TX

Editor's Note: I felt Luis was especially qualified to write this
because he worked on "cell-aware" ATPG at Cadence back in 2007.
His CDNlive'07 paper on it with Freescale is #69 in Downloads.
Luis does DFT consulting. He's lbasto at ieee dot org.  - John
