( ESNUG 537 Item 4 ) -------------------------------------------- [02/28/14]

Subject: Mark Wallace of Cavium's Jasper User Group (JUG'13) Trip Report

> - The Jasper User Group Meeting is coming up on Oct. 22-23 at the

> Cypress Hotel in Cupertino, CA. They'll be having user-lead

> Birds-of-a-Feathers on: Proof Grids, Property synthesis, IP-XACT,

> Clock & Reset Set-up and verification, Low power verification,

> AMBA Proofkit, Protocol verification, Security path verification.

>

> - from http://www.deepchip.com/items/0528-04.html

From: [ Mark Wallace of Cavium ]

Hi John,

This is a trip report about my experiences at the Jasper User Group meeting

(JUG 2013), which was held in Silicon Valley towards the end of October.

I am a Senior Design Verification Engineer at Cavium in San Jose. I work

closely with designers to verify internal blocks as well as system-level

scenarios in the network chips.

The two-day meeting covered 14 user presentations and five presentations by

Jasper R&D, together with 8 user-led "birds-of-a-feather" discussions and 7

demo stations.

The user presentations were from: Applied Micro, ARM, Broadcom, Juniper

Networks, Nvidia, Samsung, Sony, and STMicroelectronics, and two "must read"

presentations for new formal users. The 5 Jasper presentations covered low

power verification, security verification, coverage, debugging, and

behavioral property synthesis. Around 150 user attended, from the U.S,

Europe, and Japan.

Offices in Mountain View / Sweden/ Brazil / Israel

130 employees, 70 in R&D

Many are PhD's and experts in formal

Highest # of formal R&D in EDA; >3X nearest competitor

The user-led birds-of-a-feather sessions covered low power verification;

security verification; protocol verification; property synthesis; coverage;

clock/reset verification; IP-XACT; efficient allocation of properties,

proof engines and licenses across available compute resources, using

Jasper's ProofGrid.

USER PRESENTATIONS

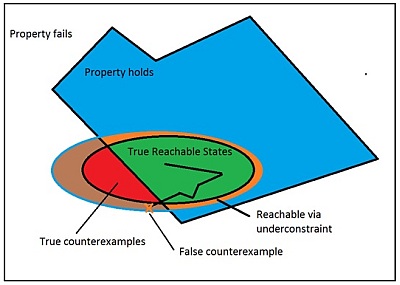

- APPLIED MICRO Keith Schakel gave an excellent primer on adopting and

using formal verification - one of the two "must reads" for new formal

users. It covered:

Inputs to JasperGold

Outputs from JasperGold

State definition

State space

Reachable and unreachable states

Full proofs

Counterexamples

Bounded proofs

State space explosion

Underconstraining

Overconstraining

Coverage

Concluded with "Counter-intuitive Truths About Formal" rundown:

Free inputs automatically take every possible value, every

combination of values, and every sequence of every possible

combination of values; testbenches are unnecessary for

formal; and proofs are valid even if huge chunks of the

design are abstracted away.

---- ---- ---- ---- ---- ---- ----

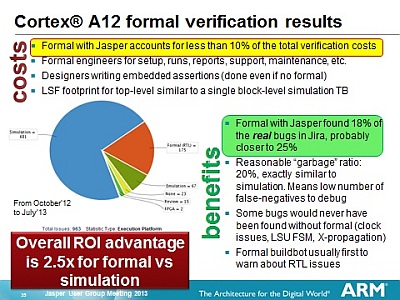

- ARM Laurent Arditi showed how they used Jasper formal - mostly at

the top level - to verify the Cortex A12 in Jira. Jasper found

25 percent of the 963 real bugs, but constituted only 10 percent

of the total verification effort.

Concluded with "Counter-intuitive Truths About Formal" rundown:

Free inputs automatically take every possible value, every

combination of values, and every sequence of every possible

combination of values; testbenches are unnecessary for

formal; and proofs are valid even if huge chunks of the

design are abstracted away.

---- ---- ---- ---- ---- ---- ----

- ARM Laurent Arditi showed how they used Jasper formal - mostly at

the top level - to verify the Cortex A12 in Jira. Jasper found

25 percent of the 963 real bugs, but constituted only 10 percent

of the total verification effort.

Some bugs, such as clock issues, X-propagation and load store unit

(LSU) FSM bugs would never have been found by simulation. The

presentation showed how formal can "please your project manager,

too" by significantly boosting verification ROI.

---- ---- ---- ---- ---- ---- ----

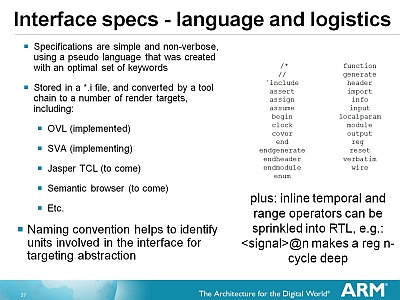

- ARM Alex Netterville showed how they used Jasper's formal apps to

verify the memory interfaces for a new processor design (Pelican).

Some bugs, such as clock issues, X-propagation and load store unit

(LSU) FSM bugs would never have been found by simulation. The

presentation showed how formal can "please your project manager,

too" by significantly boosting verification ROI.

---- ---- ---- ---- ---- ---- ----

- ARM Alex Netterville showed how they used Jasper's formal apps to

verify the memory interfaces for a new processor design (Pelican).

This enabled ARM to test a functional unit in its own unit-level

formal bench, isolated from the rest of the design; to run top-level

formal to check the interactions of all functional units; and to use

black-box abstraction to verify a functional unit within a "whole

design" context. The team used Jasper's Visualize tool to analyze

interface models, both in checking expected interface specification

behavior and in exploring back-to-back request patterns.

---- ---- ---- ---- ---- ---- ----

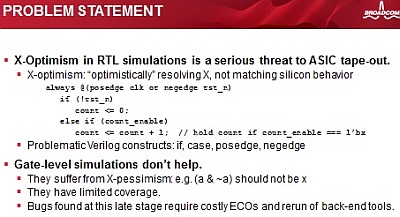

- BROADCOM Shuqing Zhao described using formal to detect X-propagation

in a power management controller for a quad-core processor. They used

formal reset analysis to detect uninitialized flip-flops and Jasper's

X-Propagation Verification App to analyze the identified RTL blocks.

This enabled ARM to test a functional unit in its own unit-level

formal bench, isolated from the rest of the design; to run top-level

formal to check the interactions of all functional units; and to use

black-box abstraction to verify a functional unit within a "whole

design" context. The team used Jasper's Visualize tool to analyze

interface models, both in checking expected interface specification

behavior and in exploring back-to-back request patterns.

---- ---- ---- ---- ---- ---- ----

- BROADCOM Shuqing Zhao described using formal to detect X-propagation

in a power management controller for a quad-core processor. They used

formal reset analysis to detect uninitialized flip-flops and Jasper's

X-Propagation Verification App to analyze the identified RTL blocks.

It detected 4 flip-flops that were not initialized by reset, despite

prior "comprehensive" UVM-constrained random simulation. It also

detected one flop that caused a "nasty bug" in the state-machine

transition after reset.

---- ---- ---- ---- ---- ---- ----

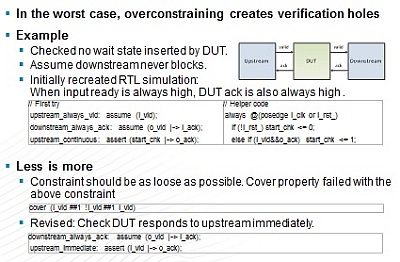

- BROADCOM William Su highlighted the different outcomes in applying

constraints in formal vs. simulation - the 2nd of the two "must reads"

for new formal users. Using the formal results from 4 case studies

in live projects, the presentation concluded that "less is more."

It detected 4 flip-flops that were not initialized by reset, despite

prior "comprehensive" UVM-constrained random simulation. It also

detected one flop that caused a "nasty bug" in the state-machine

transition after reset.

---- ---- ---- ---- ---- ---- ----

- BROADCOM William Su highlighted the different outcomes in applying

constraints in formal vs. simulation - the 2nd of the two "must reads"

for new formal users. Using the formal results from 4 case studies

in live projects, the presentation concluded that "less is more."

In contrast to a constrained-random testbench, where more constraints

can aid bug detection, over-constraining a formal tool can have the

opposite result, significantly limiting its ability to explore the

state space and to detect corner case bugs. In the worst case, over-

constraining can leave verification holes. The presentation showed

that it was easier to achieve convergence by

(a) using fewer constraints

and

(b) using cover properties to determine which functionality

has been covered.

---- ---- ---- ---- ---- ---- ----

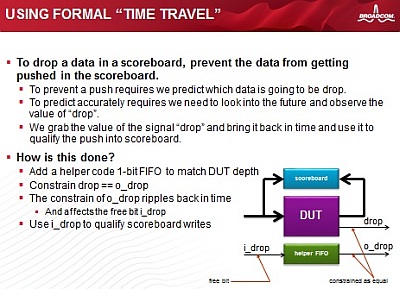

- BROADCOM Normando Montecillo won "best paper" award for his talk on

an innovative use of Jasper's Scoreboard proof accelerator. The task

was to verify a design-under-test (DUT) that had the legal ability to

occasionally throw away data. In the conventional use of Scoreboard,

all input data must appear at the outputs, and there is no means to

remove data that has been input to the tool.

In contrast to a constrained-random testbench, where more constraints

can aid bug detection, over-constraining a formal tool can have the

opposite result, significantly limiting its ability to explore the

state space and to detect corner case bugs. In the worst case, over-

constraining can leave verification holes. The presentation showed

that it was easier to achieve convergence by

(a) using fewer constraints

and

(b) using cover properties to determine which functionality

has been covered.

---- ---- ---- ---- ---- ---- ----

- BROADCOM Normando Montecillo won "best paper" award for his talk on

an innovative use of Jasper's Scoreboard proof accelerator. The task

was to verify a design-under-test (DUT) that had the legal ability to

occasionally throw away data. In the conventional use of Scoreboard,

all input data must appear at the outputs, and there is no means to

remove data that has been input to the tool.

To identify data that should be prevented from entering the tool, the

team devised an approach in which the tool's output is constrained to

observe the data to be dropped. These values are then used to qualify

the data input to the tool. "This technique appears to defy logic and

causality in the simulation world, but works in the formal world.

There are more clever techniques waiting to be discovered, we just

need to remove some of our preconceived boundaries."

---- ---- ---- ---- ---- ---- ----

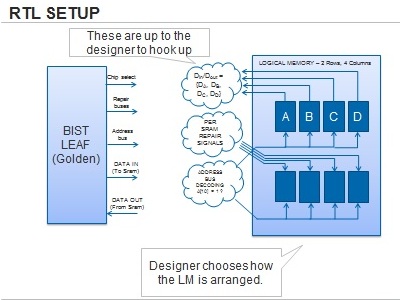

- JUNIPER Rohit Date used the Jasper Connectivity Verification App for

the verification of BIST repair in a chip with 5,000 SRAM instances.

To identify data that should be prevented from entering the tool, the

team devised an approach in which the tool's output is constrained to

observe the data to be dropped. These values are then used to qualify

the data input to the tool. "This technique appears to defy logic and

causality in the simulation world, but works in the formal world.

There are more clever techniques waiting to be discovered, we just

need to remove some of our preconceived boundaries."

---- ---- ---- ---- ---- ---- ----

- JUNIPER Rohit Date used the Jasper Connectivity Verification App for

the verification of BIST repair in a chip with 5,000 SRAM instances.

They had decided against simulation because of its laborious flow and

long run/debug times. Juniper chose formal because of its ability to

verify each connection's property in isolation and its strong

correlation between property and bug, yielding faster turnaround and

debug. The team found 7 critical bugs in 2 months. Deployment time

was 6 weeks, including devising the parameters of the flow, building

the flow, running pilot test cases, and using the results to perfect

the flow to ensure that it would work on all blocks. The flow was

developed towards the end of the design schedule, but is reusable on

a "verify as you go" basis in future projects.

---- ---- ---- ---- ---- ---- ----

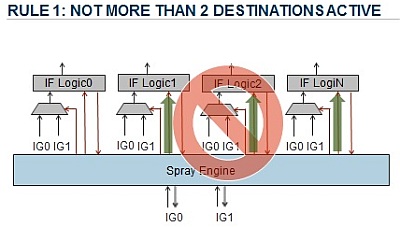

- JUNIPER Anamaya Sullerey presented the bring-up and verification of a

load balancer. Simulation was used as the sign-off method, so only

a limited amount of time was allocated to formal verification.

They had decided against simulation because of its laborious flow and

long run/debug times. Juniper chose formal because of its ability to

verify each connection's property in isolation and its strong

correlation between property and bug, yielding faster turnaround and

debug. The team found 7 critical bugs in 2 months. Deployment time

was 6 weeks, including devising the parameters of the flow, building

the flow, running pilot test cases, and using the results to perfect

the flow to ensure that it would work on all blocks. The flow was

developed towards the end of the design schedule, but is reusable on

a "verify as you go" basis in future projects.

---- ---- ---- ---- ---- ---- ----

- JUNIPER Anamaya Sullerey presented the bring-up and verification of a

load balancer. Simulation was used as the sign-off method, so only

a limited amount of time was allocated to formal verification.

The team detected most of the bugs/issues with 9 basic properties,

saving many weeks of simulation-based discovery and debug time. The

first implementation of the load balancer took 3 days to verify, with

each bug taking only a couple of hours to find and debug. However,

verification of the circuit after architecture modification was much

faster because the properties and infrastructure remained the same.

Although the original goal was to use formal verification only as a

"first line of defense," subsequent simulation found no further bugs.

---- ---- ---- ---- ---- ---- ----

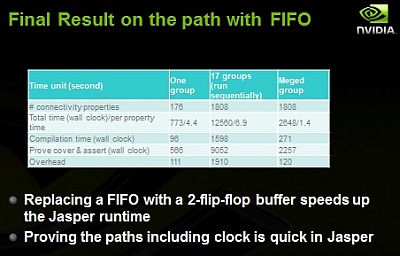

- NVIDIA Scott Xu described how they took just 10 hours to verify the

connectivity on a multicore SoC, using Jasper formal. There were

40,000 paths, with registers and asynchronous FIFOs along the paths,

and 150 clocks. The team reported Jasper has "good performance, easy

setup, easy debug, and good local support." They also noted that

Jasper has a customized function for reverse connectivity extraction.

The team detected most of the bugs/issues with 9 basic properties,

saving many weeks of simulation-based discovery and debug time. The

first implementation of the load balancer took 3 days to verify, with

each bug taking only a couple of hours to find and debug. However,

verification of the circuit after architecture modification was much

faster because the properties and infrastructure remained the same.

Although the original goal was to use formal verification only as a

"first line of defense," subsequent simulation found no further bugs.

---- ---- ---- ---- ---- ---- ----

- NVIDIA Scott Xu described how they took just 10 hours to verify the

connectivity on a multicore SoC, using Jasper formal. There were

40,000 paths, with registers and asynchronous FIFOs along the paths,

and 150 clocks. The team reported Jasper has "good performance, easy

setup, easy debug, and good local support." They also noted that

Jasper has a customized function for reverse connectivity extraction.

Despite using 40 simulation licenses per job, simulation could not

cover all source signals; and could not verify all registers and

asynchronous FIFOs; and could not check time latency from source to

destination. They said Cadence IFV could not support parallel

processing of their 40,000+ properties. Even after partitioning

the verification tasks, IFV's regression time was still more than

3 days, and it was difficult to debug.

---- ---- ---- ---- ---- ---- ----

- NVIDIA Homayoon Akhiani used JG-COV App's reset analysis and debug

to find the logic that takes the longest time to reset.

Despite using 40 simulation licenses per job, simulation could not

cover all source signals; and could not verify all registers and

asynchronous FIFOs; and could not check time latency from source to

destination. They said Cadence IFV could not support parallel

processing of their 40,000+ properties. Even after partitioning

the verification tasks, IFV's regression time was still more than

3 days, and it was difficult to debug.

---- ---- ---- ---- ---- ---- ----

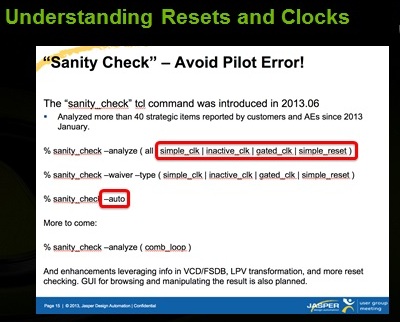

- NVIDIA Homayoon Akhiani used JG-COV App's reset analysis and debug

to find the logic that takes the longest time to reset.

With the help of appropriate black boxing, the team systematically

analyzed the SoC design to identify long-delay resets, enabling them

to devise a less time-consuming reset methodology. The team also

used Jasper's Visualize, which automatically generates waveforms

without the need for a testbench. The methodology found reset

problems missed by simulation (by extra-long resets), and some

gated clocks that did not clock during reset.

---- ---- ---- ---- ---- ---- ----

- NVIDIA Syed Suhaib used Jasper Sequential Equivalency Checking (SEC)

App to verify clock-gating schemes used in dynamic power reduction.

He emphasized that clock-gating verification is often left until

late in the design, and inadequate verification can mean "lights out"

for large sections of the chip; severe violation of the power specs;

costly engineering change orders (ECOs); and even re-spins.

With the help of appropriate black boxing, the team systematically

analyzed the SoC design to identify long-delay resets, enabling them

to devise a less time-consuming reset methodology. The team also

used Jasper's Visualize, which automatically generates waveforms

without the need for a testbench. The methodology found reset

problems missed by simulation (by extra-long resets), and some

gated clocks that did not clock during reset.

---- ---- ---- ---- ---- ---- ----

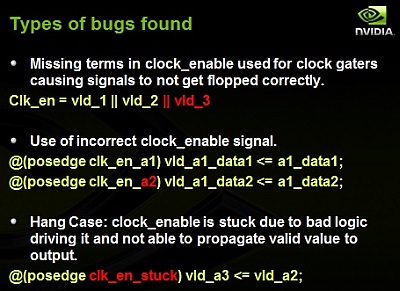

- NVIDIA Syed Suhaib used Jasper Sequential Equivalency Checking (SEC)

App to verify clock-gating schemes used in dynamic power reduction.

He emphasized that clock-gating verification is often left until

late in the design, and inadequate verification can mean "lights out"

for large sections of the chip; severe violation of the power specs;

costly engineering change orders (ECOs); and even re-spins.

NVIDIA used the SEC App to compare IP blocks free of clock-gating with

their clock-gated versions. They said the App implemented a 15 minute

automatic mapping of the two designs' I/O, and automatic generation of

all requisite assertions. Debug was straightforward because the App's

GUI places the two DUT instances side-by-side, making mismatches

obvious to identify and debug. The 20 man team created a script which

automatically plotted the mismatches between signals until the first

failure was found. The SEC App found 41 clock gating bugs, 50% of

which were found after supposedly high-coverage simulation.

The presenter said simulation was "necessary but not sufficient."

---- ---- ---- ---- ---- ---- ----

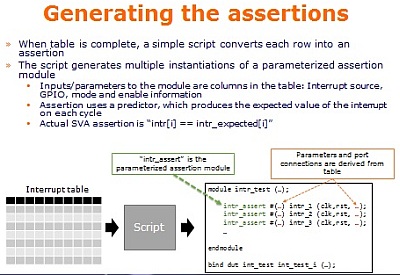

- SAMSUNG Joanna Zhang showed how they used formal verification to devise

an automated flow to verify a CPU interrupt scheme consisting of 200

interrupt lines. Instead of manually writing 200 assertions, the team

used Jasper's Visualize tool to generate waveforms for each interrupt.

NVIDIA used the SEC App to compare IP blocks free of clock-gating with

their clock-gated versions. They said the App implemented a 15 minute

automatic mapping of the two designs' I/O, and automatic generation of

all requisite assertions. Debug was straightforward because the App's

GUI places the two DUT instances side-by-side, making mismatches

obvious to identify and debug. The 20 man team created a script which

automatically plotted the mismatches between signals until the first

failure was found. The SEC App found 41 clock gating bugs, 50% of

which were found after supposedly high-coverage simulation.

The presenter said simulation was "necessary but not sufficient."

---- ---- ---- ---- ---- ---- ----

- SAMSUNG Joanna Zhang showed how they used formal verification to devise

an automated flow to verify a CPU interrupt scheme consisting of 200

interrupt lines. Instead of manually writing 200 assertions, the team

used Jasper's Visualize tool to generate waveforms for each interrupt.

The team then used the data in tabular form, together with a simple

script, to automatically generate the assertions. The assertions

detected incorrect reset values and missing synchronizers. This took

as long as using manually-written assertions, but will deliver time

and effort savings in its future reuse on modified and new RTL code.

---- ---- ---- ---- ---- ---- ----

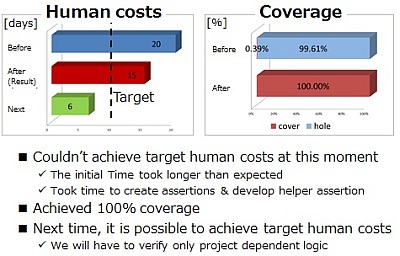

- SONY Kenta Ono described how they applied Jasper formal to verify a

complex timing generation block, parts of which are reused in multiple

designs. Formal got 100% functional coverage with a 25% cut in human

effort vs. simulation, which never hit the required coverage.

The team then used the data in tabular form, together with a simple

script, to automatically generate the assertions. The assertions

detected incorrect reset values and missing synchronizers. This took

as long as using manually-written assertions, but will deliver time

and effort savings in its future reuse on modified and new RTL code.

---- ---- ---- ---- ---- ---- ----

- SONY Kenta Ono described how they applied Jasper formal to verify a

complex timing generation block, parts of which are reused in multiple

designs. Formal got 100% functional coverage with a 25% cut in human

effort vs. simulation, which never hit the required coverage.

The team expects more reductions in human effort because the re-usable

parts of the design can now be deployed without further verification.

They were a relative newcomer to formal verification.

---- ---- ---- ---- ---- ---- ----

- STMICRO: This case study (presented by a stand-in from Jasper) showed

how they used Jasper's Low Power Verification App to verify interface

slices and signals on an ARM-based quad-core CPU sub-system with

multiple power domains. Power verification started a few weeks from

tapeout, so they used existing assertion-based verification IP from

Jasper - Intelligent Proof Kits for ACE, AX13, APB and ATB. They also

used Jasper's Power Sequencer and Scoreboard proof accelerators.

Exhaustive verification identified problems with some clamp values in

the first hour of the first verification run. The team went on to

verify the low power aspects of ACE, AX13, APB and ATB interface slices

for protocol correctness and data integrity in all valid power states.

The team -- whose members were relatively new to formal methods -- said

that environment setup was fast, and that the reuse of power-aware

properties for future projects/derivatives would be easy.

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

My take-away: The two days gave me a good overview of the breadth and depth

of the use of Jasper formal. The meeting was highly interactive, and the

exchange of important "how to" information with fellow users and Jasper R&D

spanned the whole range of functional verification challenges.

For me, JUG is definitely a "mark your calendar" event. Although it would

have also been a good introduction to formal on its own, it also helped that

I had participated in a 1 month formal eval just two months before. Even

with my limited eval experience I was able to ask additional questions and

the Jasper AEs helped by carrying me to additional points of interest.

Over the next year I would like to eval IP-XACT and the Security Apps that

Jasper has. From the birds-of-a-feather discussion on IP-XACT, I saw how

others used the tool to improve register verification and documentation.

From their Security App demo, I got an idea how it might help verify

datapath security.

- Mark Wallace

Cavium, Inc. San Jose, CA

The team expects more reductions in human effort because the re-usable

parts of the design can now be deployed without further verification.

They were a relative newcomer to formal verification.

---- ---- ---- ---- ---- ---- ----

- STMICRO: This case study (presented by a stand-in from Jasper) showed

how they used Jasper's Low Power Verification App to verify interface

slices and signals on an ARM-based quad-core CPU sub-system with

multiple power domains. Power verification started a few weeks from

tapeout, so they used existing assertion-based verification IP from

Jasper - Intelligent Proof Kits for ACE, AX13, APB and ATB. They also

used Jasper's Power Sequencer and Scoreboard proof accelerators.

Exhaustive verification identified problems with some clamp values in

the first hour of the first verification run. The team went on to

verify the low power aspects of ACE, AX13, APB and ATB interface slices

for protocol correctness and data integrity in all valid power states.

The team -- whose members were relatively new to formal methods -- said

that environment setup was fast, and that the reuse of power-aware

properties for future projects/derivatives would be easy.

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

My take-away: The two days gave me a good overview of the breadth and depth

of the use of Jasper formal. The meeting was highly interactive, and the

exchange of important "how to" information with fellow users and Jasper R&D

spanned the whole range of functional verification challenges.

For me, JUG is definitely a "mark your calendar" event. Although it would

have also been a good introduction to formal on its own, it also helped that

I had participated in a 1 month formal eval just two months before. Even

with my limited eval experience I was able to ask additional questions and

the Jasper AEs helped by carrying me to additional points of interest.

Over the next year I would like to eval IP-XACT and the Security Apps that

Jasper has. From the birds-of-a-feather discussion on IP-XACT, I saw how

others used the tool to improve register verification and documentation.

From their Security App demo, I got an idea how it might help verify

datapath security.

- Mark Wallace

Cavium, Inc. San Jose, CA

Join

Index

Next->Item

|

|