( ESNUG 524 Item 3 ) ---------------------------------------------- [05/16/13]

Subject: Jasper case study on formally verifying secure on-chip datapaths

Highly secure NoCs allow firewalls in the network fabric so that certain

masters can neither "read" nor "write" to certain slaves. They allow you

to set up private secure regions that applications can or cannot write to.

Security can be put in place to ensure that specific regions of memory

are only accessed by authorized processes. Signaling must be reconciled

across differing interfaces, and corrective actions coordinated across

multiple cores.

One can have a NoC with a proprietary security scheme, or use the widely

accepted ARM 'TrustZone', where you can define a secure address region.

By supporting ARM's TrustZone, a commercial NoC can provide secure zones

for applications such as: secured PIN entry, anti-malware, digital rights

management, SW license management, access control of cloud-based docs.

- Jim Hogan, Vista Ventures, LLC

http://www.deepchip.com/items/0511-04.html

From: [ Ziyad Hanna of Jasper DA ]

Hi, John,

Back in ESNUG 511, Jim Hogan did a detailed analysis of On-Chip Networks and

NoCs. In that write-up, Jim made only a cursory mention of the security of

on-chip networks.

What I'd like to do is delve more into securing datapaths on your chip, and

the problem of knowing whether-or-not your datapaths are ACTUALLY secure.

ON-CHIP SECURITY NOW

Currently for HW design, there are three major on-chip security approaches:

1. ARM TrustZone is a system-wide approach to security on mobile

computing used for secure payment, digital rights management

(DRM), enterprise, and web-based services.

2. Intel Trusted Execution Technology (Intel TXT) is HW-based

security for mostly PC physical and virtual infrastructures.

3. A number of other chip design houses have come up with their

own proprietary on-chip datapath security schemes.

Encryption algorithms and techniques for securing on-chip data are an active

research topics in academia and on industrial fronts. Often, researchers

will use commercial formal property tools to check the correctness of their

algorithms. However, the big question that comes up is exactly where to

store such on-chip encrypted data (in the software or the hardware) and how

to verify the access control. Recently, we have seen a trend toward storing

secure data in hardware in the form of embedded microcode or in the actual

silicon implementation itself. The aim being to make it much harder for

attackers to have access to sensitive data.

Despite these approaches, we still see successful break-ins on secure data.

The problem is that these on-chip secure data systems are NOT fully NOR

formally verified in order to 100% know that they have no "holes".

THE VERIFICATION PROBLEM

Current methodologies use an "ad hoc" approach to security verification that

is part of design, IP integration, system validation, and SW development.

The main method of verification is through simulation with a focus on trying

to enumerate all of the possible security leakage scenarios and simulating

them. While this approach is scalable with simulation capacity, it is not

exhaustive -- thus many scenarios are left unchecked -- which means there

are unchecked pathways for attackers to use.

Using formal techniques is a natural way to increase the overall confidence

in the security of your chip. However, the major focus we see continues to

rely on verifying ONLY the correctness of encryption algorithms and their

implementation in software and hardware -- NOT the whole security process.

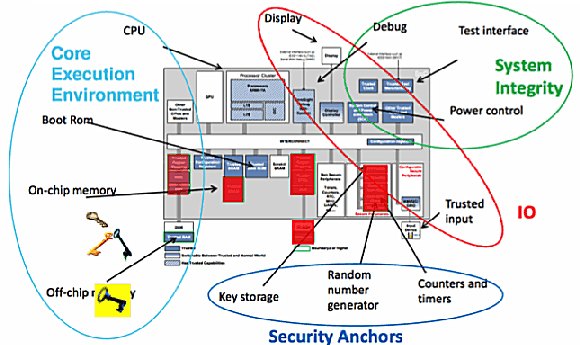

ARM Trust Based System Architecture

In a typical chip, you can identify various security anchors, such as:

- key storage,

- random number generators,

- counters and timers, and

- various security data storage elements.

The big question is, "is it possible to access our sensitive information

from outside the pin interface (or external access points) to the system?"

In other words:

1. Is it possible to read any security related data from a

certain location A to location B through some control C?

2. Is it possible to modify any security related data?

3. Are there failure modes that would compromise the safety

of any security related data?

We created our Jasper Secure Path Verification App (SPV) to just answer such

practical questions.

UNINTENDED DATAPATH HOLES

The main reason why Secure Datapath Verification is so messy is because most

chips today consist of 10's (to maybe ~100) IP blocks -- which creates a

mishmash of 100 (to maybe ~100,000) different possible data transfers.

In addition, test/scan logic can add unexpected paths where secure data can

be leaked out of a chip.

ARM Trust Based System Architecture

In a typical chip, you can identify various security anchors, such as:

- key storage,

- random number generators,

- counters and timers, and

- various security data storage elements.

The big question is, "is it possible to access our sensitive information

from outside the pin interface (or external access points) to the system?"

In other words:

1. Is it possible to read any security related data from a

certain location A to location B through some control C?

2. Is it possible to modify any security related data?

3. Are there failure modes that would compromise the safety

of any security related data?

We created our Jasper Secure Path Verification App (SPV) to just answer such

practical questions.

UNINTENDED DATAPATH HOLES

The main reason why Secure Datapath Verification is so messy is because most

chips today consist of 10's (to maybe ~100) IP blocks -- which creates a

mishmash of 100 (to maybe ~100,000) different possible data transfers.

In addition, test/scan logic can add unexpected paths where secure data can

be leaked out of a chip.

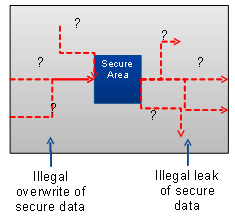

To contain the secure datapath verification problem, system architects will:

- isolate secure areas (the red areas in the above drawing),

- analyze the data flow of the whole system,

- manually identify possible leakage paths by observing, and

- adding blocking conditions to block these leaks.

On the other hand, system integration engineers analyze the structural paths

and try to convince themselves that they are false paths.

No doubt, this is a tedious "ad hoc" methodology that can work on a small

subset of traces, but it is difficult to get a real sense of completeness.

SECURE DATAPATH SPECIFICATION

Specifying whether there is a legitimate path from location A to location B

in your Verilog/VHDL RTL is not straightforward using standard specification

languages like SVA. Users need to add some glue logic and many assumptions

to constrain the search for such a path.

One way to do this is by:

- disconnecting signal A from its driving logic,

- constrain signal A to a stable value of A_unique,

- ensure other sources of data can NOT drive A_unique, and

- then check whether target signal B can eventually

receive the value A_unique.

If so, then there is a logical path from signal A to signal B; otherwise, no

functional path exists.

Notice that the number of instances of the assumption

assume (other_source_of_data1 != A_unique)

can be large depending how big the cone of influence on target signal B is.

It's possible to use this approach, but it's clearly not a straightforward

method. It requires the user to add the glue logic and enumerate all the

assumptions; therefore, it's error prone. Imagine the enormity of the task

if the user wants to check many paths. That requires much more logic and

more added assumptions.

Another important deficiency of this method is that if your data mutates

(for example, is inverted or split into smaller chunks) between A and B,

this approach will miss a path.

With our Jasper SPV App, the user simply specifies the path property:

assert -no_path -from A -to B

This simpler method hides the complexity of the modeling details, so the

user does not need to develop nor maintain the model. We extended the

semantics of our security path verification to find path conditions that

force the paths to visit or skip certain locations in the design.

Checking from two points is used in two complementary ways:

- is there is a datapath from a non-secure area to a secure area?

- is there is a datapath from a secure area to a non-secure area?

Illegal datapaths to a secure area can introduce vulnerabilities in which

non-secure data is mistaken as secure, while illegal datapaths from a

secure area can leak data to the hackers. With the Jasper SPV App, the

user can specify the datapaths and then verify them formally.

HOW THIS FORMAL VERIFICATION STUFF WORKS

A simple verification approach is to apply structural analysis to traverse

the cone of influence of the target signal B backwards and determine whether

the traversal can reach signal A. If the traversal does not reach signal A,

it is guaranteed that there is no path from A to B, and this is trivially

a false path.

However, if the traversal reaches signal A, there may or may not be a

functional path. This depends on the logical behavior of the design and

the assumptions involved.

The Jasper SPV App applies the structural analysis as a simple pre-process

method to rule out false paths.

---- ---- ---- ---- ---- ---- ----

Simulation is another conventional method used to validate security paths.

It is usually based on inserting X values at the source of the paths and

checking whether the destination signals can receive the X value. This

method is not complete because it can show the paths, but it cannot verify

their absence. In addition, simulation methods suffer from X optimism and

X pessimism, which can cause generation and suppression of X values during

simulation.

And as a result, false paths are detected and true paths are missed.

---- ---- ---- ---- ---- ---- ----

Applying a standard formal property verification approach may be effective,

but it suffers from usability issues -- and that is because of the need to

add assumptions to force a desired path to be from a desired point.

Another issue is correctness, and that is because paths may be missed in

cases where the data is mutated between source and destination.

---- ---- ---- ---- ---- ---- ----

Jasper's approach does not have the limitations/issues of the above methods.

It is based on the "path sensitization" technology we developed specifically

for secure datapath verification problems.

We introduce a new type of property with the format

assert -no_path -from A -to B

that checks if there's a functional path from source signal A to destination

signal B. By proving this property, the formal engines inject a unique tag

called "taint" on A and check whether this unique tag can ever appear at B.

If the property is formally proven, it means that it's impossible to expose

data from A on B under the current set of constraints.

On the other hand, if the tool is able to make the property fail, a counter-

example will show how data from A can be exposed on B.

BLACK BOXING

Checking assertions on big SoC designs can be a challenging proof because of

the complexity of the preconditions at A and B and the deep levels/complex

nature of the logic. Obviously, it is possible to safely black box some of

the modules that are not in the desired paths -- however, if such modules

can interfere with the paths, then black boxing is not a valid approach.

To contain the secure datapath verification problem, system architects will:

- isolate secure areas (the red areas in the above drawing),

- analyze the data flow of the whole system,

- manually identify possible leakage paths by observing, and

- adding blocking conditions to block these leaks.

On the other hand, system integration engineers analyze the structural paths

and try to convince themselves that they are false paths.

No doubt, this is a tedious "ad hoc" methodology that can work on a small

subset of traces, but it is difficult to get a real sense of completeness.

SECURE DATAPATH SPECIFICATION

Specifying whether there is a legitimate path from location A to location B

in your Verilog/VHDL RTL is not straightforward using standard specification

languages like SVA. Users need to add some glue logic and many assumptions

to constrain the search for such a path.

One way to do this is by:

- disconnecting signal A from its driving logic,

- constrain signal A to a stable value of A_unique,

- ensure other sources of data can NOT drive A_unique, and

- then check whether target signal B can eventually

receive the value A_unique.

If so, then there is a logical path from signal A to signal B; otherwise, no

functional path exists.

Notice that the number of instances of the assumption

assume (other_source_of_data1 != A_unique)

can be large depending how big the cone of influence on target signal B is.

It's possible to use this approach, but it's clearly not a straightforward

method. It requires the user to add the glue logic and enumerate all the

assumptions; therefore, it's error prone. Imagine the enormity of the task

if the user wants to check many paths. That requires much more logic and

more added assumptions.

Another important deficiency of this method is that if your data mutates

(for example, is inverted or split into smaller chunks) between A and B,

this approach will miss a path.

With our Jasper SPV App, the user simply specifies the path property:

assert -no_path -from A -to B

This simpler method hides the complexity of the modeling details, so the

user does not need to develop nor maintain the model. We extended the

semantics of our security path verification to find path conditions that

force the paths to visit or skip certain locations in the design.

Checking from two points is used in two complementary ways:

- is there is a datapath from a non-secure area to a secure area?

- is there is a datapath from a secure area to a non-secure area?

Illegal datapaths to a secure area can introduce vulnerabilities in which

non-secure data is mistaken as secure, while illegal datapaths from a

secure area can leak data to the hackers. With the Jasper SPV App, the

user can specify the datapaths and then verify them formally.

HOW THIS FORMAL VERIFICATION STUFF WORKS

A simple verification approach is to apply structural analysis to traverse

the cone of influence of the target signal B backwards and determine whether

the traversal can reach signal A. If the traversal does not reach signal A,

it is guaranteed that there is no path from A to B, and this is trivially

a false path.

However, if the traversal reaches signal A, there may or may not be a

functional path. This depends on the logical behavior of the design and

the assumptions involved.

The Jasper SPV App applies the structural analysis as a simple pre-process

method to rule out false paths.

---- ---- ---- ---- ---- ---- ----

Simulation is another conventional method used to validate security paths.

It is usually based on inserting X values at the source of the paths and

checking whether the destination signals can receive the X value. This

method is not complete because it can show the paths, but it cannot verify

their absence. In addition, simulation methods suffer from X optimism and

X pessimism, which can cause generation and suppression of X values during

simulation.

And as a result, false paths are detected and true paths are missed.

---- ---- ---- ---- ---- ---- ----

Applying a standard formal property verification approach may be effective,

but it suffers from usability issues -- and that is because of the need to

add assumptions to force a desired path to be from a desired point.

Another issue is correctness, and that is because paths may be missed in

cases where the data is mutated between source and destination.

---- ---- ---- ---- ---- ---- ----

Jasper's approach does not have the limitations/issues of the above methods.

It is based on the "path sensitization" technology we developed specifically

for secure datapath verification problems.

We introduce a new type of property with the format

assert -no_path -from A -to B

that checks if there's a functional path from source signal A to destination

signal B. By proving this property, the formal engines inject a unique tag

called "taint" on A and check whether this unique tag can ever appear at B.

If the property is formally proven, it means that it's impossible to expose

data from A on B under the current set of constraints.

On the other hand, if the tool is able to make the property fail, a counter-

example will show how data from A can be exposed on B.

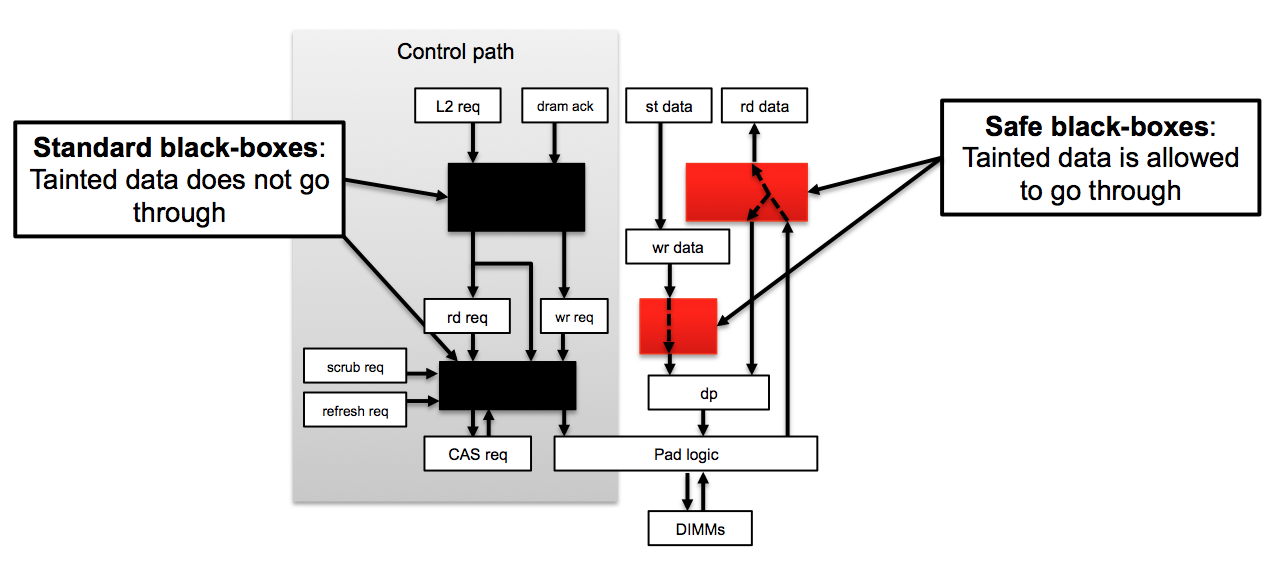

BLACK BOXING

Checking assertions on big SoC designs can be a challenging proof because of

the complexity of the preconditions at A and B and the deep levels/complex

nature of the logic. Obviously, it is possible to safely black box some of

the modules that are not in the desired paths -- however, if such modules

can interfere with the paths, then black boxing is not a valid approach.

To cope with these issues and for scalability reasons, Jasper SPV App adds a

concept of the "safe connectivity abstraction" to make sure that "tainted"

values still propagate in semi-black-boxed modules.

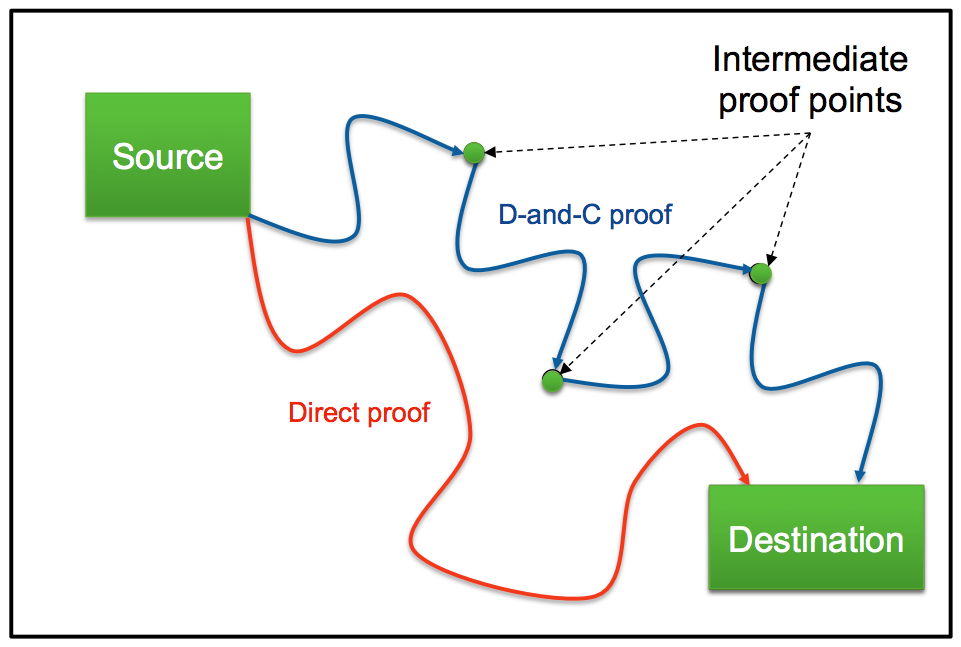

DIVIDE AND CONQUER

Another method used by the Jasper SPV App to cope with long search paths is

divide-and-conquer. With this approach, the tool searches for a functional

path between points A and B by searching for intermediate relevant points

and trying to construct a path through them.

To cope with these issues and for scalability reasons, Jasper SPV App adds a

concept of the "safe connectivity abstraction" to make sure that "tainted"

values still propagate in semi-black-boxed modules.

DIVIDE AND CONQUER

Another method used by the Jasper SPV App to cope with long search paths is

divide-and-conquer. With this approach, the tool searches for a functional

path between points A and B by searching for intermediate relevant points

and trying to construct a path through them.

SPV App is built on JasperGold -- it leverages its modeling, optimization,

proof engines, and debugging features. We're happy to say with D-and-C,

it scales nicely to large designs.

JASPER SPV VS. FORMAL PROPERTY VERIFICATION

Secure Path Verification is essentially different from the formal property

verification approach.

In security verification, we check whether there are possible paths outside

the normal specification scope. Basically, we mimic the hacker approach by

breaking the specification to see if it is possible to leak secure data via

unexpected paths.

On the other hand, with formal property verification, we check whether the

property is always correct under the constraints.

That said, in security verification, we sometime want to run in an under-

constrained environment (such as during reset) or without environmental

constraints or even with partial constraints.

If we prove there is no functional path without environmental constraints,

this is a much stronger result compared to a fully constrained environment.

The challenge is to cope with false negatives, which means that since the

SPV App is showing a path under a partial formal setup: "have we found a

security violation or not?"

Our JasperGold debugging capabilities (such as Visualize, what-if analysis,

dynamic constraints, and so forth) help users analyze the results of the

verification to feel confident in the results -- that is, be convinced that

such a path is legal or not.

A JASPER SPV CASE STUDY

In this case study, we show SPV App being used on a small example design.

SPV App is built on JasperGold -- it leverages its modeling, optimization,

proof engines, and debugging features. We're happy to say with D-and-C,

it scales nicely to large designs.

JASPER SPV VS. FORMAL PROPERTY VERIFICATION

Secure Path Verification is essentially different from the formal property

verification approach.

In security verification, we check whether there are possible paths outside

the normal specification scope. Basically, we mimic the hacker approach by

breaking the specification to see if it is possible to leak secure data via

unexpected paths.

On the other hand, with formal property verification, we check whether the

property is always correct under the constraints.

That said, in security verification, we sometime want to run in an under-

constrained environment (such as during reset) or without environmental

constraints or even with partial constraints.

If we prove there is no functional path without environmental constraints,

this is a much stronger result compared to a fully constrained environment.

The challenge is to cope with false negatives, which means that since the

SPV App is showing a path under a partial formal setup: "have we found a

security violation or not?"

Our JasperGold debugging capabilities (such as Visualize, what-if analysis,

dynamic constraints, and so forth) help users analyze the results of the

verification to feel confident in the results -- that is, be convinced that

such a path is legal or not.

A JASPER SPV CASE STUDY

In this case study, we show SPV App being used on a small example design.

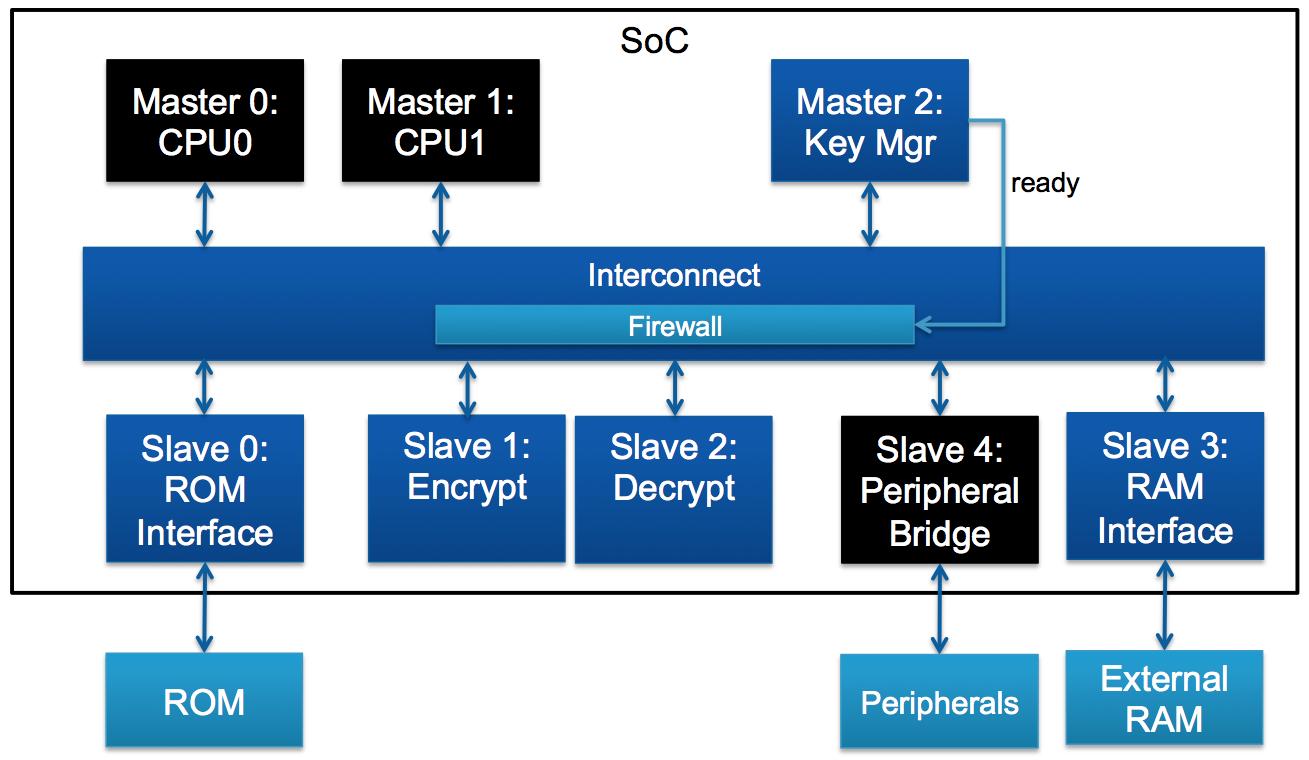

The masters of this network are the CPUs and a "Key Manager". The role of

the Key Manager is to read encryption/decryption keys from the ROM and

transport them to specific cryptographic slaves, which are in charge of

encryption/decryption operations. After completing this process, the FSM

inside Key Manager moves to a "ready" state, which unblocks communication

channels in the firewall through a side channel.

This network has several security requirements. Some are functional

requirements that deal with the proper operation of Key Manager, firewall,

and ROM interface. These can be described in SVA or PSL and are a good

fit for JasperGold Formal Property Verification (FPV) App.

However, this network also has data propagation and control propagation

requirements that specify which resources are visible (i.e. accessible)

by agents in this system under a specific configuration. As explained

earlier in this post, those are not a good fit for SVA or PSL properties,

but they are a good fit for JasperGold SPV App.

Usually, these propagation requirements can be divided into "leak" and

"illegal overwrite" checks. We will focus on five requirements, which we

expect will cover different areas where the tool can be applied.

1. No Security Leak: Secure data/control should never reach

non-secure areas

a. Keys should never reach CPUs without going through

encryption logic

b. Key Manager FSM state should never reach CPUs

2. No Illegal Overwrite: Non-secure data/control should never

reach secure areas

a. Slave's copy of key never be overwritten by CPUs

b. Slave's copy of key not come from non-secure ROM locations

c. Slave's key control information never be affected by CPUs

The above requirements will be verified by

assert -no_path properties

SPV App creates the properties when you use the command

check_spv -create

THE "NO SECURE LEAK" CHECK

We want to check that keys should never reach the CPUs without going

through encryption logic. Here we tell the tool to find a path between the

ROM interface and CPUs that does not go through encryption/decryption logic.

To ignore paths going through the cryptographic logic, we use the command

"stopat", which disconnects the logic driving the signal:

% stopat s1_encrypt.cypher_data ;# Disconnect encryption logic

% stopat s2_decrypt.cypher_data ;# Disconnect decryption logic

Now, we can create the main properties that will look for a path between the

ROM and the CPU:

% check_spv -create \

-name leak:KEY_to_CPU0 \

-from rom_data -from_precond \

{rom_read && (rom_addr == `KEY_ENC || rom_addr == `KEY_DEC)} \

-to m0_cpu.M_rdata -to_precond m0_cpu.M_req

% check_spv -create \

-name leak:KEY_to_CPU1 \

-from rom_data -from_precond \

{rom_read && (rom_addr == `KEY_ENC || rom_addr == `KEY_DEC)} \

-to m1_cpu.M_rdata -to_precond m1_cpu.M_req

Notice how the assertions are qualified with the switches -from_precond and

-to_precond. We use the switch -from_precond to direct the tool to search

only for paths coming from rom_data when an encryption key is being read

from the ROM.

As for -to_precond, we use it to restrict the search for scenarios in which

tainted data gets to the CPU when it is requesting data. When proving the

assertions, the tool finds a bug related to a write operation being done

to the ROM.

The masters of this network are the CPUs and a "Key Manager". The role of

the Key Manager is to read encryption/decryption keys from the ROM and

transport them to specific cryptographic slaves, which are in charge of

encryption/decryption operations. After completing this process, the FSM

inside Key Manager moves to a "ready" state, which unblocks communication

channels in the firewall through a side channel.

This network has several security requirements. Some are functional

requirements that deal with the proper operation of Key Manager, firewall,

and ROM interface. These can be described in SVA or PSL and are a good

fit for JasperGold Formal Property Verification (FPV) App.

However, this network also has data propagation and control propagation

requirements that specify which resources are visible (i.e. accessible)

by agents in this system under a specific configuration. As explained

earlier in this post, those are not a good fit for SVA or PSL properties,

but they are a good fit for JasperGold SPV App.

Usually, these propagation requirements can be divided into "leak" and

"illegal overwrite" checks. We will focus on five requirements, which we

expect will cover different areas where the tool can be applied.

1. No Security Leak: Secure data/control should never reach

non-secure areas

a. Keys should never reach CPUs without going through

encryption logic

b. Key Manager FSM state should never reach CPUs

2. No Illegal Overwrite: Non-secure data/control should never

reach secure areas

a. Slave's copy of key never be overwritten by CPUs

b. Slave's copy of key not come from non-secure ROM locations

c. Slave's key control information never be affected by CPUs

The above requirements will be verified by

assert -no_path properties

SPV App creates the properties when you use the command

check_spv -create

THE "NO SECURE LEAK" CHECK

We want to check that keys should never reach the CPUs without going

through encryption logic. Here we tell the tool to find a path between the

ROM interface and CPUs that does not go through encryption/decryption logic.

To ignore paths going through the cryptographic logic, we use the command

"stopat", which disconnects the logic driving the signal:

% stopat s1_encrypt.cypher_data ;# Disconnect encryption logic

% stopat s2_decrypt.cypher_data ;# Disconnect decryption logic

Now, we can create the main properties that will look for a path between the

ROM and the CPU:

% check_spv -create \

-name leak:KEY_to_CPU0 \

-from rom_data -from_precond \

{rom_read && (rom_addr == `KEY_ENC || rom_addr == `KEY_DEC)} \

-to m0_cpu.M_rdata -to_precond m0_cpu.M_req

% check_spv -create \

-name leak:KEY_to_CPU1 \

-from rom_data -from_precond \

{rom_read && (rom_addr == `KEY_ENC || rom_addr == `KEY_DEC)} \

-to m1_cpu.M_rdata -to_precond m1_cpu.M_req

Notice how the assertions are qualified with the switches -from_precond and

-to_precond. We use the switch -from_precond to direct the tool to search

only for paths coming from rom_data when an encryption key is being read

from the ROM.

As for -to_precond, we use it to restrict the search for scenarios in which

tainted data gets to the CPU when it is requesting data. When proving the

assertions, the tool finds a bug related to a write operation being done

to the ROM.

This counter-example shows how a piece of an encryption key (red highlights)

can get from the ROM to the CPU.

In this scenario, the CPU posts a "write" request to the ROM (orange), which

generates an "error" response (signal S_err/M_err asserted). However, the

data associated with this response is from the prior "read" operation done

to the ROM. The previous "read" operation (green) is to read an encryption

key, which was initiated by the Key Manager. Therefore, the data associated

with the error response going to the CPU is also a piece of the encryption

key, and that is a security hole.

- Ziyad Hanna

Jasper DA, Ltd. Haifa, Israel

This counter-example shows how a piece of an encryption key (red highlights)

can get from the ROM to the CPU.

In this scenario, the CPU posts a "write" request to the ROM (orange), which

generates an "error" response (signal S_err/M_err asserted). However, the

data associated with this response is from the prior "read" operation done

to the ROM. The previous "read" operation (green) is to read an encryption

key, which was initiated by the Key Manager. Therefore, the data associated

with the error response going to the CPU is also a piece of the encryption

key, and that is a security hole.

- Ziyad Hanna

Jasper DA, Ltd. Haifa, Israel

Join

Index

Next->Item

|

|