!!! "It's not a BUG,  /o o\ / it's a FEATURE!" (508) 429-4357

( > )

\ - / INDUSTRY GADFLY: "Prakash's not-so-secret DVcon'13 Report"

_] [_

by John Cooley

Holliston Poor Farm, P.O. Box 6222, Holliston, MA 01746-6222

---- ---- ---- ---- ---- ---- ----

Hi, John,

After the recent DVcon'13 in February in Santa Clara, the employees

inside Real Intent collectively got together and wrote this trip

report about the conference. I hope your DeepChip readers like it.

- Prakash Narain

Real Intent, Inc. Sunnyvale, CA

---- ---- ---- ---- ---- ---- ----

DVCON ATTENDANCE NUMBERS

2006 :################################ 650

2007 :################################### 707

2008 :######################################## 802

2009 :################################ 665

2010 :############################## 625

2011 :##################################### 749

2012 :########################################## 834

2013 :############################################ 883

DVcon saw the same drop after 2008 that many other conferences saw in

reaction to the downturn in the world economy. The interesting thing is it

recovered fairly quickly -- by 2011 -- and has since grown to 883 attendees

in 2013.

---- ---- ---- ---- ---- ---- ----

WALLY'S KEYNOTE ADDRESS

As usual, Wally gave an informative keynote address full of data.

Starting with the state of verification today, Wally stated that for both

2010 and 2012, surveys show that verification consumes 56% of (mean) project

time, with a peak of respondents landing in the 60-70% bucket. He concluded

verification has not been keeping up with growing design complexity. He

showed the dramatic 75% growth in the number of verification engineers so

there is now a 1-to-1 match with the number of designers on a chip.

Wally drew the humorous conclusion that if this 75% growth rate continues,

we will need everyone in India(!) to do verification.

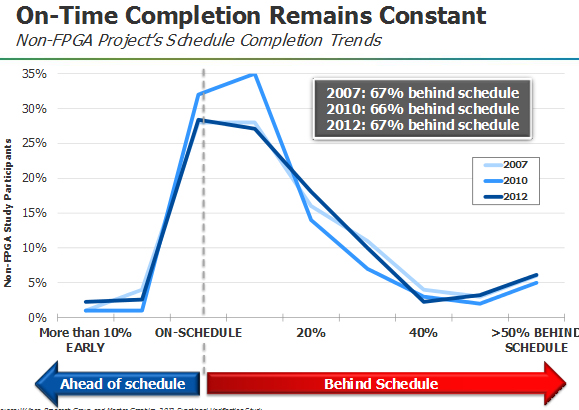

He then went on to state that projects finished behind schedule a constant

67% of the time from 2007 to 2012. Wally showed the trend that 80% of designs

contain an embedded processor, the number used per design grows

year-over-year, and on average 2.7 embedded processors are used in designs

greater than 20 M gates.

Wally then identified several important trends in verification methodologies.

1. The first trend is that the industry is converging on the System

Verilog language and its built-in test capabilities.

For designs over 20 M gates, 89% are using System Verilog. By

standardizing on the Universal Verification Methodology (UVM) from

Accellera, testbench automation is making a dramatic change, with

almost 500% growth since 2010, and a further 46% growth in adoption

is expected in 2013.

2. The second trend is the standardization of a proven verification flow

for an SOC and the end of ad-hoc approaches. Wally claimed that even

with best-in-class tools, an ad-hoc process could result in a cost

increase of 6-9%. By introducing a good process, the cost of the flow

actually decreases by 20-30%.

Wally laid out four specific checking stages that went from the

- unit-level,

- through connectivity checking,

- IP integration and data-path checking,

- and then system-level checks.

This is a known good process that many companies have implemented.

(To me, Wally's good process is practicing "Early Verification" with

gradual deployment of more expensive technologies. It's best to make

extensive use of tools with a low cost of bug detection and high bug

coverage. An obvious example is using lint tools, which have the best

cost-to-bug-detection ratio and very high coverage.)

(The value of best-in-class tools was well underscored. I think this

will be a good time for your readers to call their local EDA sales guy

to get the best-in-class tools for SOC verification. :)

3. "How do you know you are making progress?" was Wally's lead-in to his

Trend #3 which is the measuring/analysis of verification coverage and

power issues at all levels of the design hierarchy.

"What gets measured, gets done.

What gets measured, gets improved.

What gets measured, gets managed."

- Peter Drucker, American management consultant (1909 - 2005)

He celebrated the new Unified Coverage Interoperability Standard from

Accellera which allows all engines in the verification tool chain to

share data and avoid duplication of effort. "Why verify something that

has already been covered?"

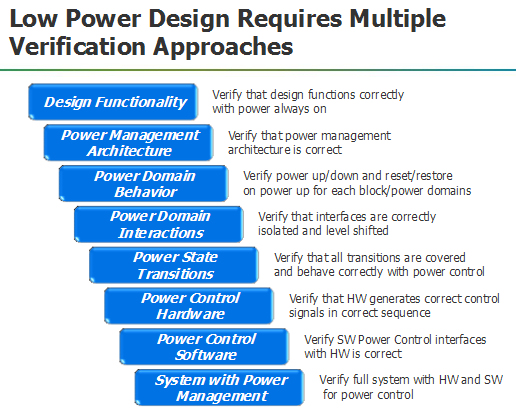

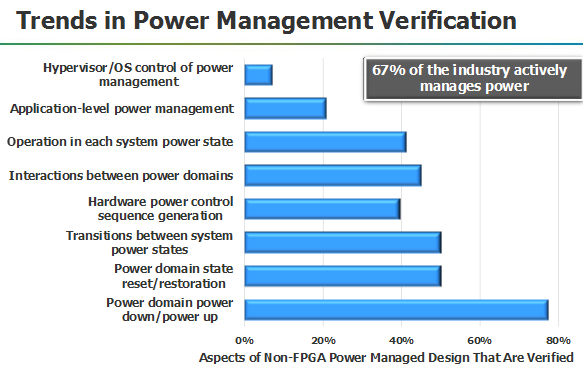

Wally then showed 8 different power management and verification issues

and then noted that 67% of the design industry is actively managing

power in their chips.

4. Wally next shared his vision on how "macro enablers in verification"

will deliver orders of magnitude improvement. His enablers were:

- the intelligent test bench,

- multi-engine verification platforms, and

- application specific formal.

The Intelligent Test Bench (with MENT's InFact tool as illustration)

delivers 10X faster code coverage vs. industry standard "constrained

random" testing and did it 10X faster than other methods. He saw the

promise of further speed-ups for software-driven verification at the

intersection of simulation and emulation when system bring-up is done.

With Multi-Engine Verification Platforms, the use of emulation is

growing rapidly with 57% of chips using emulation for design sizes

over 20 M gates. Using an integrated simulation/emulation/software

verification environment gives you the ability to combine testbench

acceleration with protocol, software, and system debug. (This can be

a powerful way to debug SOCs but the cost will make it tenable only

for the largest designs.)

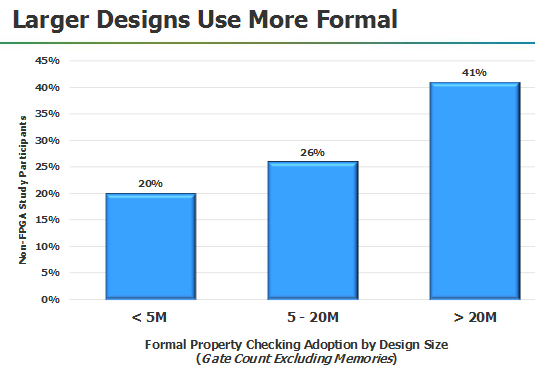

Wally's third enabler, Application-Specific Formal, also happens to

be our domain of expertise at Real Intent. He said originally formal

property verification was tough to use, and required a Ph.D. and a

very sophisticated designer. What happened in the last decade is that

people identified specific tasks that were narrow enough that you

could develop formal methods that would automate that portion of the

verification task. Fully automatic formal is a reality for a lot of

companies and in many cases push-button formal where you don't have

to be an expert. If this change didn't happen, we'd have a serious

problem doing all the verification we have to do. And some things

really can't be effectively verified without formal methods.

Wally showed that 41% of designs over 20 M gates use formal of some sort.

Wally then went on to identify four examples of push-button formal:

- identify deadlock and other FSM bugs

- identify unreachable code to improve simulation coverage

- identify SOC IP connectivity issues and

- identify the propagation of Xs (unknowns) at the RTL

He then talked about how clock domain crossing (CDC) verification can NOT be

done with any RTL simulation, so an application-specific formal tool is

essential here. The trend shows that designs over 20 M gates use 11+ clock

domains. He concluded that full-chip CDC verification is available today

and that this has been made possible by formal methods.

PRAKASH'S ANALYSIS OF WALLY'S KEYNOTE

I agree with Wally that application-specific tools will provide considerable

time and work savings for both design and verification teams.

However, I wish to embellish Wally's discussion of "Application-Specific

Solutions as Formal" with his previously stated "Good Process" principle.

Application-specific solutions are multi-step solutions that need to employ

best suited techniques at each step. Typically, formal methods form a key

part of the solution but in many applications, like CDC, they are only

effectively deployed in later stages.

We find that designers can quickly harden their designs using tools that,

"under the hood", combine the right mix of structural, formal, and dynamic

methods that are tailored specifically to a defined problem area.

Designers do NOT need to spend valuable time customizing their verification

tools to match a problem area.

Finally, according to the Real Intent 2012 DAC survey which was published

on DeepChip (DAC'12 #6), we found that 32% of new designs will use 50 or

more clock domains.

A captivating speaker, Wally delivered his message well:

With the growing familiarity with the SOC design methodology, where the next

SOC uses an established architecture but offers an expanding feature set,

teams can employ methods that focus on each of the verification stages from

unit-level to full-chip, deliver 10x to 100x improvements, and use

application-specific techniques. This accelerating EDA innovation will keep

verification in bounds and achieve working SOCs.

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

DESIGN VS. VERIFICATION PANEL

The main DVcon panel "Where Does Design End and Verification Begin?", had:

- John Goodenough of ARM

- Harry Foster of Mentor

- Oren Katzir of Intel

- Gary Smith of Gary Smith EDA

- Pranav Ashar of Real Intent

Brian Hunter from Cavium was the moderator and asked many questions during

the 90-minute discussion. A number of the questions and remarks related to

Wally's prior keynote address.

Q. What do designers need to do to contribute to the verification process?

Ashar cited two things: clearer articulation of the designer's intent

in executable form, and doing as much design hardening as possible at

the RTL, pre-simulation, using the wide variety of tools available to

analyze the design, before handing it over to the verification team.

Ashar pointed to the application-specific verification as was mentioned

by Wally Rhines in his keynote, and specifically CDC.

Q. Are we seeing the adoption of static verification such as formal? In

the past, formal has over-promised and under-delivered?

Smith said that formal tools are primarily finding their home as

verification apps. "Designers are now using what were verification

team tools. In its broader sense, the top teams are using formal

as a design tool for some of the harder problems."

Hunter then commented that at Cavium their SOC designs are highly

configurable and that the use of formal has been an encumbrance and

is hard to use with their designs.

Q. What is the sweet spot for formal?

Ashar said today we are looking for solutions and not technologies.

"Solutions for CDC, X-management, power, and early functional

verification for easy errors. Formal is just one part. There are

a collection of techniques now used. An SOC today is a collection

of IP. The reference design is pretty much the same with the same

kinds of components. Verification is now at the interfaces and not

so much at the IP. You know the problem you are trying to solve

with an implicit spec that aids in the verification."

Foster said formal required a lot of sophistication in specification

in the past. "The beauty of automatic applications is that the user

does not have the burden of creating the properties."

Katzir said designers will not run a formal tool. But they will run

a CDC tool and get a report of actionable items. "And this is a

designer's tool not verification engineers."

Q. Dr. Goodenough, you are using formal at ARM?

Goodenough replied that formal is an experiment that has been running

for 15 years (chuckles on the panel). "In the last 2 to 3 years, it

has gained traction as the tools mature. Practical work flows are

starting to appear in a number of areas. We clearly segment our use

of formal. We have developed an internal acronym - AHA. That is

using formal for Avoidance and that is where it crosses over into the

design side. Designers understand and can reason about the design.

If they use formal to replace or alongside their mini-testbench that

leads to a more structured design, a design populated with assertions

and where the interfaces are much more cleanly documented...

Using pre-packaged flows supports good collaboration between designers

and verification engineers. There we are using formal as Hunting ('H'

in AHA). The assertions have been primarily authored by the designers,

but you are giving them a set of assertions that cover 80% of the design

and can be extended to 90%.

The jury is still out on the Absence ('A' in AHA). You are trying to

do some end-to-end formal proofs to show that deadlock or livelock or

data corruption is not a possibility. We have successes in informing

designers about things they didn't know about... You end with a lot

of spec clarifications instead of complete 100% proven assertions...

In all those areas there has been significant progress but there is

still a lot to go. The static formal engine is finding its home in

those 3 workflows and methodologies."

Q. Mr. Katzir, at Intel, do you have the designers doing verification?

Katzir replied that 70% to 80% of the designer's work is verification.

This includes formal tools, structural tools, and running simulations;

the verification team focuses on building the verification environment,

stitching together the verification IPs and enabling the designers to

do the verification. "That is just a fact of life."

Q. Are verification engineers skilled at breaking a design because they

do not know it?

Katzir said yes. "In an SOC environment where everything is an IP, each

unit comes with its verification IP (VIP). The knowledge of what this

IP is supposed to do is in the VIP, which enables the task of the

verification team. The design team still runs the scenarios. The trend

of SOC, which integrates different IP, calls for more standardization

and working with different languages in the same environment.

At Intel, for all the register configuration, fuses, TAP registers, we

are using System RDL. It abstracts for us those descriptions, and

captures all the specifications. From System RDL, we generate all the

different formats. That has been a major improvement for us.

Coverage is another aspect. If I can get, and often do get, coverage

database for those IPs this is helpful. Whatever tool was used,

structural, formal, or simulation, I can build up my SOC coverage much

faster. This is missing frequently in structural and formal tools.

Unless they are generating assertions that we can write coverage

statements on them, we have to guess.

If I can catch those assigned executions, block-enable, undriven logic,

and put them in my coverage database, say using UCIS, then I don't

have to repeat those tests. That is a major gap we see right now.

I do agree with Gary that these apps are the sweet spots for formal

verification: CDC, X-propagation, low-power, DFT."
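Katzir's point about not repeating tests for items that are already in the coverage database can be pictured with a toy sketch. This is only an illustration in Python: the item names, the engine names, and the `merge_coverage` and `remaining_targets` helpers are hypothetical, and nothing here is the UCIS API or any vendor's database format.

```python
from typing import Dict, Set


def merge_coverage(per_engine: Dict[str, Set[str]]) -> Set[str]:
    """Return every coverage item hit by at least one engine."""
    merged: Set[str] = set()
    for items in per_engine.values():
        merged |= items
    return merged


def remaining_targets(plan: Set[str], per_engine: Dict[str, Set[str]]) -> Set[str]:
    """Return the plan items that no engine has covered yet."""
    return plan - merge_coverage(per_engine)


# Hypothetical SOC-level plan and per-engine results (made-up names).
plan = {"fifo_full", "fifo_empty", "undriven_logic", "block_enable", "reset_seq"}
per_engine = {
    "structural": {"undriven_logic"},
    "formal": {"block_enable", "fifo_full"},
    "simulation": {"fifo_full", "reset_seq"},
}

todo = remaining_targets(plan, per_engine)
print(sorted(todo))  # ['fifo_empty']
```

With the results pooled, only `fifo_empty` still needs a test; everything else was already covered by some engine.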

Q. Applying formal too early just shows that your design is incomplete.

Too late and you perhaps have wasted simulation cycles? When is

the right point to do formal?

Goodenough repeated Brian's view. "I am struggling with finding some

objective metrics that tell me when the right time to run it is. It

seems to be up to the preference of the designer for the order of

attack. There frankly isn't a right or wrong answer. Running formal

early in some of the bring-up activities, we have seen value in

pulling bug curves forward in time, because the design is a little

better structured before it goes to bulk simulation. Beyond that,

I have no data."

Foster added: "I agree formal applications can be run early, such as

X-propagation which is an easy one to clean out those types of bugs.

This problem of when is not unique to formal. We have the same issue

with coverage and simulation. You don't want to gather coverage data

too soon; otherwise you have to toss all that data out."

Ashar added that some areas are not suitable for simulation, such

as CDC and complicated corner-case control checking; static analysis

and formal are good for those problems. "You have to use

holistic techniques to find these problems and the timing is not so

much a matter of early or late. With X-propagation static analysis,

it improves simulation productivity. By doing the X-optimism and

X-pessimism mitigation in your RTL you improve the productivity of

the verification engineer."

Q. We are asking designers to do the RTL, synthesis and the timing. We

want them to do lint, code coverage, CDC, X-verification. What else?

Goodenough said designers can do Design for Verification: design

practices like avoiding messy interfaces and instrumenting your design.

Then there is Design to Help Verification, such as instrumentation to

help integration-level debug -- which becomes key. The critical path

is a system-level bug seen on an emulator running late in a project

and the team is scratching their heads. What you need is a way for

the IP designer to look at the design and the guy running an advanced

application payload to look at the same design running and each can

see their view of it and figure it out together.

"We emphasize putting together debug environments with different levels

of visibility. The designers can do a lot to instrument the design to

provide 'finger-pointing' IP or assertions: It collapses the time to

not solve the bug, but triage where the problem is. Is it located in

the software, in the testbench, in the IP block, in the way the IP has

been integrated. This is often the critical path on our projects

and in our customer's SOCs."

Katzir said there are gaps in tools and environments. The triage of

where the problem is located takes days and days of time. Most

debuggers are not handling System Verilog testbenches in a good way.

Regarding the mix of design hardware and running software, most of the

debug and verification is done in completely different teams, tools,

and environments.

There is a big gap in terms of the linkage of the technology and tools.

Something we are looking at is how you link the high-level specification

to the design code you are debugging. If you can see how a transaction

is failing in your high-level modeling and how it is linked to the

specific checker that failed, it can save valuable time.

Q. We seem to be asking designers to look beyond their RTL and take on more

knowledge of the software. At Cavium, when we have a simulation that

fails it usually is a problem in the SW environment. Are we asking

designers to triage that problem and find it in that SW environment?

Goodenough said no. "You ask the designer to help the guy running that

SW environment and tell him the problem is or is not in the IP.

Assertions allow you to do online documentation for your IP in a

simulation or emulation environment.

If you view them that way, my responsibility as a designer is to provide

an executable data book and that you're not doing anything dumb.

If your FIFO can only take 5 transactions and 6 have been fired at it,

tell them that it happened. Don't make them mine down through waveforms

to find that. You're asking designers to be aware of the SW integration

environments that their IP is going into.

And you need to give methodologies on how to instrument their designs."
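Goodenough's FIFO example can be sketched as a small software model. This is a Python illustration only, not an SVA assertion and not anything ARM showed; the `CheckedFifo` and `FifoOverflow` names are hypothetical, and the depth of 5 is taken from his example.

```python
from collections import deque


class FifoOverflow(Exception):
    """Raised when more transactions are pushed than the FIFO can hold."""


class CheckedFifo:
    """Toy FIFO model with a built-in overflow check (hypothetical names)."""

    def __init__(self, depth: int = 5) -> None:
        self.depth = depth
        self.items = deque()

    def push(self, txn) -> None:
        # The "assertion": report the violation at the point where it happens,
        # instead of leaving the user to dig through waveforms later.
        if len(self.items) >= self.depth:
            raise FifoOverflow(
                f"FIFO holds at most {self.depth} transactions; "
                f"rejected push of {txn!r}"
            )
        self.items.append(txn)

    def pop(self):
        # Remove and return the oldest transaction.
        return self.items.popleft()


fifo = CheckedFifo(depth=5)
overflow_message = None
for txn in range(6):  # fire 6 transactions at a 5-deep FIFO
    try:
        fifo.push(txn)
    except FifoOverflow as err:
        overflow_message = str(err)

print(overflow_message)
```

The sixth push is rejected with a message that names the limit and the offending transaction, which is the "executable data book" idea in miniature.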

Q. What is assertion synthesis? Can someone explain this to me?

Ashar identified two kinds of assertions. "The first kind is implicit

with the problem domain that you are looking at such as CDC.

The second kind comes from mining simulation output and correlating that

to your verification." He has concerns about extracting meaningful

hypotheses from that.

Goodenough said they tried the second kind. "It may give you one little

gotcha that you haven't thought about. These assertions that come out

are not high value. They won't aid system-level debug or input to a

formal tool to do higher-level reasoning. Like packaged X-propagation

flows, it can inform you about something you didn't know about your

design. These generated assertions are machine-oriented and not very

designer meaningful."

Foster said there's two definitions. There's synthesis of properties

automatically. And there is the second where assertions are synthesized

for use in emulation and post-silicon validation.

Q. When do I use assertion synthesis?

Goodenough said they use it when the RTL is stable and functionally

complete. "When I think I have done everything I thought. It is

another look at things. Ooops. I wrote my test plan. I executed my

test plan. But I am sure there are things I haven't thought of?

Assertion synthesis can upgrade your test plan. I can look at my

testbench as it executes my test plan. I can see when I am not

getting a lot of activity on this event against this other critical

event in the design."

Q. Wasn't functional coverage supposed to solve that for us?

Goodenough said no. "Functional coverage is when I traverse a state

space, I want the simulation to hit a particular point in the state

space of the design. The verification team executes the validation

plan. What I am interested in is finding whether their validation

plan is complete or not. Because what kills me is an errata after I

have delivered the chip. I want to have some understanding of what

I haven't done. Assertion synthesis and stimulus grading have a role

to play in the completeness of our test plan."

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

---- ---- ---- ---- ---- ---- ----

STU SUTHERLAND PAPER ON X'S

Stuart Sutherland's "I'm Still In Love With My X, But Do I Want My X To Be

An Optimist, A Pessimist, Or Eliminated?" paper at DVCon was an excellent

summary of the good, the bad and the ugly with X's (unknowns) in a design.

Stu began with a quick review. X is a simulation-only value that can mean

- uninitialized

- Don't Care

- ambiguous

and has no reality in silicon. Stu identified 15 possible sources of X's, including

- uninitialized 4-state variables

- uninitialized registers and latches

- low power logic shutdown or power-up

- unconnected module input ports

- multi-driver conflicts (bus contention)

- operations with an unknown result

- out-of-range bit-selects and array indices

- logic gates with unknown output values

- set-up or hold timing violations

- user-assigned X values in hardware models

- testbench X injection

In simulation, X-optimism is when uncertainty on an input to an expression

or gate resolves to a known result instead of an X. This can hide bugs.

If...Else and Case decision statements are X-optimistic and may not match

silicon behavior. Stuart cites 7 different constructs and statement types

that are X-optimistic.

X-pessimism is when uncertainty on an input to an expression or gate always

resolves to an unknown result (X). It does not hide design ambiguities but

again does not match silicon. It can make debug difficult, propagate X's

unnecessarily and can cause simulation lock-up. Stuart cites 6 different

operators and assignments that are X-pessimistic.
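The difference between the two behaviors can be sketched with a toy three-valued model of a 2-to-1 select. This is a Python illustration under simplifying assumptions, not SystemVerilog and not code from Stuart's paper: values are the strings "0", "1" and "X", and the three function names are hypothetical. The optimistic version mimics an If...Else whose unknown condition falls through to the else branch; the reference version is one plausible notion of "what silicon could do".

```python
X = "X"  # unknown value; "0" and "1" are the known values


def select_optimistic(sel: str, a: str, b: str) -> str:
    """If...Else style: an unknown select is treated as false, so b is chosen."""
    return a if sel == "1" else b


def select_pessimistic(sel: str, a: str, b: str) -> str:
    """Any unknown on the select forces an unknown result."""
    if sel == X:
        return X
    return a if sel == "1" else b


def select_reference(sel: str, a: str, b: str) -> str:
    """Unknown select gives X only when the two data inputs could disagree."""
    if sel == X:
        return a if (a == b and a != X) else X
    return a if sel == "1" else b


# Unknown select, inputs differ: the optimistic model hides the ambiguity.
print(select_optimistic(X, "1", "0"))   # 0
print(select_reference(X, "1", "0"))    # X

# Unknown select, inputs agree: the pessimistic model reports a false X.
print(select_pessimistic(X, "1", "1"))  # X
print(select_reference(X, "1", "1"))    # 1
```

In the first pair the optimistic result looks clean although the real output is ambiguous; in the second pair the pessimistic result is unknown although either select value would give 1.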

While it is possible to use a two-state simulator with only zero and one,

verification then cannot check for ambiguity, which is important to detect.

Stuart says that the X is your friend and you should live with it.

The "?" operator is discussed as a possible alternative to the If...Else

statement. Stu thinks this is not a good idea since complex decisions coded

with "?" are hard to debug, and can still propagate an optimistic result.
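For reference, the standard behavior of `sel ? a : b` when `sel` is unknown is a bit-by-bit merge of the two operands. The toy Python model below sketches that rule on bit strings; `cond_op` is a hypothetical name and the operand values are arbitrary.

```python
def cond_op(sel, a, b):
    """Toy model of y = sel ? a : b on equal-width bit strings."""
    if sel == "1":
        return a
    if sel == "0":
        return b
    # Unknown select: keep bits where a and b agree, X elsewhere.
    return "".join(x if x == y else "x" for x, y in zip(a, b))


print(cond_op("x", "1100", "1010"))  # agreeing bits survive, the rest go to X
```

So the result is only partly unknown: any bits on which the two operands agree come through as known values even though the select itself is X.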

Some simulators have a special X-propagation option to change the behavior

of If...Else, Case, posedge and negedge statements. But not all simulators

have it, and this is not part of the SystemVerilog standard, so there may

be differences in behavior.

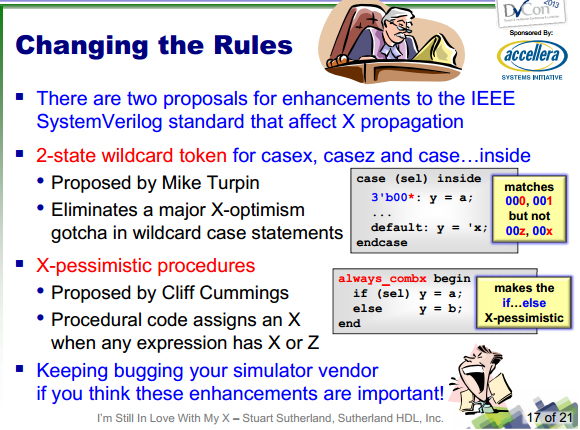

There are two proposals to the SystemVerilog standard for X-safe simulation.

One is proposed by Mike Turpin of ARM; the other by consultant Cliff Cummings.

Stuart recommends you contact your simulation vendor if you think these

enhancements are important.

---- ---- ---- ---- ---- ---- ----

DESIGN VS. VERIFICATION PANEL

The main DVcon panel, "Where Does Design End and Verification Begin?", had:

- John Goodenough of ARM

- Harry Foster of Mentor

- Oren Katzir of Intel

- Gary Smith of Gary Smith EDA

- Pranav Ashar of Real Intent

Brian Hunter from Cavium was the moderator and asked many questions during

the 90-minute discussion. A number of the questions and remarks related to

Wally's prior keynote address.

Q. What do designers need to do to contribute to the verification process?

Ashar cited two things: clearer articulation of the designer's intent

in executable form, and doing as much design hardening as possible,

using the wide variety of tools available to analyze the design at

RTL, pre-simulation, before handing it over to the verification team.

Ashar pointed to application-specific verification, as mentioned by

Wally Rhines in his keynote, and specifically to CDC.

Q. Are we seeing the adoption of static verification such as formal? In

the past, formal has over-promised and under-delivered.

Smith said that formal tools are primarily finding their home as

verification apps. "Designers are now using what were verification

team tools. In its broader sense, the top teams are using formal

as a design tool for some of the harder problems."

Hunter then commented that at Cavium their SOC designs are highly

configurable and that the use of formal has been an encumbrance and

is hard to use with their designs.

Q. What is the sweet spot for formal?

Ashar said today we are looking for solutions and not technologies.

"Solutions for CDC, X-management, power, and early functional

verification for easy errors. Formal is just one part. There are

a collection of techniques now used. An SOC today is a collection

of IP. The reference design is pretty much the same with the same

kinds of components. Verification is now at the interfaces and not

so much at the IP. You know the problem you are trying to solve

with an implicit spec that aids in the verification."

Foster said formal required a lot of sophistication in specification

in the past. "The beauty of automatic applications is that the user

does not have the burden of creating the properties."

Katzir said designers will not run a formal tool. But they will run

a CDC tool and get a report of actionable items. "And this is a

designer's tool, not a verification engineer's."

Q. Dr. Goodenough, you are using formal at ARM?

Goodenough replied that formal is an experiment that has been running

for 15 years (chuckles on the panel). "In the last 2 to 3 years, it

has gained traction as the tools mature. Practical work flows are

starting to appear in a number of areas. We clearly segment our use

of formal. We have developed an internal acronym - AHA. That is

using formal for Avoidance and that is where it crosses over into the

design side. Designers understand and can reason about the design.

If they use formal to replace or alongside their mini-testbench, that

leads to a more structured design, a design populated with assertions

and where the interfaces are much more cleanly documented...

Using pre-packaged flows supports good collaboration between designers

and verification engineers. There we are using formal as Hunting ('H'

in AHA). The assertions have been primarily authored by the designers,

but you are giving them a set of assertions that cover 80% of the design

and can be extended to 90%.

The jury is still out on the Absence ('A' in AHA). You are trying to

do some end-to-end formal proofs to show that deadlock or livelock or

data corruption is not a possibility. We have successes in informing

designers about things they didn't know about... You end with a lot

of spec clarifications instead of complete 100% proven assertions...

In all those areas there has been significant progress but there is

still a lot to go. The static formal engine is finding its home in

those 3 workflows and methodologies."

Q. Mr. Katzir, at Intel, do you have the designers doing verification?

Katzir replied that 70% to 80% of the designer's work is verification.

Whether it's formal tools, structural tools, or running simulations,

the verification team focuses on building the verification environment,

stitching together the verification IPs and enabling the designers to

do the verification. "That is just a fact of life."

Q. Are verification engineers skilled at breaking a design because they

do not know it?

Katzir said yes. "In an SOC environment where everything is an IP, each

unit comes with its verification IP (VIP). The knowledge of what this

IP is supposed to do is in the VIP, which enables the task of the

verification team. The design team still runs the scenarios. The trend

of SOC, which integrates different IP, calls for more standardization

and working with different languages in the same environment.

At Intel, for all the register configuration, fuses, TAP registers, we

are using SystemRDL. It abstracts those descriptions for us, and

captures all the specifications. From SystemRDL, we generate all the

different formats. That has been a major improvement for us.

Coverage is another aspect. If I can get, and often do get, a coverage

database for those IPs, this is helpful. Whatever tool was used,

structural, formal, or simulation, I can build up my SOC coverage much

faster. This is missing frequently in structural and formal tools.

Unless they are generating assertions that we can write coverage

statements on, we have to guess.

If I can catch those assigned executions, block-enable, undriven logic,

and put them in my coverage database, say using UCIS, then I don't

have to repeat those tests. That is a major gap we see right now.

I do agree with Gary that these apps are the sweet spots for formal

verification: CDC, X-propagation, low-power, DFT."

Q. Applying formal too early just shows that your design is incomplete.

Too late, and you have perhaps wasted simulation cycles. When is

the right point to do formal?

Goodenough repeated Brian's view. "I am struggling with finding some

objective metrics that tell me when the right time to run it is. It

seems to be up to the preference of the designer for the order of

attack. There frankly isn't a right or wrong answer. Running formal

early in some of the bring-up activities, we have seen value in

pulling bug curves forward in time, because the design is a little

better structured before it goes to bulk simulation. Beyond that,

I have no data."

Foster added: "I agree formal applications can be run early, such as

X-propagation which is an easy one to clean out those types of bugs.

This problem of when is not unique to formal. We have the same issue

with coverage and simulation. You don't want to gather coverage data

too soon; otherwise you have to toss all that data out."

Ashar added that some areas, such as CDC and complicated corner-case

control checking, are not suitable for simulation; static analysis

and formal are good for those problems. "You have to use

holistic techniques to find these problems and the timing is not so

much a matter of early or late. With X-propagation static analysis,

it improves simulation productivity. By doing the X-optimism and

X-pessimism mitigation in your RTL you improve the productivity of

the verification engineer."

Q. We are asking designers to do the RTL, synthesis and the timing. We

want them to do lint, code coverage, CDC, X-verification. What else?

Goodenough said designers can do Design for Verification, meaning

design practices like avoiding messy interfaces and instrumenting the design.

Then there is Design to Help Verification, such as instrumentation to

help integration-level debug -- which becomes key. The critical path

is a system-level bug seen on an emulator running late in a project

and the team is scratching their heads. What you need is a way for

the IP designer to look at the design and the guy running an advanced

application payload to look at the same design running and each can

see their view of it and figure it out together.

"We emphasize putting together debug environments with different levels

of visibility. The designers can do a lot to instrument the design to

provide 'finger-pointing' IP or assertions. It collapses the time, not

to solve the bug, but to triage where the problem is. Is it located in

the software, in the testbench, in the IP block, or in the way the IP

has been integrated? This is often the critical path on our projects

and in our customers' SOCs."

Katzir said there are gaps in tools and environments. The triage of

where the problem is located takes days and days of time. Most

debuggers are not handling SystemVerilog testbenches in a good way.

Regarding the mix of design hardware and running software, most of the

debug and verification is done in completely different teams, tools,

and environments.

There is a big gap in terms of the linkage of the technology and tools.

Something we are looking at is how you link the high-level specification

to the design code you are debugging. If you can see how a transaction

is failing in your high-level modeling and how this is linked to the

specific checker that failed, it can save valuable time.

Q. We seem to be asking designers to look beyond their RTL and take on more

knowledge of the software. At Cavium, when we have a simulation that

fails it usually is a problem in the SW environment. Are we asking

designers to triage that problem and find it in that SW environment?

Goodenough said no. "You ask the designer to help the guy running that

SW environment and tell him the problem is or is not in the IP.

Assertions allow you to do online documentation for your IP in a

simulation or emulation environment.

If you view them that way, my responsibility as a designer is to provide

an executable data book and that you're not doing anything dumb.

If your FIFO can only take 5 transactions and 6 have been fired at it,

tell them that it happened. Don't make them mine down through waveforms

to find that. You're asking designers to be aware of the SW integration

environments that their IP is going into.

And you need to give methodologies on how to instrument their designs."
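To make the FIFO remark concrete, here is a toy Python sketch of a block that reports a protocol violation at the moment it happens instead of leaving it to be dug out of waveforms. It is only an illustration of the idea, not ARM's methodology; the class `InstrumentedFifo`, its fields and the message format are all hypothetical.

```python
class InstrumentedFifo:
    """Toy FIFO that records an overflow when it occurs."""

    def __init__(self, depth=5):
        self.depth = depth
        self.items = []
        self.violations = []

    def push(self, item, time):
        if len(self.items) >= self.depth:
            # The "finger-pointing" check: say what rule broke and when.
            self.violations.append(
                f"t={time}: FIFO overflow, push #{len(self.items) + 1} "
                f"exceeds depth {self.depth}"
            )
            return False
        self.items.append(item)
        return True


fifo = InstrumentedFifo(depth=5)
for t in range(6):  # fire 6 transactions at a 5-deep FIFO
    fifo.push(f"txn{t}", time=t)
print(fifo.violations)
```

In RTL the same check would normally be an assertion inside the IP, so that whoever runs the software payload sees the message without needing to know the block's internals.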

Q. What is assertion synthesis? Can someone explain this to me?

Ashar identified two kinds of assertions. "The first kind is implicit

with the problem domain that you are looking at such as CDC.

The second kind comes from mining simulation output and correlating that

to your verification." He has concerns about extracting meaningful

hypotheses from that.

Goodenough said they tried the second kind. "It may give you one little

gotcha that you haven't thought about. These assertions that come out

are not high value. They won't aid system-level debug or input to a

formal tool to do higher-level reasoning. Like packaged X-propagation

flows, it can inform you about something you didn't know about your

design. These generated assertions are machine-oriented and not very

designer meaningful."

Foster said there are two definitions. The first is the automatic

synthesis of properties. The second is where assertions are synthesized

for use in emulation and post-silicon validation.

Q. When do I use assertion synthesis?

Goodenough said they use it when the RTL is stable and functionally

complete. "When I think I have done everything I thought. It is

another look at things. Ooops. I wrote my test plan. I executed my

test plan. But I am sure there are things I haven't thought of.

Assertion synthesis can upgrade your test plan. I can look at my

testbench as it executes my test plan. I can see when I am not

getting a lot of activity on this event against this other critical

event in the design."

Q. Wasn't functional coverage supposed to solve that for us?

Goodenough said no. "Functional coverage is when I traverse a state

space, I want the simulation to hit a particular point in the state

space of the design. The verification team executes the validation

plan. What I am interested in is finding whether their validation

plan is complete or not. Because what kills me is an errata after I

have delivered the chip. I want to have some understanding of what

I haven't done. Assertion synthesis and stimulus grading have a role

to play in the completeness of our test plan."

---- ---- ---- ---- ---- ---- ----

STU SUTHERLAND PAPER ON X'S

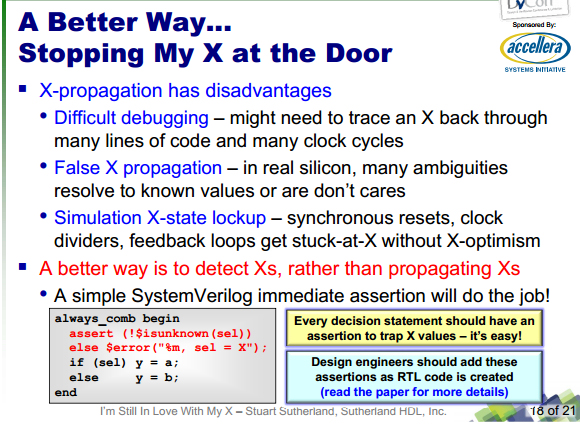

And Stu Sutherland offers his own solution to the X-propagation problem:

detect the X's instead of propagating them, by having a coding trap for every

decision statement.

Stu's final recommendations are: keep using your 4-state data types, on

power-up initialize hardware registers with random 0 or 1 values instead

of the default X, don't assign X values deliberately, and use X-detect

assertions on all decision statements.
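The idea of trapping an X at the decision point, rather than letting it pick a branch, can be sketched with a toy Python model. This is an illustration only and not Stu's actual code; `XDetected` and `trapped_if_else` are hypothetical names. In SystemVerilog the equivalent check would typically be an assertion on the condition, for instance one built on `$isunknown`.

```python
X = "x"  # hypothetical stand-in for the simulator's unknown value


class XDetected(Exception):
    """Raised when a decision is about to be made on an unknown value."""


def trapped_if_else(sel, a, b, where="decision"):
    """Toy model of an if...else guarded by an X-detect trap."""
    if sel not in (0, 1):
        # Stop and report, rather than silently taking the else branch.
        raise XDetected(f"{where}: condition is {sel!r}, expected 0 or 1")
    return a if sel == 1 else b


print(trapped_if_else(1, a="A", b="B"))
try:
    trapped_if_else(X, a="A", b="B", where="mux_sel")
except XDetected as err:
    print("trap fired:", err)
```

The trap turns a hidden X-optimism case into an immediate, located failure, which is the point of putting one on every decision statement.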

Stuart made an excellent and detailed presentation. I wish to expand the

final perspective a bit.

X-Management is another application that lends itself very well to an

Application-Specific solution. The notion of Assertion-Synthesis can be

adapted to automatically create X-detect assertions. An integrated

"Good Process" solution can then utilize multiple technologies, including

dynamic and formal, to provide an efficient X-management solution.

---- ---- ---- ---- ---- ---- ----

WHO ELSE EXHIBITED

This year DVcon'13 had 30 exhibitors; each of the Big 3, plus 6 new booths.

Synopsys + EVE + SpringSoft

Cadence

Mentor Graphics

Agnisys

Aldec

AMIQ EDA

* - ASICSoft/ProDesign

* - Atrenta

Blue Pearl Software

Breker

Calypto

* - CircuitSutra

Dini Group

Doulos

Duolog

* - FishTail

Forte

Innovative Logic

Jasper

Methodics

* - OneSpin GmbH

Oski Technology

Paradigm Works

PerfectVIPs

Real Intent

* - Realization Tech

S2C

Semifore

Sibridge Tech

Verific

* -- first time showing at DVcon

Because so many verification specialists were concentrated in one place,

this year's DVcon was a good source of quality sales leads for us

(Real Intent).

We definitely plan to return next year.

- Prakash Narain

Real Intent, Inc. Sunnyvale, CA

-----


John Cooley runs DeepChip.com, is a contract ASIC designer, and loves

hearing from engineers at (508) 429-4357.